AI is the biggest source of innovation available to organizations today. It can accelerate product development, improve operational efficiency, elevate customer experiences, and foster creativity by automating routine tasks and leaving the more strategic and innovative projects to humans. By leveraging AI, organizations can make better decisions, be more competitive, and adapt to market changes more effectively. From small businesses to large enterprises, every organization should be exploring AI right now.

However, new technology often brings new security challenges, and AI is no different. AI platforms are rapidly emerging in the market, much as the cloud did a decade ago, and their adoption is inevitable, whether organizations are prepared or not. The answer is not to simply block AI, but to secure it in a way that doesn’t hinder innovation.

At Orca Security, it’s our goal to provide security that’s a technology enabler for our customers, so they can focus on what they do best: propel their business forward by developing cloud-native apps and leveraging AI, without having to compromise security. In this blog, I’ll dig into the challenges that organizations face around AI innovation and how Orca is helping to remove those barriers.

What are the challenges to AI innovation?

AI innovation faces several challenges, including data privacy, compliance, and computing power. Access to high-quality data is often limited by privacy concerns, while regulations struggle to keep pace with rapid technological advancements. There’s also a gap in skilled talent and infrastructure, which hinders the development and implementation of new AI technologies.

And then there’s AI security. Attackers can exploit weaknesses in machine learning models to manipulate outcomes or extract sensitive information, which makes developing secure algorithms a top priority. Compliance with regulations such as GDPR, PCI DSS, and HIPAA, as well as newer AI-specific regulations and frameworks such as the EU AI Act, the US Blueprint for an AI Bill of Rights, and the NIST AI Risk Management Framework, adds further complexity to AI security. Balancing innovation with the need for security remains a significant hurdle in the AI landscape.

At Orca Security, we’ve been working to help remove these obstacles for organizations, and in particular our customers, so they can reap the benefits of AI innovation, without having to sacrifice security.

Five ways Orca is removing AI barriers

Here are five ways we are helping our customers and other organizations innovate with AI while staying secure.

#1. Driving awareness for AI security

One of the challenges of AI security is that the field is still young, with a shortage of comprehensive resources and seasoned experts. Organizations must often develop their own solutions to protect AI services without external guidance or examples. A great source of learning is the OWASP Machine Learning Security Top Ten list, which provides an overview of the top 10 security issues of machine learning systems.

To increase awareness of AI security risks, Orca recently conducted a detailed study of billions of cloud assets across cloud environments on AWS, Azure, Google Cloud, Oracle Cloud, and Alibaba Cloud. We presented our findings and insights in the 2024 State of AI Security Report.

For example, we found several widespread risks:

- 45% of Amazon SageMaker buckets are using non-randomized default bucket names.

- 98% of organizations have not disabled the default root access for Amazon SageMaker notebook instances.

- 62% of organizations have deployed an AI package with at least one CVE.

We hope the report will raise awareness of the most common AI risks already out there so developers, CISOs, and security professionals can better understand how to innovate with AI without taking security shortcuts.
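To make the first two findings concrete, here is a minimal Python sketch of how such checks could look. It is not how Orca performs its analysis. It assumes the default bucket name follows the SageMaker Python SDK pattern `sagemaker-<region>-<account-id>`, and that notebook records are dictionaries shaped like the output of the AWS `DescribeNotebookInstance` API. The function names are hypothetical.

```python
import re

# Assumption: default buckets are named sagemaker-<region>-<12-digit account id>,
# the pattern used by the SageMaker Python SDK when no bucket is specified.
DEFAULT_BUCKET_RE = re.compile(r"sagemaker-[a-z]{2}(?:-[a-z]+)+-\d-\d{12}")


def uses_default_bucket_name(name: str) -> bool:
    """Return True if the bucket name matches the predictable default pattern."""
    return DEFAULT_BUCKET_RE.fullmatch(name) is not None


def notebooks_with_root_access(notebooks):
    """Return names of notebook instances whose root access is not disabled.

    `notebooks` is an iterable of dicts with the keys "NotebookInstanceName"
    and "RootAccess" ("Enabled" or "Disabled"). A missing "RootAccess" key is
    treated as enabled, which is an assumption of this sketch.
    """
    return [
        nb["NotebookInstanceName"]
        for nb in notebooks
        if nb.get("RootAccess", "Enabled") == "Enabled"
    ]
```

A predictable bucket name is easier for an attacker to guess, so adding a random suffix to the name would make the first check return `False`.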

#2. Providing visibility into AI security risks

As with any cloud environment, visibility into AI deployments can be spotty. Shadow AI is common: developers spin up AI models, even if just for testing, without security teams knowing. Left untracked, these deployments can become security risks later.

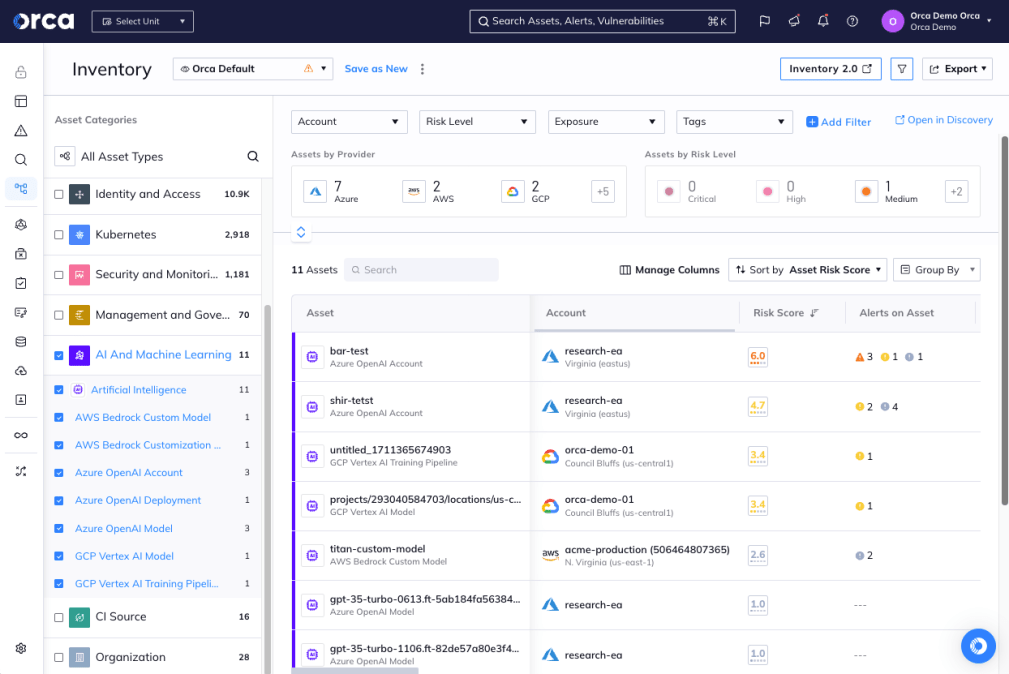

To solve this issue, we extended our cloud security platform to cover AI services and packages, providing the same risk insights and deep data that we provide on other cloud resources. Many of the security risks facing AI models and LLMs are similar to those facing other cloud assets, including limited visibility, accidental public access, unencrypted sensitive data, shadow data, and unsecured keys. In addition, we’re applying our existing technology to use cases that are unique to AI security, such as detecting sensitive data in training sets and preventing data poisoning and model skewing.

Imagine having a complete inventory of every AI model deployed in your cloud, including those “shadow AI” projects that often fly under the radar. Our AI-SPM (AI Security Posture Management) provides just that – a comprehensive bill of materials for your AI landscape. But we don’t stop at visibility. We’ve developed an out-of-the-box framework that continuously analyzes your cloud estate, ensuring the proper upkeep of AI models, network security, data protection, and more.

One of the most critical aspects of AI security is protecting sensitive data. Orca not only detects sensitive information in AI projects but also provides prioritized alerts with actionable remediation instructions. We’ve also tackled the often-overlooked issue of exposed AI keys and tokens, performing automatic scans of code repositories to catch these vulnerabilities before they can be exploited.
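As a rough illustration of what scanning a repository for exposed AI keys involves, the Python sketch below searches text files for strings that look like API tokens. It is a simplified example and not Orca's scanner. The two regular expressions are assumptions based on commonly seen key prefixes (`sk-` and `hf_`), and the function names and the default file suffix list are hypothetical.

```python
import re
from pathlib import Path

# Assumption: illustrative patterns only. Real scanners use many more rules
# plus entropy checks and validation to reduce false positives.
TOKEN_PATTERNS = {
    "openai-style key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "hugging-face-style token": re.compile(r"\bhf_[A-Za-z0-9]{30,}"),
}


def scan_text(text):
    """Return (line number, pattern label) pairs for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in TOKEN_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


def scan_repo(root, suffixes=(".py", ".env", ".yaml", ".yml", ".json")):
    """Walk a checked-out repository and scan files whose names end in `suffixes`."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and path.name.endswith(suffixes):
            hits = scan_text(path.read_text(errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

Calling `scan_repo(".")` from the root of a checkout returns a dictionary mapping each flagged file to the lines that need review.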

#3. Integrating AI security into the SDLC

Another important issue with AI innovation is that security must be included throughout the entire process, not added at the end of an AI project just before it’s put into production.

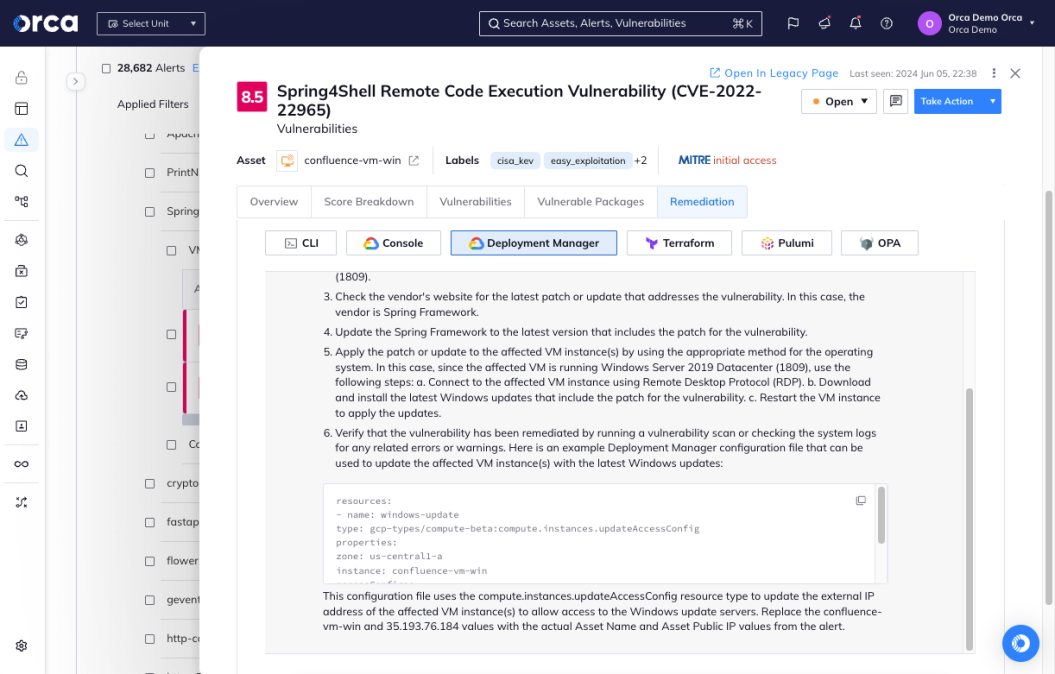

Integrating security practices earlier in the software development lifecycle, starting from the planning and design phases, helps identify and address vulnerabilities before they become deeply embedded in the code, reducing the cost and complexity of fixing issues later on. By incorporating security checks, testing, and reviews early, development teams can enhance the overall security posture of the AI program, minimize risks, and ensure compliance, all while accelerating development processes and reducing potential disruptions.

With Orca’s Shift Left Security, we can help developers detect and remediate risks in AI Infrastructure as Code and container images before they are shipped into production. If a new risk is detected in code that’s already been deployed, Orca helps identify the owner of the originating artifact, so that security teams can quickly assign remediation to the right developer, even down to the actual line of code that needs to be modified.
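To show what a shift-left check on AI Infrastructure as Code can look like in principle, here is a small Python sketch that inspects a parsed CloudFormation template before deployment. It is an illustration under stated assumptions, not Orca's implementation. It relies on the `AWS::SageMaker::NotebookInstance` resource type and its `RootAccess` and `DirectInternetAccess` properties, and it assumes both settings are enabled when omitted. The function name and the finding messages are hypothetical.

```python
def check_notebook_instances(template: dict):
    """Flag risky SageMaker notebook settings in a parsed CloudFormation template.

    Returns (logical resource ID, message) pairs so each finding can be
    traced back to the resource definition that needs to change.
    """
    findings = []
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::SageMaker::NotebookInstance":
            continue
        props = resource.get("Properties", {})
        # Assumption: an omitted property falls back to "Enabled".
        if props.get("RootAccess", "Enabled") == "Enabled":
            findings.append((logical_id, "root access is enabled"))
        if props.get("DirectInternetAccess", "Enabled") == "Enabled":
            findings.append((logical_id, "direct internet access is enabled"))
    return findings
```

Running a check like this in a CI pipeline and failing the build when the list is non-empty keeps the misconfiguration from reaching production.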

#4. Enhancing cloud security with AI

With the cybersecurity industry facing a serious skills shortage, the ever-increasing complexity of cloud environments, and the exponential growth of AI usage, cloud security teams are struggling to keep up. They receive hundreds of alerts each day that require investigation, remediation, and response.

As cloud environments increase in complexity, more advanced technical skills are needed, further adding to the cloud security skills gap. Many cloud security tools are difficult to operationalize and use, resulting in limited value to the organization and leaving teams struggling to understand their cloud environments.

For this reason, we’ve been at the forefront of leveraging AI to augment cloud security, lowering required skill thresholds, simplifying tasks, and using AI to calculate optimal cloud configurations. Orca’s AI-driven capabilities significantly improve cloud security postures and alleviate daily workloads and stress while allowing developers to focus on higher-value tasks.

#5. Providing AI security hands-on learning

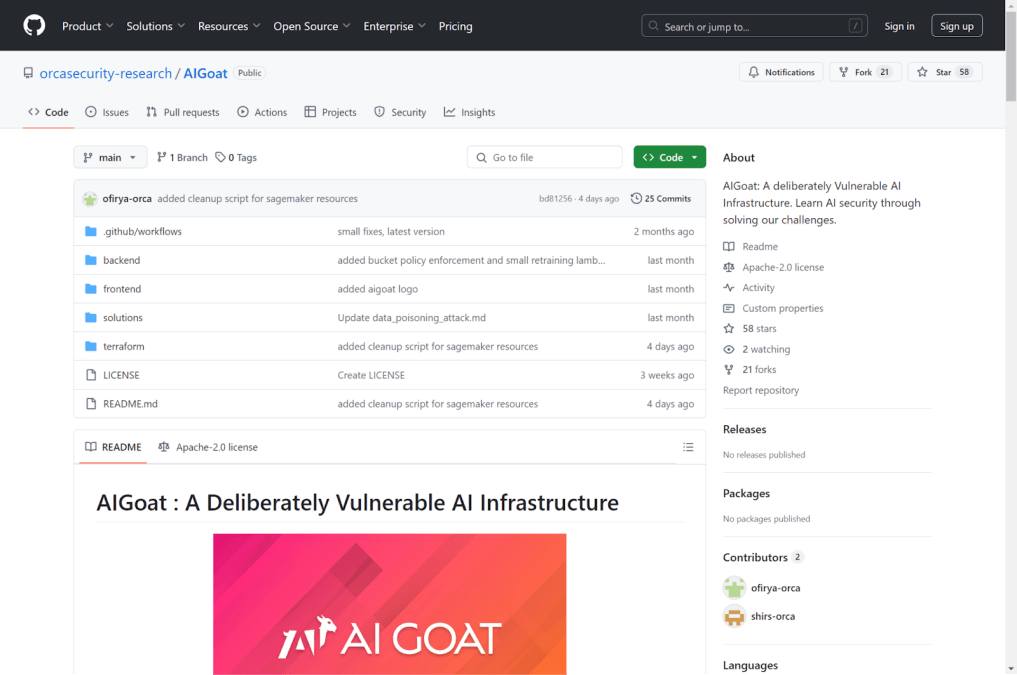

As a cloud security vendor, we take our role in advancing security seriously. Therefore, Orca is a proud contributor to the open source community. Recognizing the lack of hands-on AI security learning tools, Orca is providing AI Goat to developers and security practitioners. AI Goat is an intentionally vulnerable AI environment that includes numerous threats and vulnerabilities for testing and learning purposes.

Developers, security professionals, and pentesters can use this environment to understand how AI-specific risks (based on the OWASP Machine Learning Security Top Ten) can be exploited, and how organizations can best defend against these types of attacks.

Conclusion

As we continue to remove security barriers, we are empowering our customers to harness the transformative potential of AI. By prioritizing robust yet streamlined security solutions, we enable innovation at speed without compromising safety. This approach not only accelerates AI adoption but also ensures that our customers can explore and implement AI-driven advancements confidently and securely, fostering a future where innovation and security go hand in hand.

About the Orca Cloud Security Platform

Orca’s agentless-first Cloud Security Platform connects to your environment in minutes and provides 100% visibility of all your assets on AWS, Azure, Google Cloud, Kubernetes, and more. Orca detects, prioritizes, and helps remediate cloud risks across every layer of your cloud estate, including vulnerabilities, malware, misconfigurations, lateral movement risk, API risks, AI risks, sensitive data at risk, weak and leaked passwords, and overly permissive identities.

Schedule a 1:1 demo to learn more about how Orca can secure your AI innovation efforts.