As artificial intelligence becomes foundational to modern business and software development, organizations are discovering a new dimension of risk. AI now powers everything from customer chatbots and data analytics to cloud automation and code generation. Yet these same systems introduce new vulnerabilities that traditional security tools and processes were never designed to handle.

AI Security is the discipline focused on protecting AI systems, data, and operations from misuse, manipulation, or attack. It also encompasses the responsible and secure use of AI across environments. As organizations deploy AI models and services in the cloud, understanding and implementing AI security is quickly becoming a critical part of every cloud security strategy.

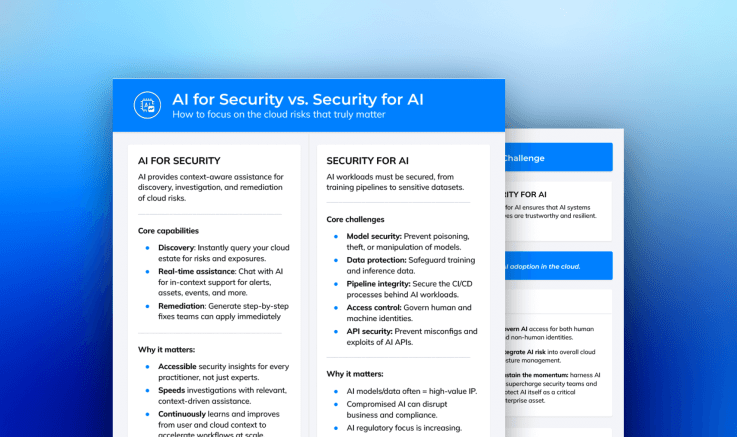

What do we mean by “AI Security”?

“AI security” can mean several things depending on context. Broadly, it refers to the practices, technologies, and governance that protect AI systems so that they deliver value safely and resiliently.

It is often discussed alongside a complementary discipline, AI for security. The two differ as follows:

- AI for security: Using AI and machine learning to improve threat detection, prioritize risks, and automate response across cloud environments.

- Security of AI: Safeguarding the models, data, and pipelines that power AI applications themselves from adversarial manipulation, data poisoning, or misuse.

Both are essential. As organizations deploy AI within their cloud workloads, containerized applications, and CI/CD pipelines, securing these systems becomes as important as securing the infrastructure that supports them.

Why AI Security matters (especially for cloud environments)

AI models and pipelines are quickly becoming part of the cloud attack surface. Attackers can poison training data, insert hidden backdoors, exploit inference endpoints, or exfiltrate model parameters to gain privileged insight into proprietary systems.

If your organization relies on AI and ML to enhance cybersecurity, those systems themselves become mission-critical. A compromise doesn’t just expose data; it undermines the integrity of the very tools used to protect it.

In highly dynamic cloud environments, where workloads spin up and down rapidly and automation drives speed, the absence of AI-specific guardrails can turn automation into a risk amplifier.

Common challenges include:

- Model and data sprawl: AI services are deployed rapidly across SaaS, containers, and serverless environments, often without centralized oversight.

- Limited visibility: Security teams may not know which models handle sensitive data, which access production workloads, or which expose public inference endpoints.

- Gaps in traditional controls: IAM policies or network segmentation do little to prevent model inversion, adversarial inputs, or prompt injection attacks.

- Compliance pressure: AI governance and data-protection regulations are emerging rapidly worldwide. Building AI security maturity now can become a competitive advantage as those requirements take effect.

As the Cloud Security Alliance summarizes, “AI security primarily revolves around safeguarding systems to ensure confidentiality, integrity, and availability.” For cloud-native enterprises, that principle must now extend to models, datasets, and pipelines.

Key pillars of AI Security

Establishing a resilient AI security capability requires a blend of governance, lifecycle management, and continuous monitoring.

Governance and model lifecycle controls

Define ownership, data lineage, and accountability for every model. Maintain visibility into who built each model, what data it uses, and how it’s being monitored.

Confidentiality, integrity, and availability (CIA)

Protect model weights, training data, and inference endpoints through encryption, access controls, and observability. Ensure AI services are resilient and tamper-resistant.
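
One way to make the integrity part of this pillar concrete is to verify a model artifact against a trusted digest before loading it. The sketch below is a minimal illustration using only the Python standard library. The function names are hypothetical, and it assumes the expected SHA-256 digest is stored somewhere you already trust, such as a signed release manifest.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_digest: str) -> bool:
    """Compare the file's digest to a trusted value using a constant-time check."""
    return hmac.compare_digest(sha256_of_file(path), expected_digest.lower())
```

A deployment step could call `verify_artifact` and refuse to load weights when it returns `False`. A digest check only detects changes made after the digest was recorded, so it complements encryption and access controls rather than replacing them.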

Threat modeling and adversarial resilience

AI systems face unique threats such as poisoning, backdoors, and inference abuse. Integrate adversarial threat modeling and testing into your regular risk assessment processes.

Monitoring, detection, and response

Track model drift, anomalous performance, and suspicious API activity. Treat significant deviations or abuse of inference endpoints as potential security incidents.

Secure deployment and infrastructure integration

AI often runs within cloud-native infrastructure—containers, functions, and data pipelines. Apply least privilege, segmentation, and runtime monitoring just as you would for other workloads.

Transparency and explainability

Ensure the ability to audit model behavior and detect unintended bias or drift. Explainable AI builds trust and supports compliance with emerging regulatory requirements.

Common risk vectors in AI Security

Organizations building AI security programs should understand the main types of threats facing AI systems today:

- Model poisoning (also called data poisoning): Malicious data is injected into training sets, causing the model to learn incorrect or attacker-chosen behavior.

- Backdoor attacks: Hidden triggers embedded during training activate malicious behavior under specific conditions.

- Model inversion and theft: Attackers infer sensitive training data, or replicate a proprietary model's behavior and parameters, through repeated queries to exposed inference APIs.

- API abuse: Weak authentication on inference endpoints allows data extraction or denial-of-service attacks.

- Drift and bias: Without ongoing monitoring, model performance can degrade or skew over time, creating business and reputational risk.

- Automation risk: AI/ML used for security may produce blind spots if the model is poorly tuned or if the data it sees in production drifts away from what it was trained on.

How to strengthen your AI Security posture

Building a resilient AI security posture requires the same foundations that underpin cloud security maturity: visibility, context, and continuous governance. The following five steps can help organizations operationalize AI security within their cloud environments.

Step 1: Discover and inventory AI assets

- Begin by identifying every AI and machine learning system across your environment—models in production, inference endpoints, training pipelines, and associated data stores.

- Classify each asset by sensitivity and exposure. Which models process confidential data? Which are externally accessible? Which drive critical business functions?

- Map interdependencies across data sources, compute resources, and third-party APIs to establish a baseline understanding of your AI footprint.
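
As an illustration of what such an inventory might look like in practice, the sketch below models AI assets as simple records and answers two of the questions above: which assets are both externally accessible and confidential, and which assets depend on a given data source. The class, field, and asset names are all hypothetical; a real inventory would normally be populated from cloud APIs or a CMDB rather than hand-written.

```python
from dataclasses import dataclass, field
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


@dataclass
class AIAsset:
    name: str
    kind: str  # "model", "inference_endpoint", "training_pipeline", or "data_store"
    owner: str
    sensitivity: Sensitivity
    externally_accessible: bool = False
    business_critical: bool = False
    depends_on: list[str] = field(default_factory=list)  # names of upstream assets


def needs_priority_review(asset: AIAsset) -> bool:
    """Flag assets that are externally reachable and touch confidential data."""
    return asset.externally_accessible and asset.sensitivity is Sensitivity.CONFIDENTIAL


def dependents_of(inventory: list[AIAsset], target: str) -> list[str]:
    """List the assets that depend directly on `target`."""
    return sorted(a.name for a in inventory if target in a.depends_on)


# Hypothetical inventory entries, for illustration only.
inventory = [
    AIAsset("customer-records", "data_store", "data-eng", Sensitivity.CONFIDENTIAL),
    AIAsset("churn-model", "model", "data-science", Sensitivity.CONFIDENTIAL,
            depends_on=["customer-records"]),
    AIAsset("churn-api", "inference_endpoint", "platform", Sensitivity.CONFIDENTIAL,
            externally_accessible=True, business_critical=True,
            depends_on=["churn-model"]),
]

flagged = [a.name for a in inventory if needs_priority_review(a)]
```

Here `flagged` contains only the public endpoint, and `dependents_of(inventory, "customer-records")` shows which model would be affected if that data store were compromised.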

Step 2: Assess and prioritize risk

- Evaluate each model or pipeline against key risk factors such as data sensitivity, external exposure, dependency chains, and the maturity of monitoring controls.

- Incorporate AI-specific threats—like model poisoning, prompt injection, and inference abuse—into your existing cloud threat modeling process.

- Prioritize the systems whose compromise would have the greatest business or regulatory impact.
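
The prioritization described above can be sketched as a weighted score over the risk factors named in this step. This is a minimal example under stated assumptions: the weights, the 0.0 to 1.0 normalization, and the asset names are hypothetical, and a real program would calibrate them against its own business and regulatory impact criteria.

```python
from dataclasses import asdict, dataclass

# Hypothetical weights; tune them to your own risk appetite. They sum to 1.0.
WEIGHTS = {
    "data_sensitivity": 0.35,
    "external_exposure": 0.30,
    "dependency_depth": 0.15,
    "monitoring_gap": 0.20,
}


@dataclass(frozen=True)
class RiskFactors:
    """Each factor is normalized from 0.0 (low risk) to 1.0 (high risk)."""
    data_sensitivity: float
    external_exposure: float
    dependency_depth: float
    monitoring_gap: float  # 1.0 means no monitoring is in place


def risk_score(factors: RiskFactors) -> float:
    """Weighted sum of the normalized factors, between 0.0 and 1.0."""
    values = asdict(factors)
    for name, value in values.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0.0 and 1.0, got {value}")
    return sum(WEIGHTS[name] * value for name, value in values.items())


def prioritize(assets: dict[str, RiskFactors]) -> list[tuple[str, float]]:
    """Return (asset name, score) pairs, highest risk first."""
    scored = [(name, risk_score(f)) for name, f in assets.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical assets, for illustration only.
ranking = prioritize({
    "internal-summarizer": RiskFactors(0.3, 0.0, 0.2, 0.5),
    "public-chatbot": RiskFactors(0.8, 1.0, 0.6, 0.7),
    "fraud-model": RiskFactors(1.0, 0.2, 0.4, 0.1),
})
```

A linear score like this is easy to explain to stakeholders, which is the main reason to start with it. It cannot capture interactions between factors, so treat the ranking as a starting point for review, not a verdict.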

Step 3: Implement controls

- Apply foundational governance controls including model versioning, audit logging, and access restrictions.

- Extend standard cloud security practices—least-privilege IAM, network segmentation, container runtime protection—to the infrastructure supporting AI workloads.

- Layer on AI-specific defenses such as adversarial testing, input anomaly detection, drift monitoring, and validation of inference outputs.

- Integrate AI assets into your broader cloud posture management and vulnerability assessment workflows to maintain unified visibility and context.
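
To illustrate one of the AI-specific defenses listed above, the sketch below shows a very simple form of input anomaly detection for numeric features: it learns a per-feature mean and standard deviation from known-good inputs and flags requests that fall far outside that range. The class name and the default threshold are hypothetical, and a z-score check is only one of many possible techniques; it does not address text inputs or prompt injection.

```python
import statistics


class InputAnomalyDetector:
    """Flags numeric inputs that deviate strongly from a known-good baseline."""

    def __init__(self, baseline: list[list[float]], threshold: float = 4.0):
        if not baseline:
            raise ValueError("baseline must contain at least one row")
        columns = list(zip(*baseline))
        self.means = [statistics.fmean(col) for col in columns]
        # A tiny floor avoids division by zero for features that never vary.
        self.stdevs = [statistics.pstdev(col) or 1e-9 for col in columns]
        self.threshold = threshold

    def max_z_score(self, row: list[float]) -> float:
        """Largest absolute z-score across all features of one input row."""
        if len(row) != len(self.means):
            raise ValueError("row has a different number of features than the baseline")
        return max(
            abs(x - mean) / stdev
            for x, mean, stdev in zip(row, self.means, self.stdevs)
        )

    def is_anomalous(self, row: list[float]) -> bool:
        return self.max_z_score(row) > self.threshold


# Hypothetical baseline of known-good inputs with two features each.
detector = InputAnomalyDetector(
    [[1.0, 10.0], [1.2, 11.0], [0.8, 9.0], [1.1, 10.5], [0.9, 9.5]]
)
```

An inference service could call `detector.is_anomalous(features)` before scoring a request and route flagged inputs to logging or review instead of silently serving a prediction.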

Step 4: Continuously monitor and respond

- Establish telemetry for model performance, inference requests, and drift indicators. Monitor for unusual patterns, such as spikes in API traffic or unexpected shifts in output distributions.

- Treat anomalies as potential security incidents and feed them into your existing alerting and response workflows.

- Conduct regular red teaming and adversarial testing to validate model resilience and update detection thresholds as environments evolve.
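
One common way to quantify "unexpected shifts in output distributions" is the Population Stability Index (PSI), which compares a recent window of model scores against a reference window. The sketch below is a minimal standard-library version. The binning strategy and the 0.25 alert threshold are assumptions; 0.25 is a commonly cited rule of thumb, not a universal standard.

```python
import math

PSI_ALERT_THRESHOLD = 0.25  # rule-of-thumb value; tune for your own models


def population_stability_index(
    expected: list[float],
    actual: list[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample and a recent sample of model outputs."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # fall back if the reference is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps the logarithm defined for empty bins.
        return [max(count / len(values), eps) for count in counts]

    ref, cur = proportions(expected), proportions(actual)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_detected(expected: list[float], actual: list[float]) -> bool:
    return population_stability_index(expected, actual) > PSI_ALERT_THRESHOLD
```

When `drift_detected` returns `True`, the result can be raised as an event in the same alerting pipeline used for other security signals, so that drift is triaged alongside traffic anomalies instead of in a separate data science dashboard.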

Step 5: Strengthen governance and culture

- Align DevOps, data science, and security teams. Sustainable AI security depends on this cross-functional collaboration.

- Define clear ownership for each model’s security and monitoring responsibilities. Maintain a living model catalog, complete with risk assessments and remediation status.

- Provide ongoing education on AI-specific threats, and ensure new AI initiatives align with your overarching cloud security strategy—eliminating shadow AI and unmanaged risk.

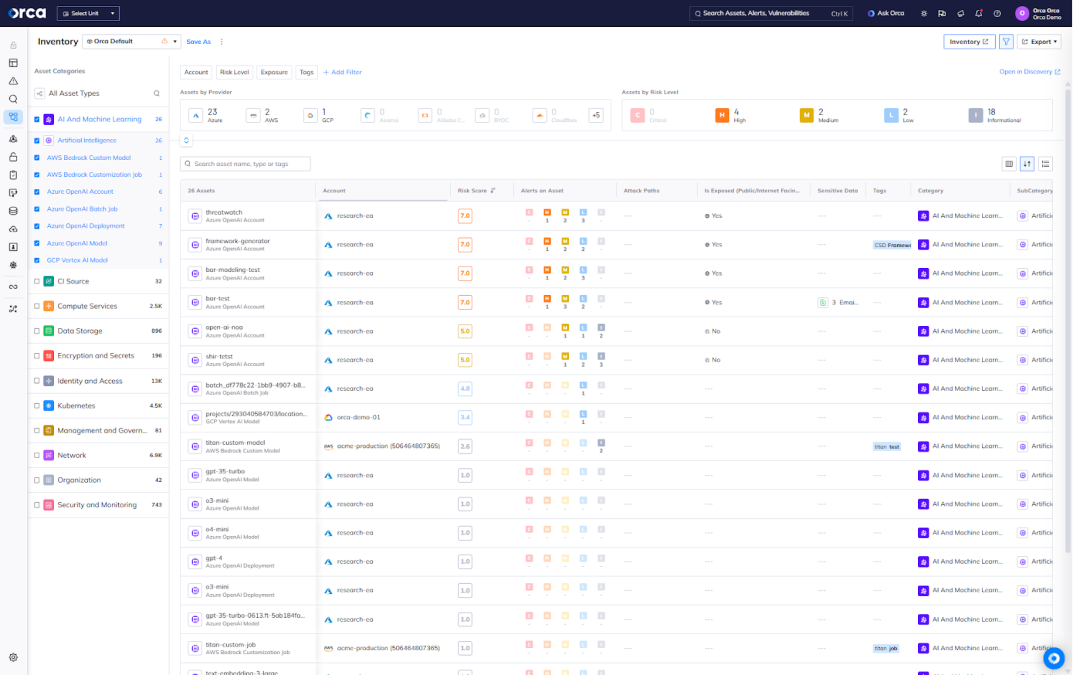

How Orca delivers AI Security in the cloud

Securing AI models and pipelines in the cloud requires the same holistic, context-driven visibility that the Orca Cloud Security Platform already delivers across workloads, containers, identities, and data. The Orca Platform provides organizations with the visibility and intelligence needed to identify, prioritize, and remediate AI-related risks without requiring agents or adding complexity.

Orca’s agentless-first architecture provides full-stack visibility across your entire cloud estate, delivering a comprehensive view of all AI services, applications, and models running in your environment, including shadow AI that may operate outside sanctioned deployments.

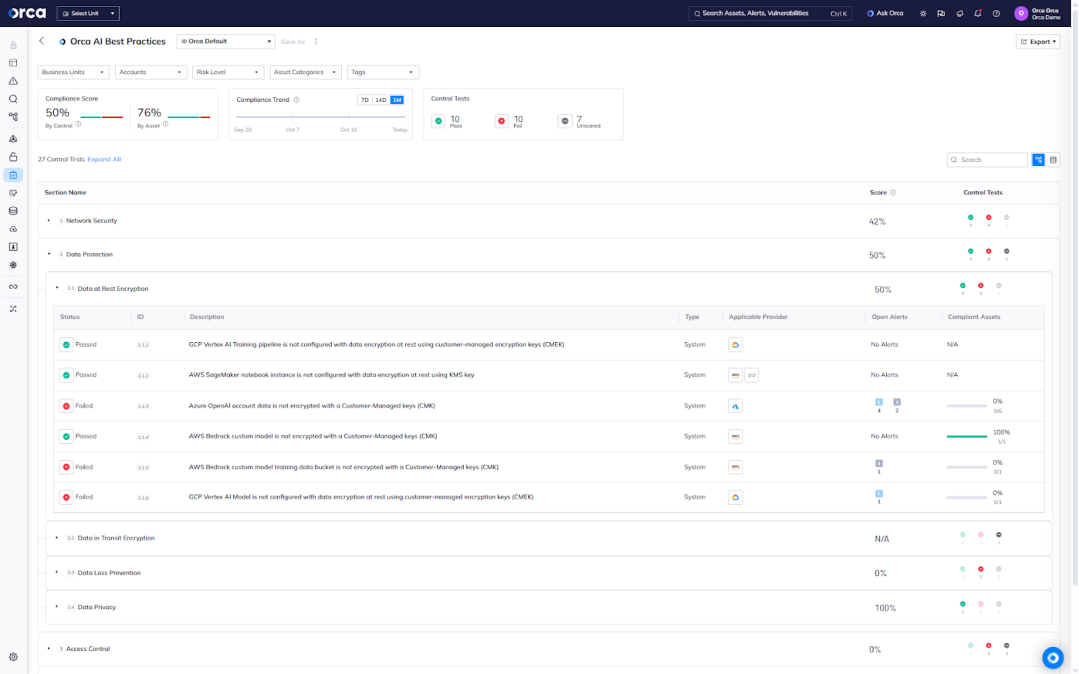

To help organizations securely innovate with AI, Orca offers a dedicated framework aligned to AI Security best practices. This framework continuously and automatically monitors, tracks, and addresses AI-related risks to ensure every model and service remains properly governed and protected.

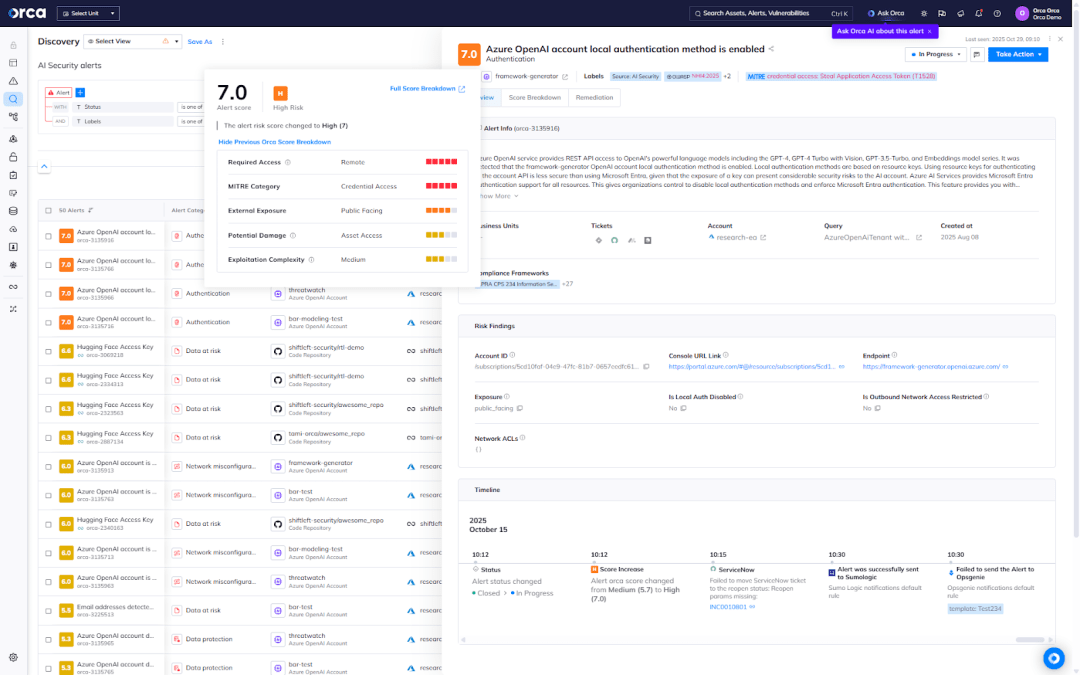

Orca verifies that AI services are securely configured, covering areas such as network controls, data protection, access management, and identity governance. It also alerts you if sensitive data is detected within AI training files, allowing you to take immediate action to prevent unintended exposure.

In addition, Orca identifies when API keys, tokens, or other credentials for AI services and software packages are exposed in code repositories, helping teams quickly mitigate risk before attackers can exploit it.

As AI becomes increasingly embedded in cloud applications, Orca continues to evolve its platform to incorporate AI-specific telemetry, model lifecycle visibility, and data-sensitivity analysis—all within the same unified view. This allows organizations to strengthen their AI security posture using the same trusted platform they already rely on for cloud and container protection.

Bringing it all together

AI brings tremendous opportunity—smarter automation, improved detection, and entirely new ways to drive innovation. But every new capability also introduces a new surface of risk. From training data to inference endpoints, from the threat of adversarial input to model drift, the security stakes are real.

“AI security” isn’t a niche topic anymore. It is core to modern cloud security posture. By addressing it now with governance, visibility, prioritized controls, and continuous monitoring, organizations can reduce their exposure to tomorrow’s model-based attacks.

If your cloud environment includes AI/ML models—or you’re planning to leverage them—make AI security a foundational part of your strategy rather than an afterthought.

Ready to learn more?

To learn more about how AI is reshaping cloud risk, download the 2025 State of Cloud Security Report. And if you’d like help assessing where your AI models sit in your cloud risk surface, schedule a personalized 1:1 demo.