Orca Security today released its inaugural State of AI Security Report, a deep dive into the security risks of deployed AI models in cloud services. The report presents an in-depth analysis of actual risks discovered in production environments, which inform the report’s key recommendations for fortifying AI security.

Developed by the Orca Research Pod, this report was compiled by analyzing data captured from billions of cloud assets on AWS, Azure, Google Cloud, Oracle Cloud, and Alibaba Cloud scanned by the Orca Cloud Security Platform.

In this post, we review the report’s important findings and takeaways.

Key report findings

Orca’s report reveals several key findings, including:

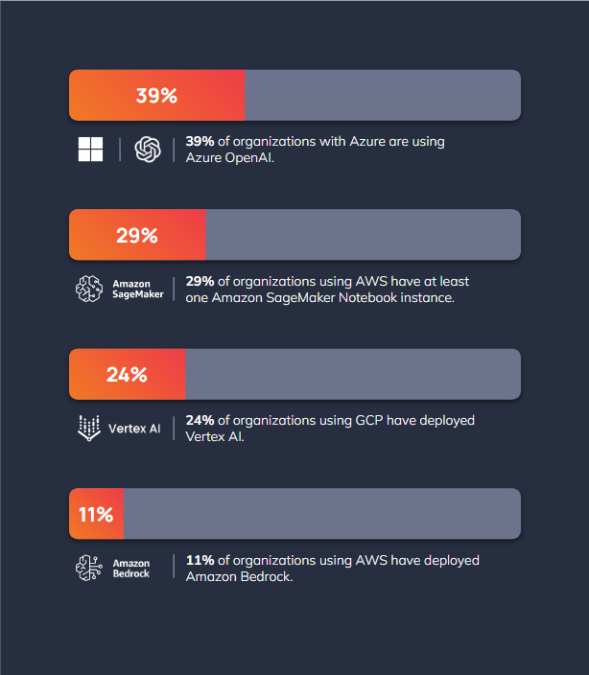

- More than half of organizations are deploying their own AI models: According to Orca researchers, 56% of organizations have adopted AI to build custom applications. Azure OpenAI is currently the front runner among cloud provider AI services, used by 39% of organizations with Azure. Scikit-learn is the most used AI package (43%) and GPT-3.5 is the most popular AI model, with 79% of organizations using it in their cloud.

- Default AI settings are often accepted without regard for security: The default settings of AI services tend to favor development speed over security, so most organizations end up running with insecure defaults. For example, 45% of Amazon SageMaker buckets use non-randomized default bucket names, and 98% of organizations have not disabled the default root access for Amazon SageMaker notebook instances.

- Most vulnerabilities in AI models are low to medium risk—for now: 62% of organizations have deployed an AI package with at least one CVE. However, most of these vulnerabilities are low to medium risk with an average CVSS score of 6.9, and only 0.2% of the vulnerabilities have a public exploit (compared to the 2.5% average).
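The two SageMaker defaults mentioned above can be checked with a few lines of Python. The following is a minimal sketch, not Orca's detection logic: it assumes the default bucket naming scheme used by the SageMaker Python SDK (`sagemaker-<region>-<account-id>`) and the `RootAccess` field returned by the SageMaker `DescribeNotebookInstance` API. The function names and the region pattern are illustrative assumptions.

```python
from __future__ import annotations

import re

# Assumption: the SageMaker Python SDK names its default bucket
# "sagemaker-<region>-<12-digit account id>", e.g. sagemaker-us-east-1-123456789012.
# The region part of the pattern is a simplification for illustration.
DEFAULT_BUCKET_RE = re.compile(r"sagemaker-[a-z]{2}(?:-[a-z]+)+-\d-\d{12}")


def is_default_sagemaker_bucket(name: str) -> bool:
    """Return True if the bucket name follows the predictable default pattern."""
    return DEFAULT_BUCKET_RE.fullmatch(name) is not None


def notebooks_with_root_access(notebooks: list[dict]) -> list[str]:
    """Return names of notebook instances where root access is still enabled.

    Each dict is expected to look like a DescribeNotebookInstance response.
    A missing RootAccess field is treated as "Enabled", the service default.
    """
    return [
        nb["NotebookInstanceName"]
        for nb in notebooks
        if nb.get("RootAccess", "Enabled") == "Enabled"
    ]


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    print(is_default_sagemaker_bucket("sagemaker-us-east-1-123456789012"))
    print(notebooks_with_root_access([
        {"NotebookInstanceName": "training-nb", "RootAccess": "Enabled"},
        {"NotebookInstanceName": "locked-nb", "RootAccess": "Disabled"},
    ]))
```

In a real environment, the inputs would come from calls such as boto3's S3 `list_buckets` and SageMaker `describe_notebook_instance`. The sketch works on plain strings and dictionaries so it can run without AWS credentials.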

As the report reveals, current adoption rates for AI signal that organizations are making a strategic pivot toward the technology. As noted above, more than one in two organizations are leveraging AI to build custom solutions. This number is high, Orca researchers point out, considering how new the technology is and the substantial capital investment it requires. It signals both a long-term commitment to the technology and conditions that demand enhanced AI security.

“Orca’s 2024 State of AI Security Report provides valuable insights into how prevalent the OWASP Machine Learning Security Top 10 risks are in actual production environments. By understanding more about the occurrence of these risks, developers and practitioners can better defend their AI models against bad actors. Anyone who cares about AI or ML security will find tremendous value in this study.”

Shain Singh, Project Co-Lead of the OWASP ML Security Top 10

Cloud Provider AI service usage

The report also highlights the significant adoption of cloud providers’ AI services, especially given how recently they were introduced. For example, nearly four in 10 organizations using Azure also leverage Azure OpenAI, which Microsoft first introduced in November 2021.

AI security risks

As organizations invest more in AI innovation, AI security does not yet appear to be top of mind. The report uncovers a wide range of AI risks shared by most organizations, including exposed API keys, overly permissive identities, and misconfigurations. Orca researchers trace many of these risks back to the default settings of cloud providers, which often grant wide access and broad permissions.

Additionally, the report examines the high prevalence of vulnerabilities in AI packages deployed in cloud environments. While most of them present low to medium risk, Orca researchers point out that even a single vulnerability can become part of a critical attack path for attackers to exploit.

Challenges in AI security

As the Orca Research Pod explores, organizations looking to enhance their AI security are likely to encounter strong headwinds created by a number of factors. First, the speed of AI innovation continues to accelerate, challenging AI security teams and solutions to keep pace. The nascency of AI also creates a shortage of comprehensive resources and seasoned experts in AI security, often leaving organizations to compensate for this gap on their own. Further resistance comes from changes to the regulatory landscape, with new institutions and frameworks starting to emerge and govern the use of AI. For example, the European Union AI Act went into effect August 1, 2024, and sets regulatory requirements for specific AI applications, in some cases banning those found to pose “unacceptable risk.” As Orca researchers note, organizations will need to stay agile as new regulations take effect.

“Security teams need to effectively manage risk to support current AI usage as well as increased adoption of AI to support business growth initiatives. This study by the Orca Research Pod with data from Orca Security scans sheds light on AI adoption and the top risks that need attention, including vulnerabilities in AI packages, AI exposures, access issues, and misconfigurations. The study offers useful recommendations and practical ways for security teams to proactively mitigate risk to prevent costly mitigation efforts and incidents later.”

Melinda Marks, Practice Director, Cybersecurity at Enterprise Strategy Group

Promoting AI security: 5 best practices

So, how do organizations balance AI innovation with AI security? The report presents a comprehensive list of best practices, some of which include:

- Beware of default settings: Always check the default settings of new AI resources and restrict these settings whenever appropriate.

- Limit privileges: Excessive privileges give attackers freedom of movement and a platform to launch multi-phased attacks. Protect against lateral movement and other threats by eliminating redundant privileges and restricting access.

- Manage vulnerabilities: Although AI security is a new field, most vulnerabilities are not new. AI services often rely on existing solutions with known vulnerabilities. Detecting and mapping those vulnerabilities in your environments is essential to managing and remediating them appropriately.

- Secure data: Favor more restrictive settings for data protection, such as opting for self-managed encryption keys while ensuring you enable encryption at rest. Also, offer awareness training that instructs users on data security best practices.

- Isolate networks: Limit network access to your assets, opening them to network activity only when necessary and precisely defining what type of network traffic to allow in and out.
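As an illustration of the "Secure data" and "Isolate networks" practices above, the sketch below flags a SageMaker notebook instance that is reachable from the internet or lacks a customer managed encryption key. It is a hedged example rather than a method from the report: the field names (`DirectInternetAccess`, `SubnetId`, `KmsKeyId`) come from the SageMaker `DescribeNotebookInstance` response, while the `audit_notebook` function, its finding messages, and the choice to treat a missing `DirectInternetAccess` value as enabled are assumptions made for this example.

```python
from __future__ import annotations


def audit_notebook(nb: dict) -> list[str]:
    """Return a list of findings for one notebook instance description.

    The dict is expected to look like a DescribeNotebookInstance response.
    Missing fields are treated as the less secure setting (an assumption).
    """
    findings = []

    # Isolate networks: direct internet access bypasses VPC-level controls.
    if nb.get("DirectInternetAccess", "Enabled") == "Enabled":
        findings.append("direct internet access is enabled")

    # Isolate networks: without a subnet, the instance is not attached to a VPC.
    if not nb.get("SubnetId"):
        findings.append("instance is not attached to a VPC subnet")

    # Secure data: no KmsKeyId means no customer managed key on the storage volume.
    if not nb.get("KmsKeyId"):
        findings.append("no customer managed KMS key for the storage volume")

    return findings


if __name__ == "__main__":
    # Hypothetical instance description for illustration only.
    example = {
        "NotebookInstanceName": "training-nb",
        "DirectInternetAccess": "Enabled",
        "SubnetId": "subnet-0abc",
    }
    for finding in audit_notebook(example):
        print(f"{example['NotebookInstanceName']}: {finding}")
```

Returning plain strings keeps the check easy to extend with further rules, for example the root access setting discussed earlier in this post.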

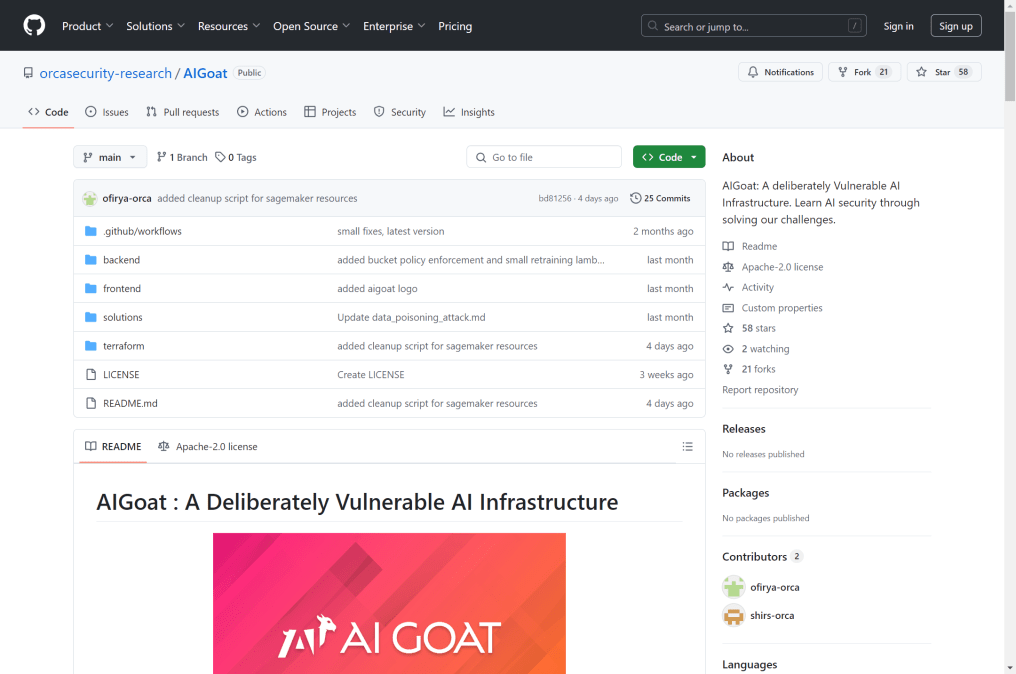

Get hands-on with AI Goat

Provided as an open-source tool on the Orca Research GitHub repository, Orca’s AI Goat is an intentionally vulnerable AI environment that includes numerous threats and vulnerabilities for testing and learning purposes.

Developers, security professionals, and pentesters can use this environment to understand how AI-specific risks (based on the OWASP Machine Learning Security Top 10) can be exploited, and how organizations can best defend against these types of attacks.

About the Orca Cloud Security Platform

The Orca Cloud Security Platform is an agentless-first cloud security solution that detects, prioritizes, and remediates security risks and compliance issues across AWS, Azure, Google Cloud, and Kubernetes environments. The Orca Platform leverages patented SideScanning Technology™ to provide comprehensive risk detection and full coverage of the cloud estate.

The Orca Platform leverages generative AI to power search, remediation, IAM policy optimization, and more, reducing workloads and helping teams improve their cloud security posture faster.

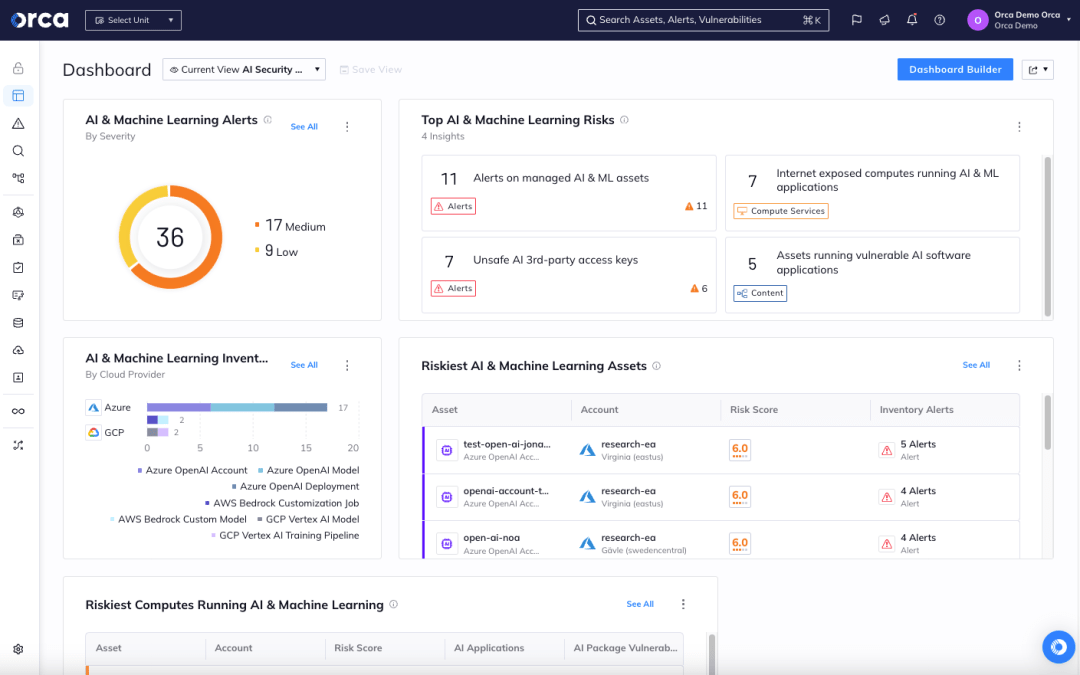

To help Orca customers leverage their own AI initiatives securely, Orca includes AI Security Posture Management (AI-SPM). Orca’s AI-SPM provides full visibility into all deployed AI models (including shadow AI) and protects against data tampering and leakage.

Orca is trusted by global innovators, including Unity, Wiley, SAP, Postman, Autodesk, Lemonade, and Gannett. Schedule a personalized 1:1 demo to see how Orca can help you fortify your AI security in the cloud.