Over the last year, we’ve witnessed a pivotal shift in how large language models (LLMs) are used: not just as static assistants but as adaptive, memory-driven agents that can persist and recall information. One of the most promising developments in this direction is the Model Context Protocol (MCP) introduced by Anthropic. But Anthropic isn’t alone. Google (with its A2A protocol and Gemini), OpenAI, and GitHub (with Copilot) are also building toward similar context-awareness mechanisms.

In this blog, we’ll explore:

- What MCP, A2A, and other agent context protocols are – and why they matter

- How similar ideas are being approached by each major player

- The risks of using agent context protocols

- How to best protect ourselves as we’re moving towards the future

What Does it Really Mean to Bring Memory to AI?

When we talk about bringing memory to AI, we’re not referring to long-term recall or a better chat history. We’re talking about a shift from isolated language models to embedded agents that operate with real context. Technologies like MCP and A2A make this possible by wiring models directly into the systems, tools, and environments we use every day.

Until recently, most language models operated in isolation – you gave them a prompt, they gave you a response. No memory, no awareness of what you were doing, no connection to anything outside the text. That’s changing fast. Now, we’re hooking models into real sources of context: shell commands, file systems, browser sessions, HTTP requests, and more. It means the model can understand what’s happening around it, react to live system state, and even influence other tools. That’s powerful – and potentially dangerous if the integration isn’t locked down properly.

These new interfaces introduce fresh attack surfaces. Prompt injection is no longer just about tricking a model into saying something strange; it’s now about triggering real actions or leaking sensitive data the model shouldn’t have had access to in the first place. When a model has read or write access to live systems, a single vulnerability can have real-world consequences.

A2A and MCP both aim to standardize how models access contextual data – but with that standardization comes a new level of exposure. As defenders, we need to start thinking about models the way we think about microservices: what data are they exposed to, who can talk to them, and what guardrails are in place? Can we audit what the model accessed? Do we have controls to limit scope or revoke access dynamically?

Bringing memory to AI is inevitable and valuable – and honestly, it’s where the most exciting use cases live. But the security story is just beginning. If we don’t get serious about defining boundaries and enforcing them now, we’ll be dealing with some very avoidable incidents later.

So what is MCP, really?

The Model Context Protocol (MCP) is a specification for exposing structured, real-world context (such as files, shell output, database state, or user activity) to AI models through a standardized interface built on JSON-RPC messages. Instead of manually embedding this information into prompts, MCP allows tools to expose it automatically, giving models a consistent way to understand and act within their environment.

This opens the door to all kinds of practical integrations. You could connect a model to a Gmail MCP server and let it reference specific emails when answering questions. You could hook it up to your local development environment using an MCP server that exposes open files, current tasks, or git info. There are MCP servers for practically everything you can think of. The official MCP server collection includes real working examples, from Docker integration and HTTP request inspection to filesystem exploration and SQLite queries. The point isn’t what the model can do on its own, but what it can do when connected to your actual environment in a controlled and secure way.

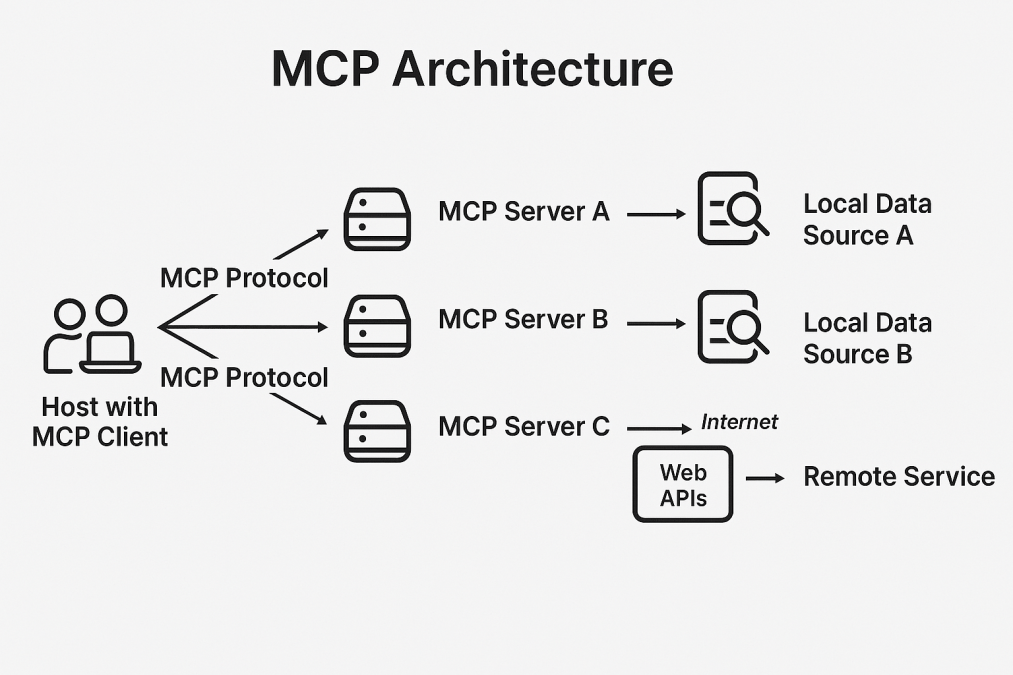

In the MCP architecture, hosts are LLM applications that initiate connections, clients are the connectors embedded within those hosts, and servers are the services that expose context or capabilities.

To understand how MCP works, let’s get a few things straight. The MCP client, embedded in the user-facing host application, initiates requests and receives results, while MCP servers function as intermediaries that process these requests, manage connections to various data sources, and return organized information to the client according to the standardized protocol.

As shown in the diagram, an MCP Client hosted on a user’s system can simultaneously communicate with multiple MCP Servers (A, B, and C), each serving a different purpose. Each server interacts with its respective data sources, providing access to local and remote information. This architecture enables clients to access diverse data sources and services through a unified protocol, creating a flexible and scalable network that balances local data processing with cloud-based resources.
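To make the client/server exchange more concrete, here is a minimal sketch of the kind of message a client might send to one of those servers. MCP messages are JSON-RPC 2.0; the `tools/call` method and its `name`/`arguments` parameters reflect our reading of the published specification, while the tool name `query_sqlite`, its arguments, and the helper names are hypothetical and chosen only for illustration.

```python
import json
from itertools import count

_request_ids = count(1)  # each JSON-RPC request carries its own id


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def parse_response(raw: str) -> dict:
    """Return the result of a JSON-RPC response, raising on a protocol error."""
    message = json.loads(raw)
    if "error" in message:
        raise RuntimeError(f"server error: {message['error']}")
    return message["result"]


# Hypothetical tool and arguments, for illustration only.
wire_message = build_tool_call("query_sqlite", {"sql": "SELECT 1"})
print(wire_message)
```

In a real deployment the SDK handles this framing for you; the point is simply that every capability a server exposes is reachable through a small, uniform message shape, which is also what makes it worth auditing.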

Google’s Agent to Agent (A2A) Protocol

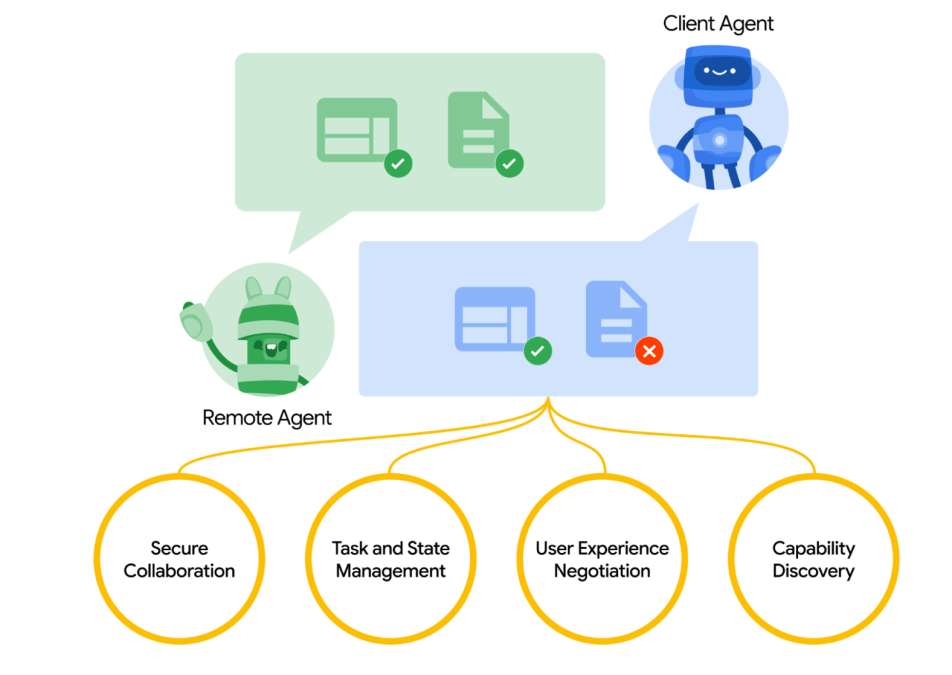

Google recently introduced the A2A protocol as a proposed open standard for secure, structured communication between AI agents across different platforms. Though it is still in its early stages, the goal is to let agents collaborate and communicate using familiar web technologies.

According to Google, A2A is an open protocol that complements Anthropic’s Model Context Protocol (MCP), which focuses on providing helpful tools and real-world context to agents. While MCP enables models to interface with their environment, A2A provides a framework for agents to securely collaborate and share information with each other – bridging isolated systems into a more connected, cooperative AI ecosystem.

A2A provides a framework for AI agents to talk to each other using structured messages over HTTPS, with JSON as the payload. It supports authentication, permission control, and payload signing to ensure trust and security between agents.

With the new A2A protocol, agents can request help from each other: like asking another agent to book a meeting, retrieve data, or run a workflow – without needing to know how that agent is implemented. Agents can pass along relevant context (like memory, user preferences, or goals) when collaborating, allowing downstream agents to act intelligently without having to start from scratch.
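As a rough illustration of one agent delegating a task to another with a verifiable payload, consider the sketch below. It is not the A2A wire format: the envelope fields, the `book_meeting` task, and the use of HMAC-SHA256 with a shared key are all assumptions made to keep the example self-contained. A real deployment would follow the A2A specification and its own authentication scheme.

```python
import hashlib
import hmac
import json


def sign_request(payload: dict, shared_key: bytes) -> dict:
    """Wrap a delegation request in an envelope carrying an HMAC-SHA256 tag."""
    # Canonical serialization so sender and receiver hash identical bytes.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(shared_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "signature": tag}


def verify_request(envelope: dict, shared_key: bytes) -> dict:
    """Return the payload if the tag matches; raise ValueError otherwise."""
    expected = hmac.new(
        shared_key, envelope["body"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, envelope["signature"]):
        raise ValueError("signature mismatch: request rejected")
    return json.loads(envelope["body"])


# Hypothetical task and context fields, with a throwaway demo key.
key = b"demo-key-do-not-use"
envelope = sign_request(
    {"task": "book_meeting", "context": {"timezone": "UTC"}}, key
)
print(verify_request(envelope, key)["task"])
```

The receiving agent never needs to know how the sender is implemented; it only needs to be able to check that the request came from a peer it trusts and was not altered in transit.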

Additionally, Gemini itself has a few features that resemble MCP/A2A concepts and add contextual awareness:

- Workspace integration: Gemini can connect to Google Workspace apps like Gmail, Docs, and Calendar and pull live data from them to answer questions, summarize content, or help with tasks.

- Extensions: Gemini can use “Extensions” (or plugins) to query other tools and services such as YouTube or Google Flights.

- App Actions: Android App Actions enable more direct and structured ways for users (and potentially AI models) to interact with specific functionality within Android applications, mimicking MCP/A2A function calling.

OpenAI’s Agent Context features

Though OpenAI has yet to fully integrate with MCP or introduce its own version of it, it has started rolling out several features that allow for richer context, such as:

- Custom GPTs with Memory: When you create a custom GPT (via ChatGPT), it can remember facts about the user across sessions. This includes preferences, names, writing styles, or goals. You can even view and delete what it remembers.

- ChatGPT Memory: Memory is also available in the regular ChatGPT app (Pro users). The assistant can remember that you like concise responses, or that you’re a programmer using Python. This makes conversations feel more personalized, without your having to repeat yourself every time.

- Tools, Function Calling & Retrievers (RAG): Like MCP, all these components can now work together. For example, the model can:

  - Call an API to check your calendar

  - Search a vector database for relevant knowledge (retriever)

  - Use that info and your memory to respond in a tailored way

GitHub’s Copilot

GitHub was one of the first to add MCP support to its platform. There are already GitHub MCP servers, giving users convenient access to and integration with the platform.

But what about Copilot? Well, you can definitely connect MCP servers to Copilot, just like you would any other MCP client. But there are some built-in capabilities Copilot has, resembling MCP behaviour, that should be noted:

- Local Context Awareness: Copilot primarily relies on your open files, file structure, and recent edits to inform its suggestions. It builds a “temporary context window” from code you’re actively working on.

- Project-wide Indexing: In Copilot for Business and Copilot Chat, it can index and reference other files in your repo (not just the current file), sort of like an implicit, short-term memory.

- Copilot Workspace (Coming): GitHub has previewed a concept called Copilot Workspace, which allows task planning and execution across a repo. This might evolve into more persistent, stateful agent behavior – MCP-style context sharing could be part of that.

Security Risks of Agent Context Sharing and Agent-to-Agent Communication in AI Systems

As with most new concepts in the AI space, the benefits are compelling, but they come with real risks if you’re not careful. Here are the main ones security teams should be aware of.

1. Data Leakage

The more context you give an LLM, the more useful it becomes, but this also increases our exposure if the model or its surrounding environment is ever compromised. The more data we hand over, the more data a determined attacker can get their hands on.

This is especially true in agent-based environments where context is dynamically shared between tools or services – attackers may not need to compromise the model itself, just any one of the services feeding it data.

2. Misconfigurations and over-exposure

As with any service deployed in the cloud, careless exposure to the internet can be dangerous. It’s tempting to spin up a local MCP server and bind it to 0.0.0.0 for convenience during testing, but doing so can unintentionally expose the server’s context endpoint (for example, a path like /v1/context) to the network. Such an endpoint is designed to serve rich, structured context to LLMs, and if left open, it could allow unauthorized clients or services to access sensitive data.

The risk is even greater in A2A-style environments, where agents are expected to discover and communicate with one another over open protocols. A single misconfigured endpoint in this setup can inadvertently expose internal context to unauthorized peers – or attackers posing as trusted agents. Without strict access controls and proper scoping, even a small configuration mistake can grant visibility into project directories, user metadata, or system internals – turning a simple oversight into a critical entry point.

3. Supply Chain Attack Risk

Many MCP-like use cases involve usage of plugins, extensions or external tools like IDEs, terminals, or browsers that write to or influence the context. If a malicious or compromised extension can write to your model’s context, it can poison the model’s understanding of your workspace – or worse, leak private information.

This becomes particularly risky when using pre-built plugins or community-contributed integrations with minimal audit trails – such as browser extensions or IDE helpers that sync context to MCP or A2A agents.

4. Data poisoning

Just like any other LLM’s data store, context retrieved from external sources can be manipulated – especially if it’s written by other systems, third-party APIs, or plugins. If the model relies on this information to make decisions or generate actions, poisoned or tampered data could lead to incorrect outputs, misbehavior, or unintended actions.

For example, if you are using MCP for reading and crafting emails, an attacker (or even a malicious insider) could intentionally send spam or deliberately misleading emails to throw off the LLM reading the messages. In an A2A setup, one malicious agent could poison a shared state or inject misleading context into another agent’s workspace, causing models to act on incorrect assumptions or generate harmful outputs without any direct prompt manipulation.

5. Cross Tool Contamination

In environments where an agent (LLM) is connected to multiple agents, servers or applications simultaneously (e.g., Gmail, Slack, GitHub), malicious behavior in one agent can compromise the integrity of the entire environment.

For example, if a single MCP server is breached, it can become a launchpad for attacking others by manipulating the model into interacting with additional servers on the attacker’s behalf. This risk is amplified in A2A’s collaborative model, where loosely defined or implicit trust boundaries between agents can turn one compromised component into a staging point for indirect attacks across the entire system. Without strict isolation and authorization controls between each server or agent, lateral movement becomes not just possible – but likely.

6. Prompt Injection

At the end of the day, giving our models access to external data we can’t fully control poses a significant risk. An attacker who knows a model is connected to a data source can hijack that source and inject hidden commands into the data it provides to the LLM (via MCP, plugins, peer agents, etc.).

A real-world example of this risk can be seen in the WhatsApp MCP exploitation, where an attacker injected malicious content into context fields, leading the model to execute unintended actions. This same risk applies across agent-to-agent protocols as well – if one peer can insert arbitrary context or manipulate prompts passed through shared memory, it can effectively hijack the behavior of another agent downstream.

7. Remote Code Execution (RCE)

Some of the protocols and implementations we introduced expose tools: callable functions the LLM can invoke based on context. For example, a model might be able to draft an email or trigger a deployment. If an attacker can compromise the model or manipulate the tool definitions, they may escalate to remote code execution by tricking the LLM into calling a function with malicious parameters. This is especially dangerous in environments where the model has high levels of autonomy.
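One way to reduce this risk is to check every model-proposed tool call against an explicit allowlist before anything is executed. The sketch below is a minimal illustration under our own assumptions: the tool names, the argument shapes, and the `check_tool_call` helper are hypothetical and not part of MCP or any SDK.

```python
from typing import Any, Callable

# Hypothetical allowlist: tool name -> validator for its arguments.
ALLOWED_TOOLS: dict[str, Callable[[dict[str, Any]], bool]] = {
    "draft_email": lambda args: set(args) == {"to", "subject", "body"}
    and all(isinstance(v, str) for v in args.values()),
    "get_build_status": lambda args: set(args) == {"build_id"}
    and isinstance(args["build_id"], int),
}


def check_tool_call(name: str, args: dict[str, Any]) -> None:
    """Raise PermissionError unless the call matches the allowlist exactly."""
    validator = ALLOWED_TOOLS.get(name)
    if validator is None:
        raise PermissionError(f"tool not allowed: {name!r}")
    if not validator(args):
        raise PermissionError(f"arguments rejected for {name!r}")


# A well-formed call passes silently; anything else is refused.
check_tool_call("get_build_status", {"build_id": 42})
```

Note that a high-impact tool such as a deployment trigger is simply absent from this list. Keeping such actions behind a human approval step, rather than directly callable by the model, limits what a manipulated model can reach.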

8. Poorly Designed Authentication Procedures

Initially, MCP lacked a standard for authentication. Later updates embedded OAuth 2.1 flows, such as token issuance and validation, directly into the MCP server. This design breaks the principle of separation between identity and resource logic, increases operational complexity, and broadens the protocol’s attack surface.

In agent-to-agent architectures, improperly scoped authentication flows can result in agents trusting the wrong peers, or accepting tokens from unauthorized sources – leading to data exposure, impersonation, or privilege escalation across the mesh.

Security Best Practices for Agent Context Protocols

So how can we use the impressive capabilities provided to us but still keep our environment safe and secure?

Here are a few best practices that could lower the risk of using agent context protocols:

1. Control network exposure

When using MCP servers or A2A-style solutions, deploy them with strict network access policies: make sure only necessary ports are open, and only trusted entities can access assets in the protocol’s environment. Avoid exposing sensitive endpoints to the public unless absolutely necessary, and in those cases, enforce strong authentication, TLS encryption, and zero trust network segmentation.
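A simple guard at startup can catch the most common mistake, binding to all interfaces without authentication. The following is a minimal sketch using only Python’s standard library; the function names and the `auth_enabled` flag are hypothetical and would map onto whatever configuration your server actually uses.

```python
import ipaddress


def is_loopback_only(bind_host: str) -> bool:
    """True if the bind address is reachable only from the local machine."""
    if bind_host == "localhost":
        return True
    try:
        return ipaddress.ip_address(bind_host).is_loopback
    except ValueError:
        # Unknown hostnames are treated as exposed, to fail closed.
        return False


def require_safe_bind(bind_host: str, auth_enabled: bool) -> None:
    """Refuse to start on a non-loopback address without authentication."""
    if not is_loopback_only(bind_host) and not auth_enabled:
        raise RuntimeError(
            f"refusing to bind {bind_host!r} without authentication"
        )


# Local testing on loopback is allowed even without authentication.
require_safe_bind("127.0.0.1", auth_enabled=False)
```

A check like this does not replace firewall rules or network segmentation, but it turns a silent misconfiguration into a loud startup failure.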

2. Enforce context validation and sanitization

Treat external context data as untrusted input. Ensure all context payloads are validated, stripped of unnecessary or unsafe content, and never written directly by untrusted or user-controlled processes. This helps prevent injection, information leakage, and model manipulation.
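As a rough sketch of what such a sanitization step could look like, the function below strips invisible characters, caps the size of each context item, and flags a couple of well-known injection phrases. The size limit and the pattern list are assumptions chosen for illustration; pattern matching of this kind will miss many attacks and should be treated as one signal among several, not as a complete defense.

```python
import re
import unicodedata

MAX_CHARS = 4000  # hypothetical per-item budget

# Hypothetical, deliberately small pattern list; it will miss many attacks.
SUSPICIOUS = re.compile(
    r"ignore (all |any )?(previous|prior) instructions|system prompt",
    re.IGNORECASE,
)


def sanitize_context(text: str) -> tuple[str, bool]:
    """Return (cleaned_text, flagged) for one piece of external context."""
    # Drop control and format characters (categories Cc/Cf), which include
    # zero-width characters sometimes used to hide text; keep newline and tab.
    cleaned = "".join(
        ch for ch in text
        if ch in "\n\t" or unicodedata.category(ch) not in ("Cc", "Cf")
    )
    cleaned = cleaned[:MAX_CHARS]
    flagged = bool(SUSPICIOUS.search(cleaned))
    return cleaned, flagged


cleaned, flagged = sanitize_context("Meeting notes\u200b for Tuesday")
print(cleaned, flagged)
```

Flagged items can be dropped, quarantined for review, or passed along with an explicit marker so the model and downstream tools treat them with less trust.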

3. Authentication

Always enforce strong token-based authentication. Ensure credentials are securely stored, rotated regularly, and scoped to the minimum necessary access. Design the system so that the MCP or A2A server verifies tokens issued by your existing IdP, and optionally use an API gateway to handle validation and securely pass identity to the server. In MCP servers, respond to unauthorized requests with a WWW-Authenticate header that guides clients to the correct authorization server, as proposed, for example, in MCP issue #205.
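The sketch below shows the general shape of such a check on the server side: the server does not issue tokens, it only validates them and answers with a `WWW-Authenticate` challenge when validation fails. The `verify` callback stands in for your IdP’s token validation library, and the claim names (`scope`, `exp`), the realm, and the scope string are assumptions; the `invalid_token` and `insufficient_scope` error codes follow the Bearer token conventions of RFC 6750.

```python
import time
from typing import Callable, Optional

# Hypothetical claims shape: {"scope": "context:read ...", "exp": <unix time>}
Verifier = Callable[[str], Optional[dict]]


def authorize(headers: dict, verify: Verifier, needed_scope: str,
              now: Optional[float] = None) -> tuple[int, dict]:
    """Return (status, response_headers) for a request to a context server."""
    challenge = {"WWW-Authenticate": 'Bearer realm="context-server"'}
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return 401, challenge
    claims = verify(token)  # delegate signature checks to the IdP's library
    current = time.time() if now is None else now
    if claims is None or claims.get("exp", 0) <= current:
        return 401, {"WWW-Authenticate": 'Bearer error="invalid_token"'}
    if needed_scope not in claims.get("scope", "").split():
        return 403, {"WWW-Authenticate": 'Bearer error="insufficient_scope"'}
    return 200, {}
```

Keeping issuance in the IdP and only verification in the server preserves the separation between identity and resource logic discussed earlier.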

4. Monitor and log access to context endpoints

Monitor and log all requests and traffic that flow through agent context protocols, whether agent-to-agent or agent-to-server, so you can trace who accessed which data and when, and detect possible anomalies.
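A structured audit record per access makes this kind of tracing much easier. Here is a minimal sketch using Python’s standard `logging` module; the logger name and the record fields (`caller`, `resource`, `action`, `allowed`) are our own assumptions rather than anything defined by MCP or A2A.

```python
import json
import logging
from datetime import datetime, timezone

audit_log = logging.getLogger("context.audit")


def audit_record(caller: str, resource: str, action: str, allowed: bool) -> str:
    """Build one JSON audit line describing a context access attempt."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "caller": caller,
        "resource": resource,
        "action": action,
        "allowed": allowed,
    }, sort_keys=True)


def log_access(caller: str, resource: str, action: str, allowed: bool) -> None:
    """Emit the record; denied attempts are logged at WARNING level."""
    level = logging.INFO if allowed else logging.WARNING
    audit_log.log(level, audit_record(caller, resource, action, allowed))


# Hypothetical caller and resource names.
log_access("agent-a", "repo/README.md", "read", allowed=False)
```

Because every line is valid JSON with the same fields, the records can be shipped to a SIEM and queried for patterns such as a single caller suddenly touching many unrelated resources.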

5. Apply least-privilege context sharing

Only include what’s strictly necessary in your context:

- Avoid sending full editor states, entire directories, or unrelated user metadata

- When using IDE integrations or automated agents, ensure they explicitly filter what they share

- Prefer fine-grained redaction and scoping
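The filtering described above can be sketched as an allowlist of fields plus a redaction pass over string values. The field names and the secret pattern below are hypothetical and intentionally simple; a production integration would use a proper secret scanner and a schema agreed with the consuming agent.

```python
import re

# Hypothetical allowlist of context fields an integration may share.
SHAREABLE_FIELDS = {"open_file", "language", "selection"}

# Hypothetical secret pattern: "key=value" style assignments for common names.
SECRET = re.compile(
    r"(?i)\b(api[_-]?key|token|password|secret)\b(\s*[:=]\s*)\S+"
)


def scope_context(context: dict) -> dict:
    """Keep only allowlisted fields and mask secret-looking values in strings."""
    scoped = {}
    for field, value in context.items():
        if field not in SHAREABLE_FIELDS:
            continue  # drop anything not explicitly allowed
        if isinstance(value, str):
            value = SECRET.sub(r"\1\2[REDACTED]", value)
        scoped[field] = value
    return scoped


print(scope_context({
    "open_file": "app.py",
    "selection": "API_KEY = abc123",
    "env": {"HOME": "/root"},  # not allowlisted, so it is dropped
}))
```

Defaulting to "drop unless allowed" means that new fields added by an editor plugin or agent are not shared until someone makes a deliberate decision to include them.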

6. Secure your supply chain

Make sure all components and dependencies with access to your model are trustworthy and up to date, and keep an eye out for newly disclosed vulnerabilities that affect them.
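One small, concrete step in that direction is to pin the digest of each plugin or server artifact you have reviewed and refuse to load anything that does not match. The sketch below assumes you maintain the pinned SHA-256 values yourself; the function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large artifacts do not load into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_pinned(path: Path, pinned_sha256: str) -> None:
    """Raise ValueError if the artifact does not match its pinned digest."""
    actual = sha256_of(path)
    if not hmac.compare_digest(actual, pinned_sha256.lower()):
        raise ValueError(f"digest mismatch for {path.name}")
```

Calling `verify_pinned` before launching a community-contributed MCP server means that a silently replaced or tampered binary fails the check instead of gaining access to your model’s context.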

The Road Ahead: Toward Agent-Oriented AI

The emergence of agent context protocol standards signals a significant shift:

- From Chat to Memory: Agents will no longer start from scratch every time you chat, but will retain memory of your usual needs and preferences, as ChatGPT’s memory feature already does.

- From Prompt to Protocol: App developers will move from brittle prompts to structured context schemas, allowing them to precisely match the LLM’s response to the user’s needs.

- Cross-platform integration: Users will be able to receive results based on multiple data sources at once, according to their preferences, including personal data that is not part of the model’s own knowledge.

- Centralized control: These developments lead us straight to assistant-like applications. MCP, A2A, and similar future protocols allow us to control multiple applications and perform tasks from a single platform.

We’re on the cusp of “LLM Operating Systems” – where every app has a memory, context, and toolchain that persists and evolves.

Final Thoughts

These agent context protocols are more than just protocols: they’re a blueprint for the future of intelligent systems. As Anthropic and Google lead with standardized context-sharing frameworks, other platforms are adapting in parallel with their own interpretations.

But as with all protocols, the devil is in the details. The moment you standardize a way to expose sensitive context, you also standardize a way to misuse it.

Security teams should get ahead of this. MCP, A2A, and friends are going to be everywhere: in IDEs, terminals, even browser extensions. The sooner we build a secure-by-default mindset around them, the better.

About Orca Security

We’re on a mission to provide the industry’s most comprehensive cloud security platform while adhering to what we believe in: frictionless security and contextual insights, so you can prioritize your most critical risks and operate in the cloud with confidence.

Unlike other solutions, Orca is agentless-first and fully deploys in minutes with 100% coverage, providing wide and deep visibility into risks across every layer of your cloud estate. This includes cloud configurations, workloads, and identities. Orca combines all this information in a Unified Data Model to effectively prioritize risks and recognize when seemingly unrelated issues can be combined to create dangerous attack paths. To learn more, see the Orca Platform in action.