Generative AI is fundamentally changing how we work, expanding human creativity and optimizing productivity across the search-summarize-synthesize workflow we use in our day-to-day jobs. Anthropic recently open-sourced the Model Context Protocol (MCP), a standard for AI assistants to connect and interact with other data sources. This innovation extends the relevance of GenAI to the data sources that matter most to operating your business.

Over the past 18 months, Orca Security has innovated to help organizations use GenAI securely, with capabilities like AI-SPM that show which AI models are in use at your organization, and to apply AI to improve security outcomes.

Building on that work, our team built an MCP Server to interact with Orca Platform data using Claude, Cursor, and other AI clients. This innovation gives security teams instant access to deep cloud telemetry from Orca without working directly in the Orca Platform, making it easier than ever to understand their cloud security risks and compliance gaps.

A quick introduction to GenAI, Anthropic, and Claude

Artificial intelligence (AI) is founded on the building blocks of machine learning, deep learning, and large language models (LLMs). Generative AI (GenAI) is a type of AI that can create new content. Someone can type in a question, otherwise known as a prompt, and the GenAI tool can return an answer in text, code, or images.

Anthropic has been at the forefront of AI innovation since 2021, launching their family of LLMs named Claude in March 2023. Claude is also the name of the GenAI chatbot that people can use to take advantage of the LLMs that power its advanced reasoning, vision analysis, code generation, and multilingual processing capabilities.

What is the Model Context Protocol (MCP)?

As GenAI skyrocketed in popularity, organizations started wondering how they could benefit from the promised efficiency gains by using their own private data as context for GenAI prompting. Aside from security and ethical concerns, there was another problem that stalled the broad adoption of GenAI with enterprise data sources—organizations had to share context in a bespoke manner per data source.

When we think of how an application communicates with another app, we think of APIs—a uniform way of requesting and responding with data. AI models didn’t have a standard way to interface with apps and their data, until now.

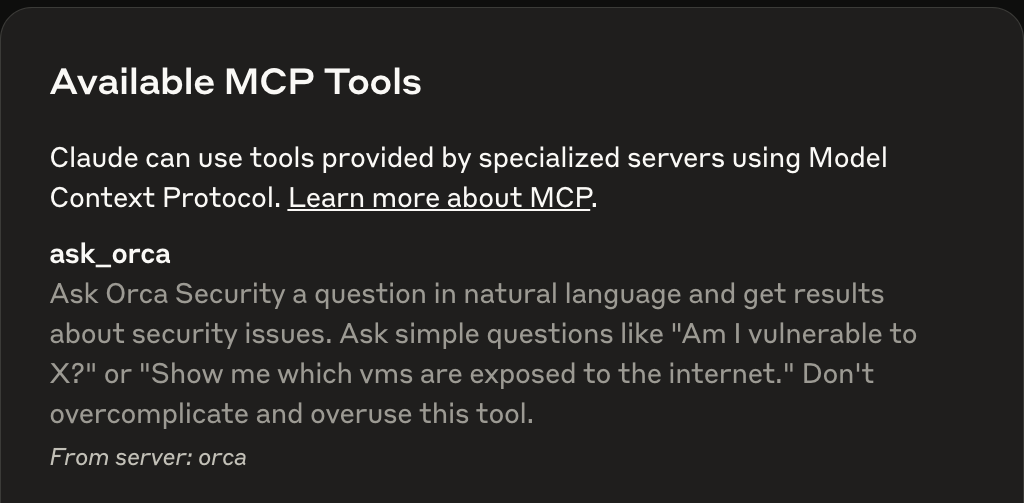

Model Context Protocol (MCP) is an open standard made available by Anthropic to simplify how AI assistants, such as their GenAI chatbot Claude, can gain context from other data sources. MCP servers give developers a way to expose data and services from their apps so people can use Claude and other MCP-compatible clients to ask questions, create summaries, and simply understand their data better.
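To make the idea of "exposing data and services" more concrete, here is a minimal sketch of the request/response pattern behind an MCP server. MCP messages use JSON-RPC 2.0, and clients discover and invoke tools through the `tools/list` and `tools/call` methods. Everything else in this example is an assumption for illustration: the `find_assets_by_package` tool, the `ASSETS` sample data, and the `handle` function are hypothetical, and a real server would normally be built on an MCP SDK rather than handled by hand like this.

```python
import json

# Hypothetical in-memory data standing in for an application's records.
ASSETS = [
    {"name": "web-1", "packages": ["tomcat"]},
    {"name": "db-1", "packages": ["postgres"]},
]

# Tool metadata in the shape returned by tools/list.
# The tool itself is a made-up example, not a real product API.
TOOLS = [{
    "name": "find_assets_by_package",
    "description": "List assets that have a given package installed.",
    "inputSchema": {
        "type": "object",
        "properties": {"package": {"type": "string"}},
        "required": ["package"],
    },
}]


def find_assets_by_package(package):
    """Return the names of assets that have the package installed."""
    return [a["name"] for a in ASSETS if package in a["packages"]]


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    body = {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}
    return json.dumps(body)


def handle(message):
    """Answer one JSON-RPC 2.0 request string with a response string."""
    request = json.loads(message)
    request_id = request.get("id")
    method = request["method"]
    params = request.get("params", {})

    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "find_assets_by_package":
            return _error(request_id, -32602, "Unknown tool")
        names = find_assets_by_package(**params.get("arguments", {}))
        # Tool results are returned as a list of content parts.
        result = {"content": [{"type": "text", "text": json.dumps(names)}]}
    else:
        return _error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})
```

An AI client first calls `tools/list` to learn which tools exist and what arguments they take, then calls `tools/call` when the model decides a tool would help answer the user's question.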

How does the Orca MCP Server work?

The Orca Pod created an Orca MCP Server so people can securely connect the data from their Orca environment with Claude. Once the MCP Server is connected, people can ask questions about their cloud environment, create summaries, and discover more stories buried in the plethora of cloud security and compliance data from the Orca Platform. This saves security and compliance teams from the need to learn the complicated query languages of cloud providers or juggle multiple interfaces of legacy solutions to find answers. The Orca MCP Server extends Orca’s AI-Driven Search capabilities, which enable teams to better understand their cloud by asking questions in plain language, with more than 50 languages supported.

Understanding your cloud risks is as simple as asking Claude a question

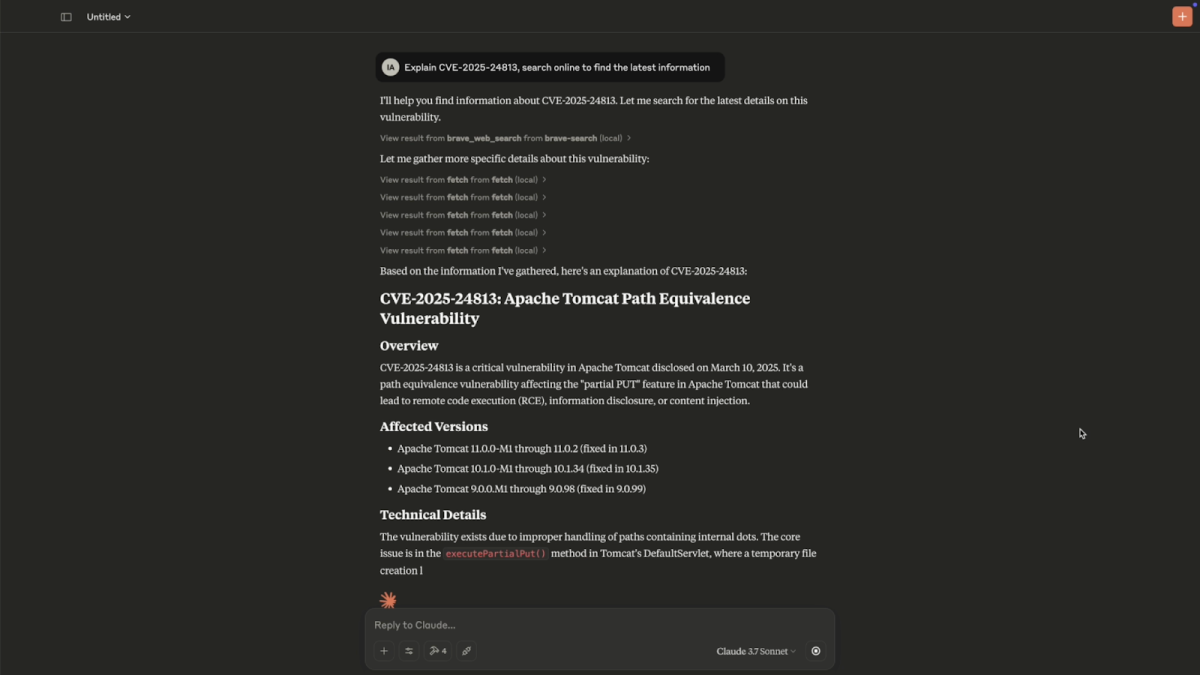

Let’s say a new CVE hits the news and you want to quickly understand what the vulnerability is and if your organization is impacted by it. By connecting to the Orca MCP Server, it becomes as simple as asking Claude a series of questions. In this example, we’ll use Claude to understand the impact of CVE-2025-24813, a recent vulnerability in Apache Tomcat, disclosed on March 10, 2025.

1 – What is CVE-2025-24813?

The first step is to understand what kind of vulnerability we’re dealing with, so we ask Claude to give us the latest information on this CVE.

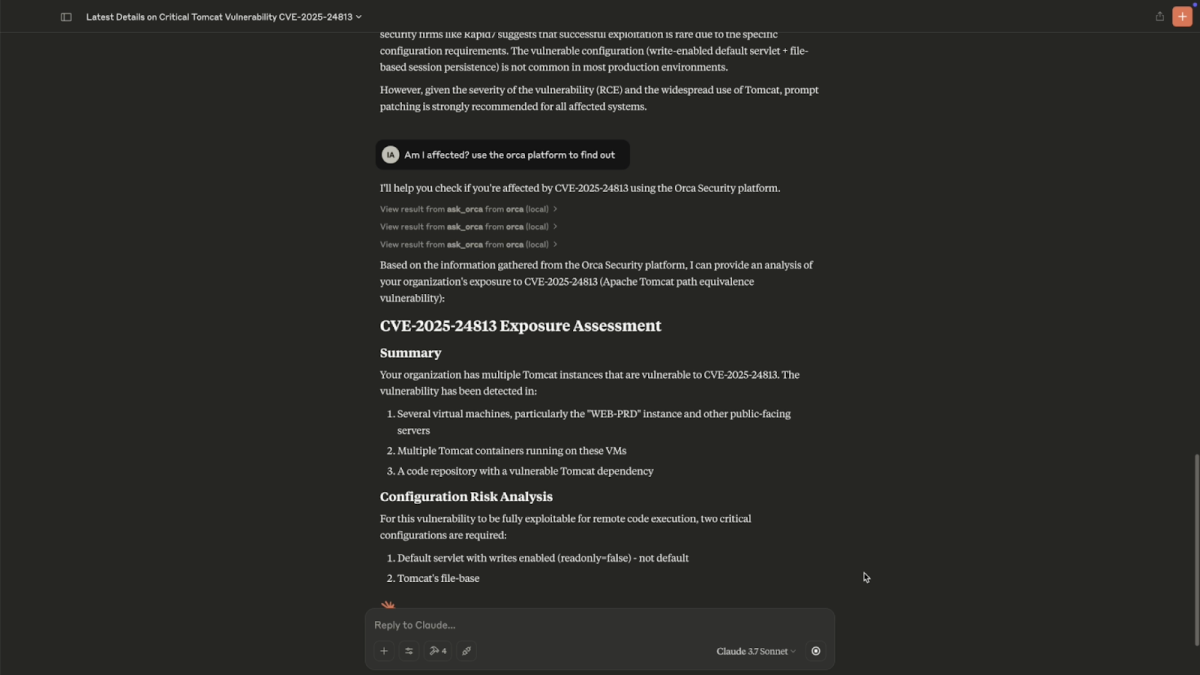

2 – Is my environment impacted by CVE-2025-24813?

To understand if our organization is at risk, we can ask Claude to access the Orca MCP Server that is connected to our environment to see how we are affected by this CVE.

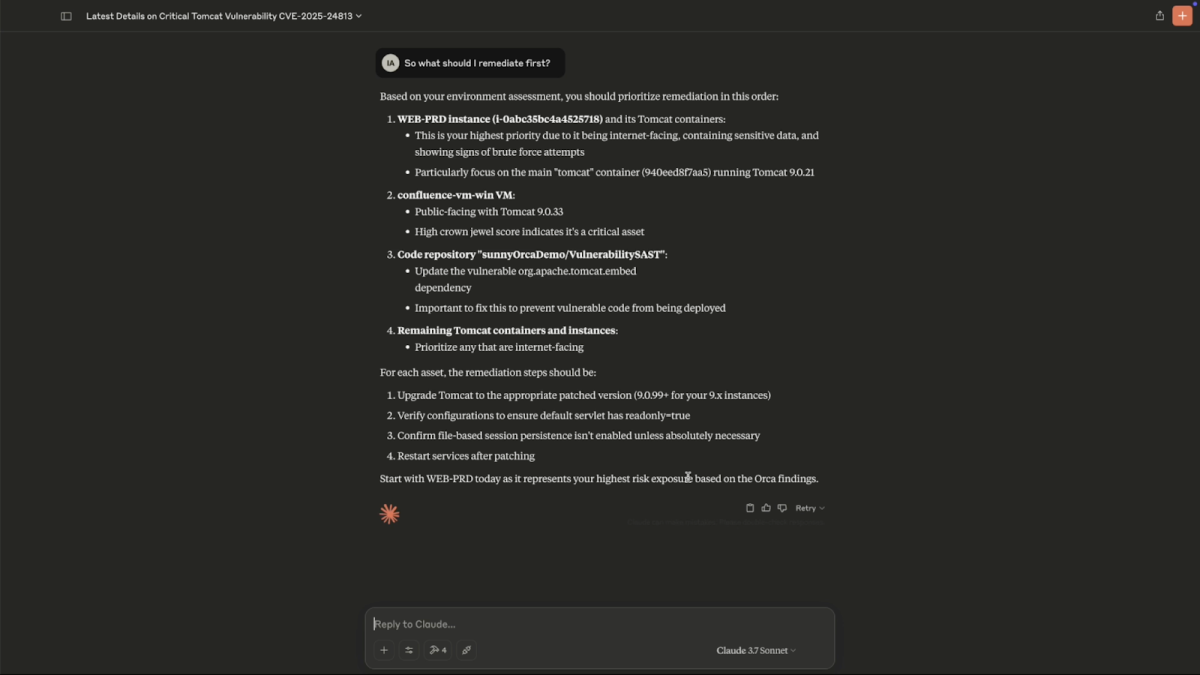
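Behind a question like this, the AI client sends a tool call to the connected MCP server on the user's behalf. The sketch below shows roughly what that exchange might look like at the protocol level. The JSON-RPC envelope and the `tools/call` method come from MCP. The tool name `search_vulnerable_assets` and its `cve_id` argument are hypothetical placeholders and are not the Orca MCP Server's actual interface.

```python
from itertools import count

# Each JSON-RPC request needs its own id so responses can be matched to it.
_request_ids = count(1)


def build_tool_call(tool_name, arguments):
    """Build the JSON-RPC 2.0 request an MCP client sends to invoke a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def text_from_result(response):
    """Join the text parts of a tools/call response, or raise on an error."""
    if "error" in response:
        raise RuntimeError(response["error"]["message"])
    parts = response["result"]["content"]
    return "\n".join(p["text"] for p in parts if p["type"] == "text")


# Hypothetical tool name and argument, for illustration only.
request = build_tool_call("search_vulnerable_assets",
                          {"cve_id": "CVE-2025-24813"})
```

The model then reads the text returned by the tool and summarizes it in plain language, which is why the user never has to write a query themselves.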

3 – How should I approach remediation?

Once we get a grasp on the risk to our organization from this CVE, we can prioritize remediation steps using the Orca findings.

Watch the full demo of the Orca MCP server in action below:

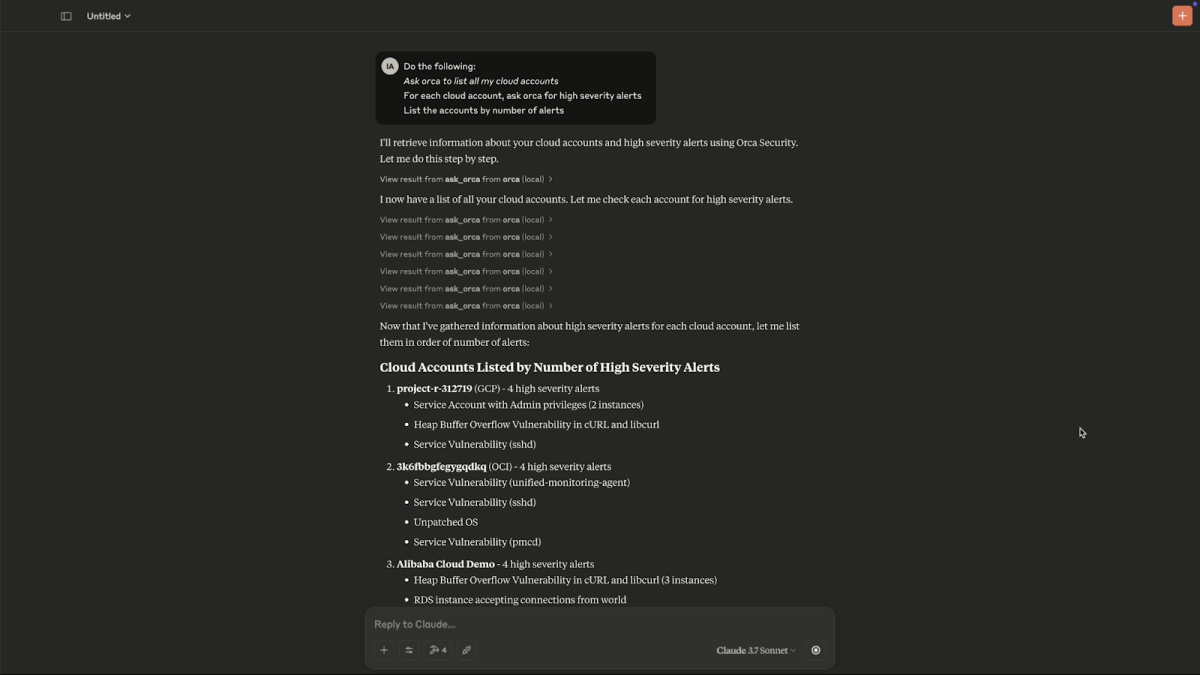

Bonus Actions with the Orca MCP server

Instead of a single query per prompt, we can chain a series of Orca queries in a prompt to really do some work for us! For example, if we want to understand which cloud accounts have the highest number of high severity alerts, we can prompt Claude as shown in the screenshot below.

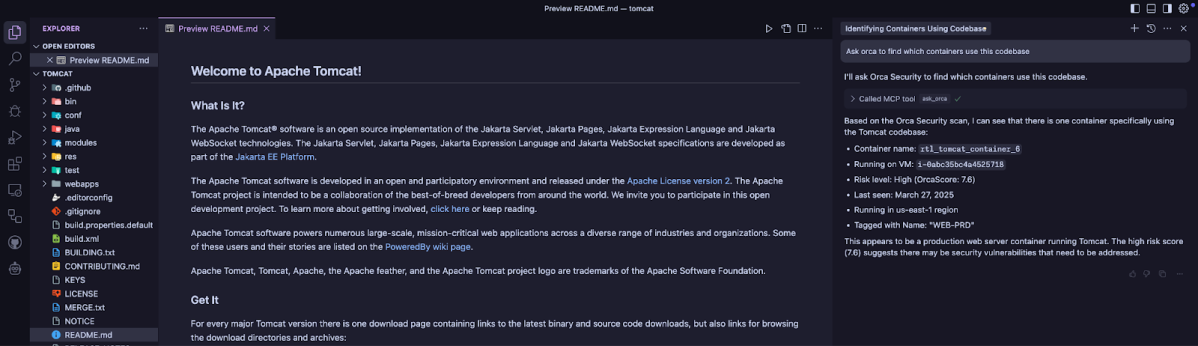
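The aggregation in a prompt like this amounts to filtering alerts by severity and counting them per account. As a rough sketch of that logic, assuming alerts arrive as simple records with `account` and `severity` fields (a hypothetical shape, not the actual Orca data model):

```python
from collections import Counter


def top_accounts_by_high_severity(alerts, limit=3):
    """Rank cloud accounts by their number of high severity alerts."""
    counts = Counter(a["account"] for a in alerts if a["severity"] == "high")
    return counts.most_common(limit)


# Made-up sample data, for illustration only.
sample_alerts = [
    {"account": "prod-aws", "severity": "high"},
    {"account": "prod-aws", "severity": "high"},
    {"account": "prod-aws", "severity": "medium"},
    {"account": "dev-gcp", "severity": "high"},
    {"account": "staging-azure", "severity": "low"},
]

ranking = top_accounts_by_high_severity(sample_alerts)
```

With the MCP server connected, the assistant can run the underlying queries and do this kind of counting itself, so a single prompt replaces several manual steps.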

Or, if you’re more interested in integrating with your codebase, you could use Cursor and ask, “Search for containers that use this codebase.”

Want to use these capabilities or learn more?

If you’re an Orca customer and you’d like to try out the Orca MCP Server, reach out to your account team. If you want to create your own MCP server, take a look at Amazon’s community blog, and check out other MCP servers here.

About Orca

The Orca Cloud Security Platform is an open platform that identifies, prioritizes, and remediates security risks and compliance gaps across AWS, Azure, Google Cloud, Oracle Cloud, Alibaba Cloud, and Kubernetes. The Orca Platform leverages our patented SideScanning™ technology to provide complete coverage and comprehensive risk detection. To see how this platform can work for your organization, schedule a personalized 1:1 demo.