Executive Summary:

- The Orca Research Pod has discovered CVE-2025-48710 in kro (Kube Resource Orchestrator) where an attacker could introduce a malicious CustomResourceDefinition (CRD)

- kro is an alpha experimental open source project that allows you to define custom Kubernetes APIs (CRDs) using ResourceGraphDefinition resources

- Potential adversaries can maliciously modify custom resources managed by kro, as well as introduce custom resources that affect kro’s reconciliation process

- Both lead to a confused deputy vulnerability, where kro is unknowingly coerced into deploying malicious applications that are controlled by attackers

- In our example, we’ve taken advantage of these findings to replace a default image with our own reverse-shell and achieve remote code execution on a container in the cluster

- The bugs are patched, and no user intervention is required. For more information, see: https://github.com/kro-run/kro/release

What is kro?

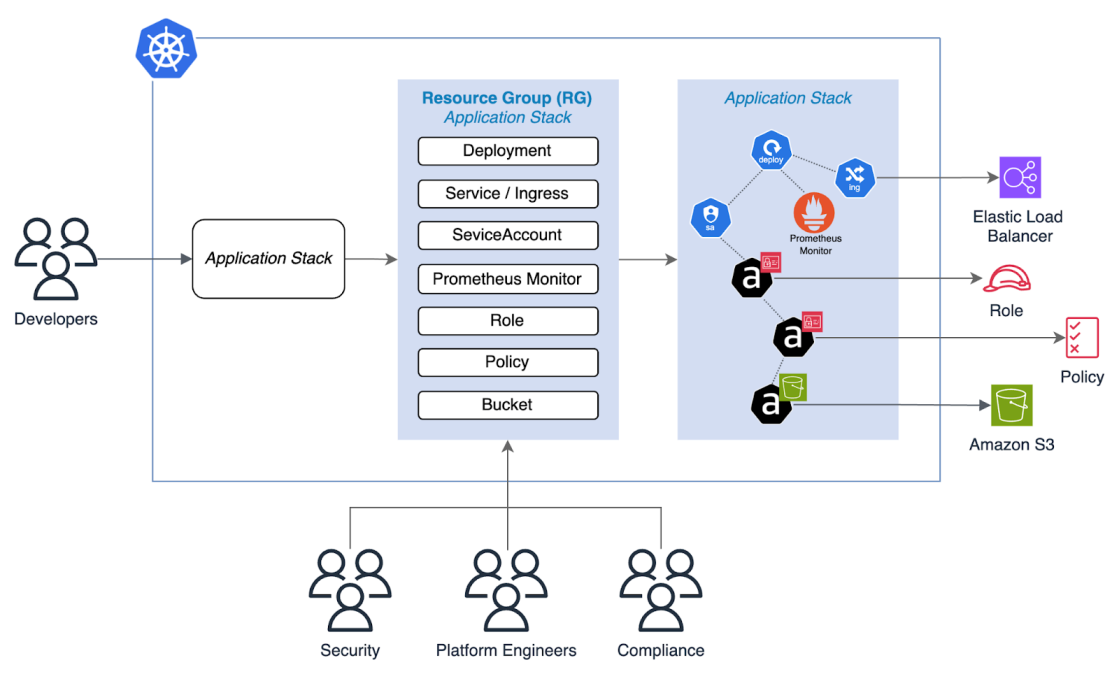

Kube Resource Orchestrator (kro, pronounced “crow”) is an alpha experimental open-source project maintained by AWS, Google Cloud and Azure. Kro helps users create application stacks through dynamic construction of Custom Resources. It introduces a native way to manage any group of Kubernetes resources as a single unit, which may encourage Kubernetes practitioners to use it over Helm (as written by Wilson Spearman from Parity).

Once installed, kro defines a Custom Resource named ResourceGraphDefinition which serves as an abstraction layer for other Kubernetes APIs. From the docs: “ResourceGraphDefinitions are the fundamental building blocks in kro. They provide a way to define, organize, and manage sets of related Kubernetes resources as a single, reusable unit.” In other words, creating ResourceGraphDefinitions (RGDs) results in new CustomResourceDefinitions (CRDs) that expose APIs for deploying multiple resources together.

Creating a set of resources with kro

A ResourceGraphDefinition acts as the blueprint of the application stack and determines how the derived custom API is defined. Specifically, it decides the API’s schema and the resources that will be created.

A simple RGD example of an nginx application will look like the following:

    apiVersion: kro.run/v1alpha1
    kind: ResourceGraphDefinition
    metadata:
      name: my-nginx-app-rgd
    spec:
      schema:
        apiVersion: v1alpha1
        kind: MyNginxApp
        spec:
          name: string | default="my-nginx-app"
          image: string | default="nginx"
      resources:
        - id: deployment
          template:
            apiVersion: apps/v1
            kind: Deployment
            metadata:
              name: ${schema.spec.name}
            spec:
              replicas: 3
              selector:
                matchLabels:
                  app: ${schema.spec.name}
              template:
                metadata:
                  labels:
                    app: ${schema.spec.name}
                spec:
                  containers:
                    - name: ${schema.spec.name}
                      image: ${schema.spec.image}
                      ports:
                        - containerPort: 80
        - id: service
          template:
            apiVersion: v1
            kind: Service
            metadata:
              name: ${schema.spec.name}-service
            spec:
              type: LoadBalancer
              selector: ${deployment.spec.selector.matchLabels}
              ports:
                - protocol: TCP
                  port: 80
                  targetPort: 80

Once applied, it exposes a new Kubernetes API named MyNginxApp, which can then be used to create a three-replica nginx deployment exposed by a LoadBalancer on port 80, via an instance definition like the following:

    apiVersion: kro.run/v1alpha1
    kind: MyNginxApp
    metadata:
      name: my-nginx-app-instance
    spec:
      name: best-nginx-app

The Confused Deputy Scenario

“A confused deputy scenario is a security issue where an entity that doesn’t have permission to perform an action can coerce a more-privileged entity to perform the action.”
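To make the definition concrete, here is a minimal Python sketch of the pattern. It is a toy model and not kro’s code: the `Deputy` class, the permission strings and the image name are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Deputy:
    """A privileged service that acts on requests from other principals."""
    permissions: set = field(default_factory=lambda: {"deploy"})
    deployed: list = field(default_factory=list)

    def deploy_unchecked(self, requester_permissions: set, image: str) -> None:
        # Confused deputy: only the deputy's own permission is consulted,
        # so the requester's lack of "deploy" is never noticed.
        if "deploy" in self.permissions:
            self.deployed.append(image)

    def deploy_checked(self, requester_permissions: set, image: str) -> None:
        # Mitigation: also require the requester to hold the permission.
        if "deploy" not in requester_permissions:
            raise PermissionError("requester may not deploy")
        self.deploy_unchecked(requester_permissions, image)


deputy = Deputy()
low_privileged = {"edit-blueprint"}  # hypothetical permission set, no "deploy"

deputy.deploy_unchecked(low_privileged, "attacker/reverse-shell")
print(deputy.deployed)  # ['attacker/reverse-shell']
```

The unchecked path succeeds even though the requester could not have deployed anything directly, which is the essence of the scenario; the checked variant shows one common mitigation, verifying the caller’s own authority before acting.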

Kubernetes CRDs are powered by controllers. The controller is what handles reconciliation by watching for CRDs and applying their blueprint. This is how resources like deployments and services are seemingly magically created in the process of applying a CRD.

When we applied the RGD (which requires specific RBAC permissions), kro’s controller, running in the background, created a new CRD named mynginxapps.kro.run according to the RGD’s blueprint. Since every CRD is powered by a controller, kro also defines a new in-memory microcontroller that will handle reconciliation of future mynginxapps.kro.run instances. This is why we saw the required deployment and service resources being created when we applied a MyNginxApp instance.
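The flow above can be sketched as a small in-memory model. This is an illustration under stated assumptions rather than kro’s implementation: `KroModel` and its methods are hypothetical, the CRD name is derived by naive pluralisation (enough to reproduce mynginxapps.kro.run), and created resources are reduced to (kind, name) pairs.

```python
class KroModel:
    """Toy model of the RGD -> CRD -> microcontroller flow (not kro's code)."""

    def __init__(self):
        self.crds = {}              # CRD name -> blueprint
        self.microcontrollers = {}  # kind -> name of the CRD it reconciles
        self.cluster = []           # resources "created" in the cluster

    def apply_rgd(self, group, kind, resources):
        # Assumption: naive pluralisation, sufficient for MyNginxApp.
        crd_name = f"{kind.lower()}s.{group}"
        self.crds[crd_name] = {"kind": kind, "resources": resources}
        # One in-memory microcontroller is registered per generated kind.
        self.microcontrollers[kind] = crd_name
        return crd_name

    def apply_instance(self, kind, name):
        # The microcontroller reconciles the instance from the CRD blueprint.
        blueprint = self.crds[self.microcontrollers[kind]]
        for resource_kind in blueprint["resources"]:
            self.cluster.append((resource_kind, name))


kro = KroModel()
crd = kro.apply_rgd("kro.run", "MyNginxApp", ["Deployment", "Service"])
kro.apply_instance("MyNginxApp", "best-nginx-app")
print(crd)          # mynginxapps.kro.run
print(kro.cluster)  # [('Deployment', 'best-nginx-app'), ('Service', 'best-nginx-app')]
```

Note that in this model the microcontroller reads whatever blueprint is currently stored for the CRD, which is the trust relationship the rest of the article examines.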

Modifying the CRD

Onboarding kro comes with great power, and as Uncle Ben from Spider-Man taught us, with great power comes great responsibility. Kro’s default installation permission is cluster-admin. While this allows users to create RGDs, it is equivalent to giving users the keys to the entire kingdom, which is why granting explicit RBAC permissions is best practice. Although this can be configured differently, many kro use cases require highly privileged access.

That said, administrators should be careful about granting users permission to create RGD resources. But what about other CRDs?

As it turns out, confusing kro’s microcontroller can be as simple as directly modifying its watched CRD, without touching the original RGD resource at all. This is possible because kro doesn’t actively watch for CRD modifications, and auto-scheduled reconciliation is only triggered by the resync period, which is every 10 hours by default.
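A rough way to reason about the resulting exposure is to compute how long a tampered CRD stays in effect before the next scheduled resync. The sketch below is a simplification with loudly stated assumptions: resyncs are taken to fire at exact multiples of the period, a resync is assumed to restore the CRD from the RGD, and the function names and image strings are hypothetical.

```python
RESYNC_PERIOD_HOURS = 10  # default resync period mentioned above


def exposure_window(tamper_hour, resync_period=RESYNC_PERIOD_HOURS):
    """Hours between a direct CRD edit and the next scheduled resync."""
    # Assumption: resyncs fire at multiples of resync_period.
    next_resync = (tamper_hour // resync_period + 1) * resync_period
    return next_resync - tamper_hour


def image_in_effect(hour, tamper_hour, resync_period=RESYNC_PERIOD_HOURS):
    """Image the blueprint points to at a given hour in this toy model."""
    # Assumption: the resync restores the original blueprint from the RGD.
    restored_at = tamper_hour + exposure_window(tamper_hour, resync_period)
    tampered = tamper_hour <= hour < restored_at
    return "attacker/reverse-shell" if tampered else "nginx"


print(exposure_window(1))     # 9
print(image_in_effect(5, 1))  # attacker/reverse-shell
print(image_in_effect(10, 1)) # nginx
```

Under these assumptions an edit made shortly after a resync remains live for almost the full period, which is ample time to have a malicious workload deployed.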

This opens an easy attack surface to confuse the dynamic controller, and maliciously update the blueprint. In our example, we’ve replaced the default image with our own reverse-shell and achieved remote code execution on a container in the cluster. Following our report, kro’s maintainers immediately opened a new related issue, and started working on a fix.

Note: kro considers the ability to mutate a CRD a cluster-admin permission, and not a vulnerability in kro. While kro makes every effort to perform the correct behavior based on the data available to it, the ability to manipulate the data store underneath kro is the responsibility of the cluster administrator. kro is in active development and not intended for production use.

Turtle racing the informer

Kro’s controller uses dynamic informers to watch for real-time resource changes. Informers use the watch verb to subscribe to updates from the API server on a resource, in this case a custom resource definition, which triggers the reconciliation process.

In kro, the main informer watches for RGD resource changes using the following GVK (Group, Version, Kind):

- Group: kro.run

- Version: v1alpha1

- Kind: ResourceGraphDefinition

The micro informers watch for cascading CRD resource changes using a different GVK. For example:

- Group: kro.run

- Version: v1alpha1

- Kind: MyNginxApp

When an RGD is deleted, its associated dynamic informer enters a watch error crash loop and doesn’t properly shut down. I originally thought this crash loop was an upstream issue, but it turned out to be a bug in kro. Additionally, the informers never shut down on watch errors; they only gradually increase their retry intervals. So while there’s actually no real race here, a potential attacker can use this bug to introduce a new malicious CRD that will be respected by the dangling micro informer.
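The behavior described above can be modeled in a few lines of Python. This is a sketch of the idea only, not kro’s or client-go’s code: `DanglingInformer`, the dictionary standing in for the API server, and the backoff parameters (base, factor, cap) are all hypothetical values chosen for illustration.

```python
def backoff_delays(base=1.0, factor=2.0, cap=30.0, attempts=6):
    """Retry delays that grow after each failed watch, up to a cap."""
    delay = base
    delays = []
    for _ in range(attempts):
        delays.append(delay)
        delay = min(delay * factor, cap)
    return delays


class DanglingInformer:
    """Toy informer that keeps retrying instead of shutting down."""

    def __init__(self, gvk):
        self.gvk = gvk
        self.stopped = False  # the bug being modeled: nothing ever sets this
        self.seen = []

    def retry_watch(self, api_server):
        # api_server maps a GVK to the objects currently served for it.
        if self.stopped or self.gvk not in api_server:
            return False  # watch error; the caller would back off and retry
        self.seen.extend(api_server[self.gvk])
        return True


gvk = ("kro.run", "v1alpha1", "MyNginxApp")
informer = DanglingInformer(gvk)

api_server = {}                           # RGD deleted, so the CRD is gone
print(informer.retry_watch(api_server))   # False

api_server[gvk] = ["attacker-instance"]   # a CRD with the same GVK reappears
print(informer.retry_watch(api_server))   # True
print(backoff_delays())                   # [1.0, 2.0, 4.0, 8.0, 16.0, 30.0]
```

Because the informer is keyed only by GVK and never stops, any later CRD that matches the same Group, Version and Kind is picked up on the next retry, regardless of who created it.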

What’s really interesting about this issue is the fact that a fix was already pushed a month before I started my research on kro, but it wasn’t part of the latest version release. Only a few days after I sent the report to the maintainer, a new version was released with the fix. While this vector is not trivial to exploit, it highlights a broader concern around the practice of shipping timely, dedicated hotfix releases in open-source development.

Final Words

To carry out these confused deputy attacks, the attacker must have cluster-wide permissions over CRDs and the Kubernetes APIs. Kubernetes administrators can grant `create` permissions over CRDs as they see fit, and such grants can be overlooked because, at first glance, they don’t appear as serious as access to applications or secrets.
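One way to spot such grants is to scan role definitions for rules that allow writing CustomResourceDefinitions. The following is a minimal sketch, assuming the roles are available as plain dictionaries shaped like Kubernetes ClusterRole objects (for example, the `items` of `kubectl get clusterroles -o json`). The helper names `grants_crd_write` and `risky_roles` and the sample roles are hypothetical.

```python
CRD_GROUP = "apiextensions.k8s.io"
CRD_RESOURCE = "customresourcedefinitions"
WRITE_VERBS = {"create", "update", "patch", "delete", "*"}


def grants_crd_write(rule):
    """True if one RBAC rule allows writing CustomResourceDefinitions."""
    groups = rule.get("apiGroups", [])
    resources = rule.get("resources", [])
    verbs = rule.get("verbs", [])
    return (
        (CRD_GROUP in groups or "*" in groups)
        and (CRD_RESOURCE in resources or "*" in resources)
        and bool(WRITE_VERBS & set(verbs))
    )


def risky_roles(roles):
    """Names of roles with at least one rule that can write CRDs."""
    return [
        role["metadata"]["name"]
        for role in roles
        if any(grants_crd_write(rule) for rule in role.get("rules", []))
    ]


# Hypothetical sample data for illustration.
roles = [
    {"metadata": {"name": "crd-editor"},
     "rules": [{"apiGroups": [CRD_GROUP], "resources": [CRD_RESOURCE],
                "verbs": ["create", "patch"]}]},
    {"metadata": {"name": "pod-reader"},
     "rules": [{"apiGroups": [""], "resources": ["pods"],
                "verbs": ["get", "list"]}]},
]
print(risky_roles(roles))  # ['crd-editor']
```

The check treats wildcards as matches, since a rule with `*` for groups, resources or verbs covers CRD writes as well. It does not resolve role bindings, so it shows which roles are risky, not who holds them.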

I would like to thank kro’s maintainer Amine for his help fixing these issues responsibly. Kro is currently an alpha experimental project, and there’s active work on maturing the project at both architectural and implementation levels. For anyone interested in maturing this project, please contribute to the GitHub repo.

How can Orca help?

There are two ways customers can use Orca to identify these risks in their own environment. Orca Sensor is our lightweight eBPF-based sensor that provides runtime visibility and protection natively integrated with the Orca Platform. Sensor can detect malicious activity related to this attack vector, like reverse shell execution shown in the video below. Additionally, Orca customers can analyze the permissions of the role associated with kro’s installation for visibility and mitigation.
