Life at Orca Security revolves around the Pod – the people who have joined Orca’s mission to make the cloud a safer place for all. The ‘Pod Spotlight’ is a Q&A series dedicated to showcasing the amazing talent at Orca, along with their personal stories.

This month, we’re excited to spotlight Ofir Yakobi, a cloud security researcher at Orca. Together with Shir Sadon, she created AI Goat, an innovative open-source project that helps security professionals learn about machine learning vulnerabilities through hands-on experience.

Can you tell us about yourself and your background?

I’m Ofir Yakobi, a cloud security researcher at Orca Security. I started as an IT engineer, became a security analyst, then a security researcher focusing on malware and on-prem solutions before joining Orca.

What do you enjoy about your research role?

I really enjoy working with talented colleagues who I can learn from and who help each other out. We also get to do fascinating research. For instance, I worked on developing a PoC exploit around typosquatting in GitHub Actions, which showed just how easily attackers can take advantage of a simple typo. Following our discovery of the Sys:All Loophole in Google Kubernetes Engine (GKE), I scanned publicly available GKE clusters and discovered just how many production clusters were vulnerable, including one belonging to a NASDAQ-listed company.

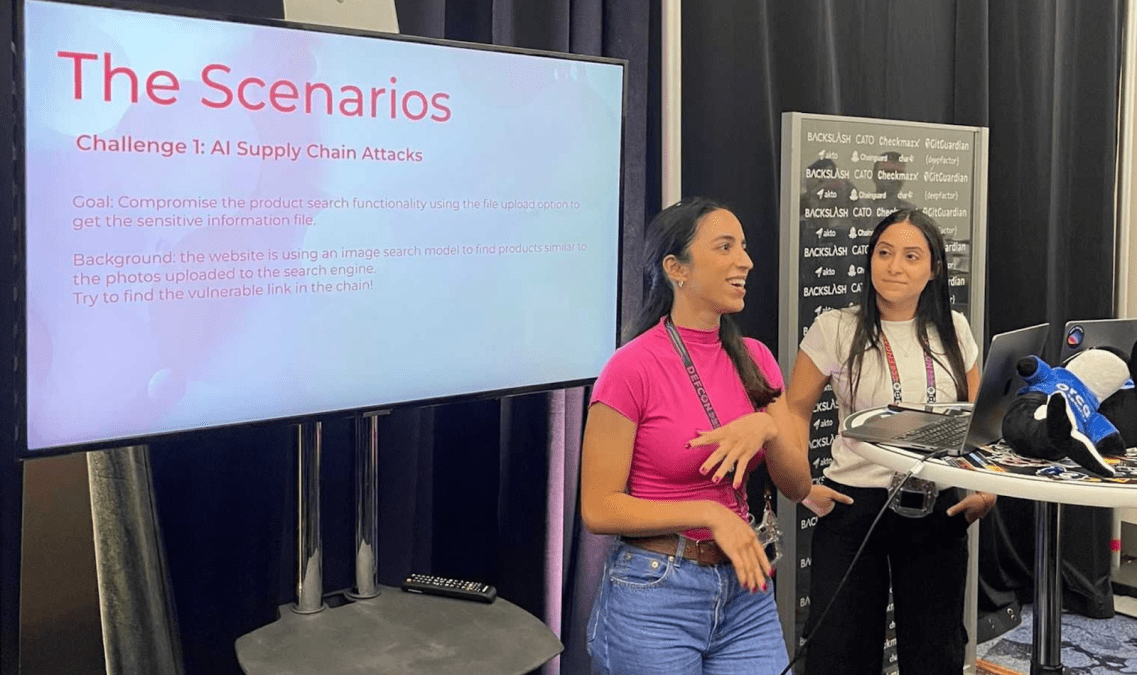

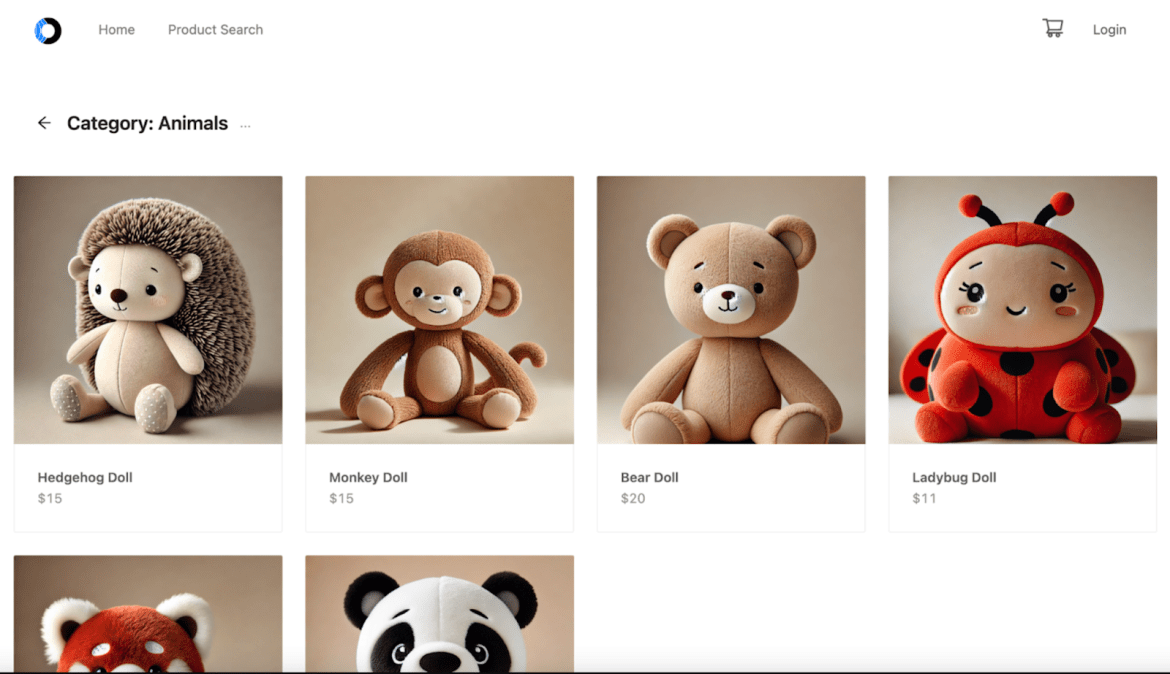

This year has been particularly exciting, as I’ve had the opportunity to present our work at major security conferences, including BlackHat, DefCon, and OWASP 2024. At these events, Shir and I demonstrated AI Goat through an interactive vulnerable toy store simulation. The demo let security professionals explore various AI security risks, from supply chain attacks to data poisoning scenarios. The security community’s response was fantastic, with many expressing excitement about finally having a dedicated platform for learning and testing AI security concepts.

What is AI Goat and how does it work?

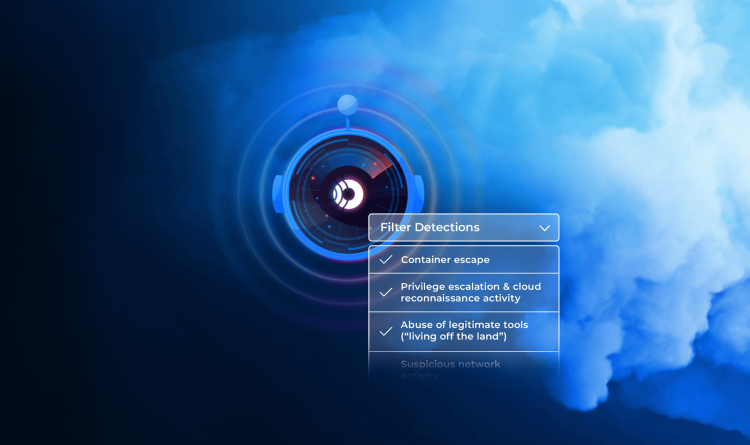

AI Goat is our open-source project that provides infrastructure for learning about machine learning vulnerabilities. The environment is built with deliberately included vulnerabilities and misconfigurations, and users practice exploiting them in hands-on scenarios that simulate real-world conditions.

What led to the creation of AI Goat?

AI Goat came about because, when I was trying to learn about AI and machine learning security, I found there wasn’t much research and there weren’t many learning resources available. While there are “goat” tools for web security, there was nothing similar for AI security. I wanted to both learn myself and provide infrastructure for others to learn.

What do you enjoy about working at Orca Security?

I enjoy two main aspects: working with talented colleagues I can learn from, and the interesting nature of the research work. We also contribute to product research and collaborate with developers to improve the product.

To learn more about Ofir’s work, follow her on LinkedIn and check out Ofir’s Orca blogs.