According to Gartner, worldwide spending on generative AI is set to reach $644 billion (USD) in 2025, a nearly 77% year-over-year increase. That level of investment underscores the growing importance of AI to modern business strategy. Yet capturing real value from AI requires more than enthusiasm and budget—it demands a clear understanding of the underlying AI models driving outcomes.

In this post, we reveal the 10 most popular AI models of 2025 based on analysis of billions of cloud assets by the Orca Research Pod. This update refreshes the ranking covered in our Top 10 AI Models of 2024 blog, highlighting how model usage patterns have evolved as organizations scale their AI initiatives.

AI usage in the cloud continues to surge

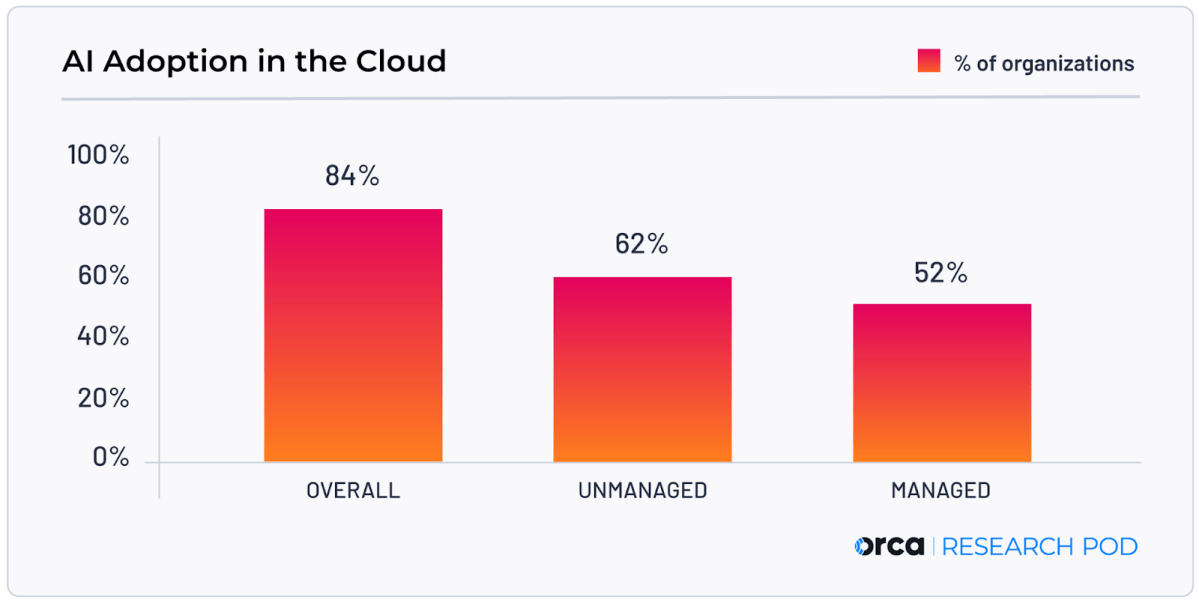

The Orca Research Pod found that AI model usage in cloud environments jumped from 56% of organizations in 2024 to 84% in 2025. That’s a dramatic rise in adoption across industries and cloud estates.

Another notable trend in this year’s rankings is the prevalence of OpenAI models, which dominate the list of the most popular AI models in the cloud. A key explanation is the significant adoption of Azure OpenAI, Microsoft’s managed AI service that provides scalable, enterprise-grade access to OpenAI’s foundation models. According to the 2025 State of Cloud Security Report, 30% of organizations use Azure OpenAI, making it one of the most widely adopted services in cloud environments.

Additionally, 27% of organizations use Azure Machine Learning (Azure ML) Workspace, which supports custom model training and orchestration, including workflows that often integrate with OpenAI models. The significant adoption of both services helps explain why OpenAI offerings are so prominent across enterprise cloud environments.

Readers of last year’s analysis may notice a sharp drop in the adoption rates for individual models in this year’s list. While this may appear to suggest AI usage is falling, it actually reflects an increase in both the number of organizations using AI and the number of AI models available to them. Rather than adoption concentrated in a few models, we see usage distributed more widely across the available options.

What are AI models?

An AI model is a computer program trained to perform a specific task (or set of tasks) by learning patterns from data. Different models excel at different things: language, vision, speech, coding, retrieval, multimodal reasoning, and more. Some models are general-purpose and can handle a wide range of prompts, while others are optimized for speed, cost, embeddings, or domain-specific performance.

AI models typically work alongside two related building blocks:

- AI services: Cloud-specific capabilities offered by cloud providers that let teams deploy, fine-tune, or consume AI functionality at scale.

- AI packages: Frameworks, libraries, or accelerators that help train, customize, optimize, or operationalize models.

Together, these components power the AI-driven applications showing up across today’s cloud-native stacks.

The most used AI models in 2025

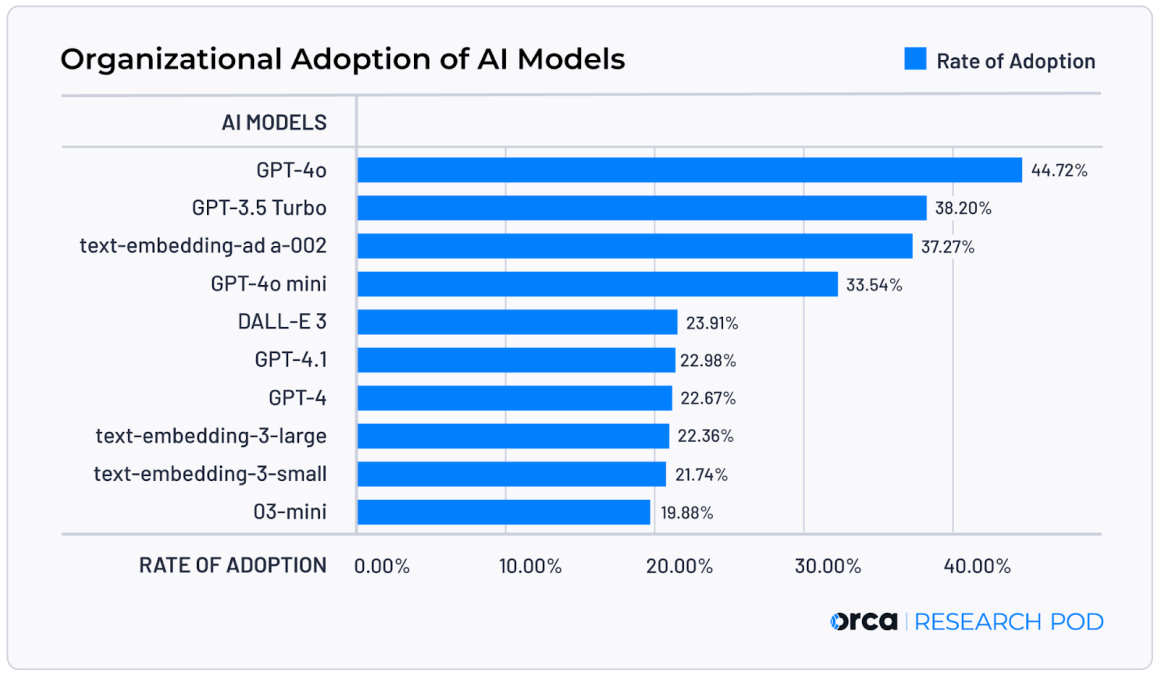

Below are the 10 most widely adopted AI models observed in cloud environments, based on the Orca Research Pod’s analysis. Percentages represent the share of organizations with a specific model deployed in their cloud estate out of all organizations using at least one model in the cloud.
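To make the percentage definition concrete, here is a minimal sketch of how such a share could be computed. The `adoption_share` function, the `inventory` data, and the organization names are hypothetical illustrations, not Orca's methodology or data.

```python
from collections import Counter


def adoption_share(org_models: dict[str, set[str]]) -> dict[str, float]:
    """Return, per model, the percent of AI-using organizations that deploy it."""
    # Denominator: only organizations with at least one model deployed.
    ai_orgs = {org: models for org, models in org_models.items() if models}
    if not ai_orgs:
        return {}
    # Count each model once per organization that deploys it.
    counts = Counter(model for models in ai_orgs.values() for model in models)
    return {model: round(100 * n / len(ai_orgs), 2) for model, n in counts.items()}


# Hypothetical inventory for illustration only.
inventory = {
    "org-a": {"gpt-4o", "text-embedding-ada-002"},
    "org-b": {"gpt-4o"},
    "org-c": {"gpt-3.5-turbo"},
    "org-d": set(),  # no AI models, so excluded from the denominator
}

shares = adoption_share(inventory)
for model, pct in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{model}: {pct}%")
```

Because one organization can deploy several models, shares computed this way are not expected to sum to 100%.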

#1. GPT-4o (44.72%)

OpenAI’s flagship GPT-4o (“omni”) leads the list for 2025, deployed by nearly 45% of organizations that run AI models in the cloud. GPT-4o is a high-intelligence, multimodal model that can reason across text, images, and audio, supporting real-time voice interaction, complex analysis, and rich generative experiences.

Where it’s used: Conversational interfaces, knowledge assistants, multilingual Q&A, cloud operations copilots, and cloud security workflows that benefit from natural-language interaction.

#2. GPT-3.5 Turbo (38.20%)

Still a workhorse in production, GPT-3.5 Turbo remains popular for teams balancing speed, scale, and cost efficiency. Originally introduced in 2022, it delivers strong performance for high-volume text-generation and transformation tasks, including summaries, routing, translation, templated responses, lightweight chat, and structured output generation.

Where it’s used: Ticket triage, documentation summarization, internal chatbots, automated knowledgebase enrichment, and other everyday language operations.

#3. text-embedding-ada-002 (37.27%)

Despite being a specialized model, text-embedding-ada-002 ranks third in our list, offering proof that embeddings power a huge share of behind-the-scenes AI intelligence. This model converts text into numeric vectors that capture semantic similarity, enabling search, clustering, recommendations, and retrieval-augmented generation (RAG).

Where it’s used: Search relevance, deduplication, content tagging, recommendation engines, and grounding large language models (LLMs) with enterprise data.
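As a rough illustration of how embedding vectors capture semantic similarity, the sketch below ranks a few texts against a query using cosine similarity. The three-dimensional vectors are made-up stand-ins chosen for readability; real embedding models return much longer vectors, and nothing here calls an actual embedding API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings (hypothetical values).
docs = {
    "reset a password": [0.9, 0.1, 0.0],
    "rotate access keys": [0.7, 0.3, 0.1],
    "quarterly sales report": [0.0, 0.2, 0.9],
}

# Stand-in for the embedding of a query such as "change my login credentials".
query = [0.8, 0.2, 0.0]

# Rank documents from most to least similar to the query.
ranked = sorted(docs, key=lambda text: cosine_similarity(query, docs[text]), reverse=True)
for text in ranked:
    print(f"{cosine_similarity(query, docs[text]):.3f}  {text}")
```

In a retrieval-augmented setup, the top-ranked texts would then be passed to a language model as grounding context.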

#4. GPT-4o mini (33.54%)

GPT-4o mini brings much of GPT-4o’s flexibility to teams with tight latency, throughput, or cost constraints. Lighter-weight yet still multimodal, it’s well suited for scaling AI assistants across large user bases or embedding AI into edge or real-time applications.

Where it’s used: In-app copilots, field/edge devices, customer support chats, and thin-client experiences where every millisecond and dollar counts.

#5. DALL·E 3 (23.91%)

DALL·E 3 continues to define text-to-image excellence, used by nearly one in four AI-adopting organizations. It generates high-fidelity, instruction-following synthetic imagery from natural language prompts.

Where it’s used: Product mockups, marketing assets, brand concepting, UI illustration, visual A/B experiments, and rapid creative prototyping.

#6. GPT-4.1 (22.98%)

Positioned as an enhancement to GPT-4, GPT-4.1 focuses on improved reasoning quality, better adherence to instructions, and reduced hallucinations. While newer omni-class models grab headlines, GPT-4.1’s reliability profile makes it attractive for regulated or accuracy-sensitive workflows.

Where it’s used: Policy generation, compliance documentation, report drafting, analysis review tasks, and other high-assurance enterprise use cases.

#7. GPT-4 (22.67%)

Released in 2023, GPT-4 still shows strong staying power in enterprise environments. With a large context window and robust reasoning ability, it remains trusted in mission-critical production where stability and tested behavior matter more than cutting-edge features.

Where it’s used: Established AI apps, long-form content generation, complex knowledge analysis, and enterprise chat interfaces with guardrails.

#8. text-embedding-3-large (22.36%)

The first of two newer embedding models on this list, text-embedding-3-large improves semantic fidelity and recall for enterprise-scale search and retrieval. Its richer vector representations help systems understand nuanced language across long or technical documents.

Where it’s used: Knowledge graph enrichment, legal/technical corpus search, AI copilots that reference policies or codebases, and advanced RAG.

#9. text-embedding-3-small (21.74%)

text-embedding-3-small delivers strong semantic performance in a smaller, faster, and more cost-efficient package. It is ideal for large-scale indexing jobs, high-QPS search, and workloads running in constrained environments.

Where it’s used: Log enrichment, lightweight semantic tagging, personalization at scale, and near-real-time similarity search.

#10. o3-mini (19.88%)

Rounding out the list, o3-mini is an efficient OpenAI model showing meaningful traction across cloud estates. Though less publicly marketed than others, its uptake suggests strong utility in resource-constrained, low-latency, or embedded AI scenarios.

Where it’s used: Batch automation, small-footprint agents, operational scripting, and embedded intelligence in cloud tooling.

AI model usage insights from the Orca Research Pod

The Orca Research Pod continuously analyzes billions of scans of cloud assets across the globe and hundreds of thousands of code repositories to surface emerging technology patterns and security risks. Their latest findings on AI model adoption informed this 2025 ranking.

The team recently presented key insights on the rise of AI in cloud environments in the 2025 State of Cloud Security Report, which also uncovered broader trends covering a wide range of cloud security risks.

How Orca uses AI models to improve cloud security

Orca became the first CNAPP to integrate with GPT-3 back in early 2023, applying generative AI to accelerate risk detection, simplify investigations, and streamline remediation workflows.

Since then, we’ve continued to evolve the Orca Cloud Security Platform to secure AI innovation in the cloud and leverage AI to enhance cloud security. Our AI Security Posture Management (AI-SPM) capabilities give organizations full and deep visibility into AI risks across more than 50 AI models and packages.

Meanwhile, Orca AI powers capabilities throughout our Platform, including AI-driven discovery of assets and risks using natural-language search, on-demand guidance for triage and investigation, automated generation of remediation code and instructions, multilingual support across 50+ languages, and more.

Learn more

Ready to secure your AI innovation in the cloud? Schedule a personalized 1:1 demo to see how Orca helps you secure your entire multi-cloud or hybrid-cloud estate.