The Challenge

AI Deployments Rapidly Introduce Cloud Security Risks

AI presents a myriad of business benefits. Yet without the right AI security in place, organizations face new cloud risks, some familiar and some unique to AI models. Siloed AI-SPM products only multiply the challenges security teams already face, such as alert fatigue, blind spots, and an inability to see the bigger picture, while leaving organizations exposed to critical risks.

Security teams don’t know which AI models are in use and aren’t able to discover shadow AI.

Misconfigured public access settings, exposed keys, and unencrypted data can cause model theft.

If sensitive data is accidentally included in training data, AI models can expose that data, including PII.

Our Approach

Complete End-to-End AI-SPM with 100% Visibility

Orca’s AI-SPM leverages our patented, agentless SideScanning™ technology to provide the same visibility, risk insight, and deep data for AI models that it provides for other cloud resources, while also addressing unique AI use cases. Orca’s AI-SPM solution covers 50+ AI models and software packages, allowing you to confidently adopt AI tools while maintaining visibility and security for your entire tech stack, with no point solutions needed.

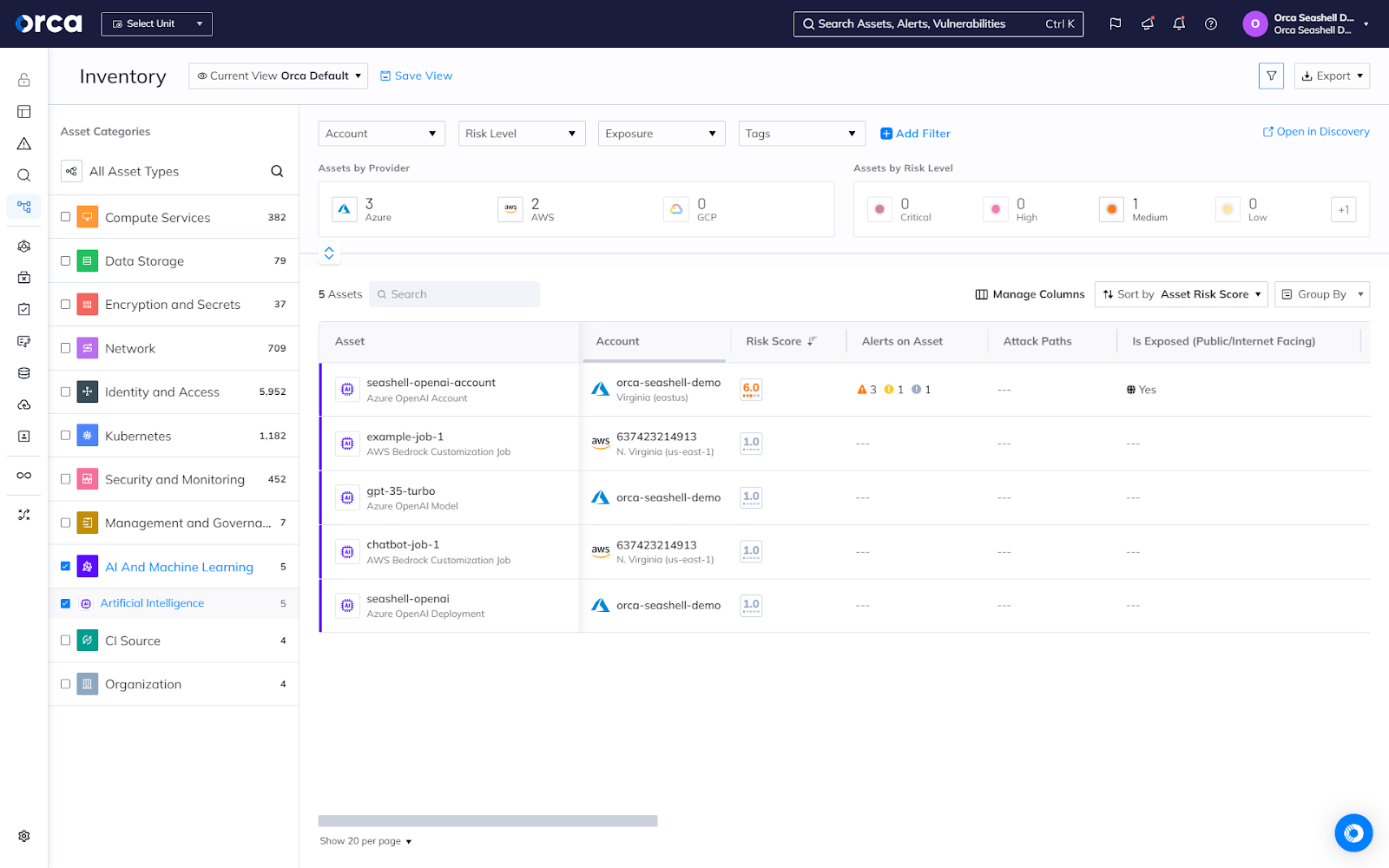

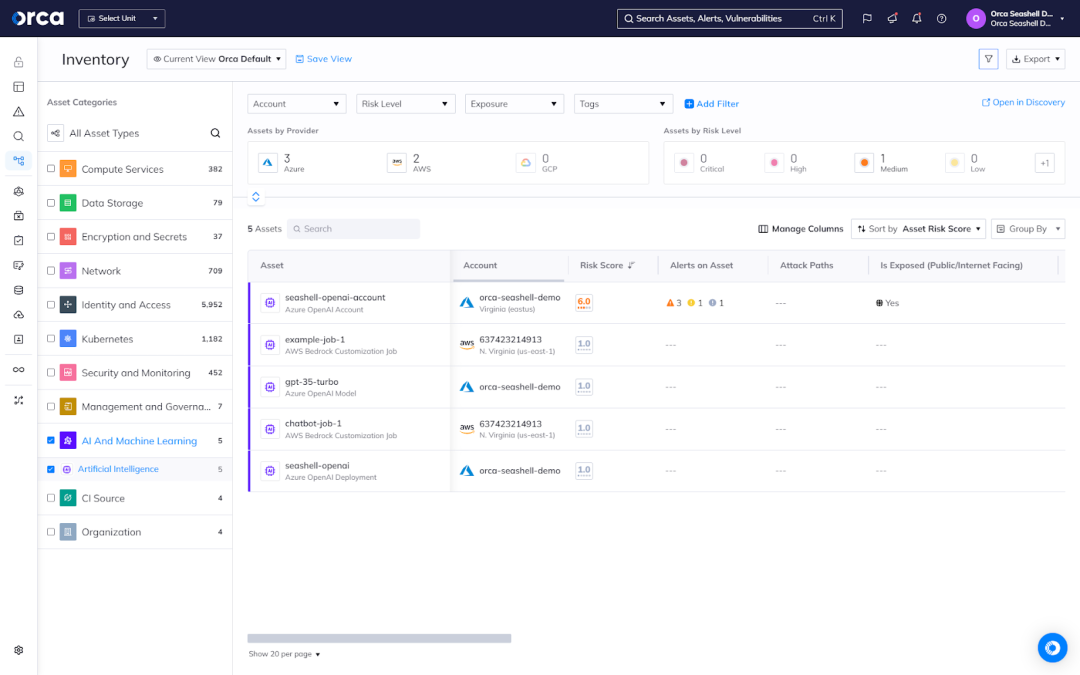

Complete AI and ML inventory and bill of materials (BOM)

Orca gives you a complete view of all AI models deployed in your environment, both managed and unmanaged, including any shadow AI.

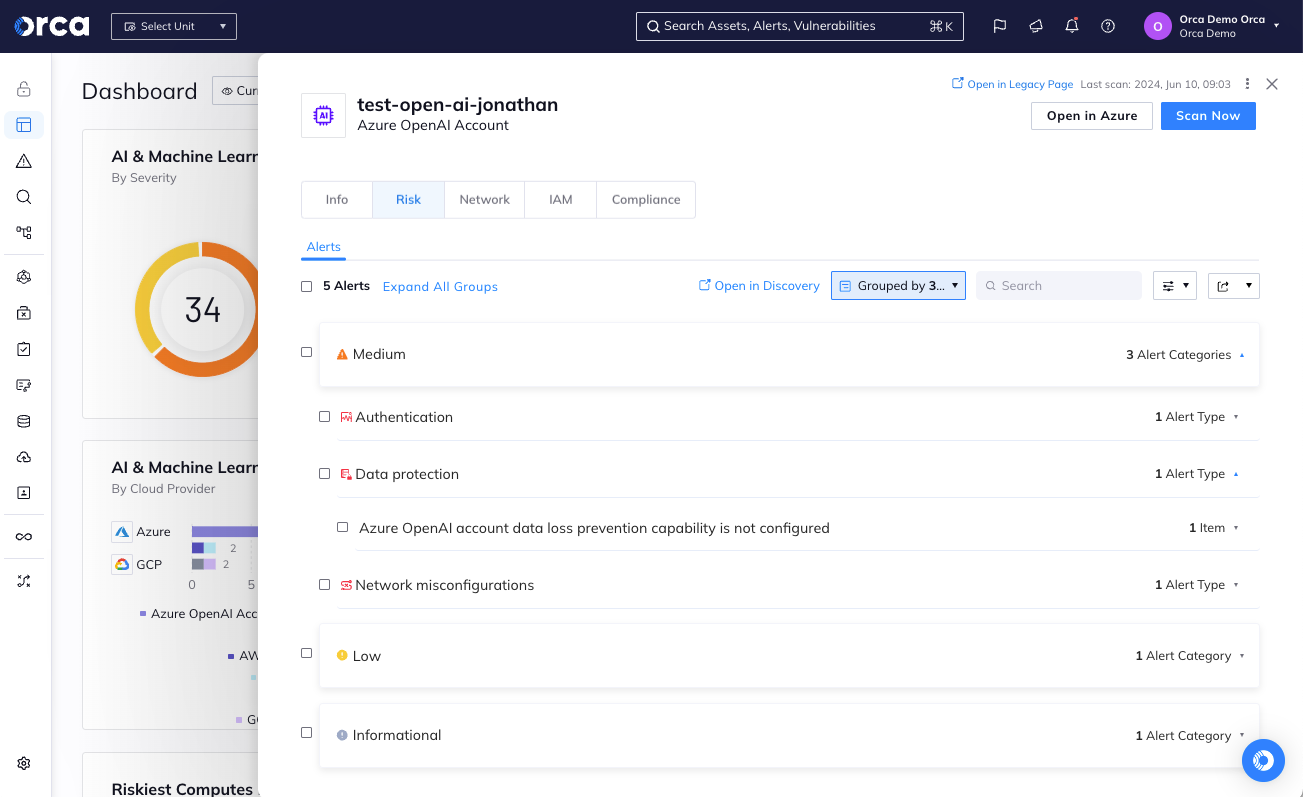

Address AI misconfigurations

Orca ensures that AI models are configured securely, covering network security, data protection, access controls, and IAM.

Detect sensitive data

Orca alerts if any AI models or training data contain sensitive information so you can take appropriate action to prevent unintended exposure.

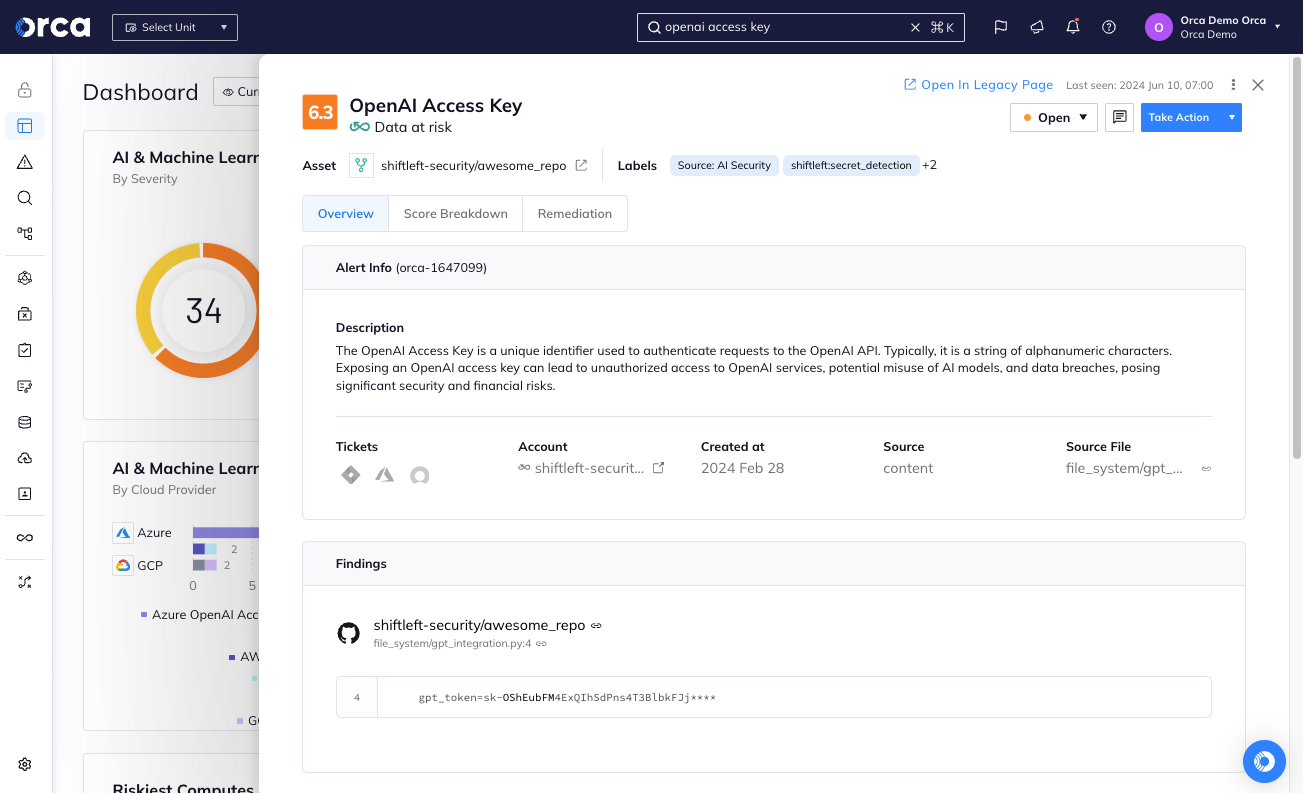

Discover exposed access keys

Orca detects when keys and tokens to AI services and software packages are unsafely exposed in code repositories.

Get full visibility into your deployed AI projects

As with other cloud resources, shadow AI and LLMs are a major security concern. Orca continuously scans your entire cloud environment and detects all deployed AI models, keeping security teams informed about every AI project: whether it contains sensitive data and whether it is secure.

- Get a complete AI inventory and BOM for every AI model deployed in your cloud, giving you visibility into any shadow AI.

- Orca covers the major cloud provider AI services, such as Azure OpenAI, Amazon Bedrock, Amazon SageMaker, and Google Vertex AI.

- Orca also inventories the 50+ most commonly used AI software packages, including PyTorch, TensorFlow, OpenAI, Hugging Face, scikit-learn, and many more.
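To illustrate what package-level inventory involves, here is a minimal Python sketch that flags AI packages in a requirements.txt-style file. It is not Orca's implementation: the `AI_PACKAGES` watchlist, the function names, and the simplified line parsing are assumptions made for illustration only.

```python
import re

# Hypothetical watchlist: PEP 503-normalized PyPI names -> display names.
# Deliberately tiny; a real inventory would track many more packages.
AI_PACKAGES = {
    "torch": "PyTorch",
    "tensorflow": "TensorFlow",
    "openai": "OpenAI",
    "transformers": "Hugging Face Transformers",
    "huggingface-hub": "Hugging Face Hub",
    "scikit-learn": "scikit-learn",
}


def normalize(name: str) -> str:
    """Normalize a distribution name the way PEP 503 describes."""
    return re.sub(r"[-_.]+", "-", name).lower()


def inventory_requirements(text: str) -> dict[str, str]:
    """Return {display name: rest of the requirement, e.g. its version specifier}."""
    found = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-"):  # skip blank lines and pip options such as -r
            continue
        # The distribution name is the leading run of letters, digits, '.', '_' or '-'.
        match = re.match(r"([A-Za-z0-9][A-Za-z0-9._-]*)(.*)", line)
        if not match:
            continue
        name = normalize(match.group(1))
        if name in AI_PACKAGES:
            found[AI_PACKAGES[name]] = match.group(2).strip() or "(unpinned)"
    return found


requirements = """
torch==2.2.0
scikit_learn>=1.3   # underscores normalize to hyphens
requests==2.31.0
openai
"""
print(inventory_requirements(requirements))
```

Normalizing names first means `scikit_learn`, `Scikit-Learn`, and `scikit.learn` all resolve to the same watchlist entry.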

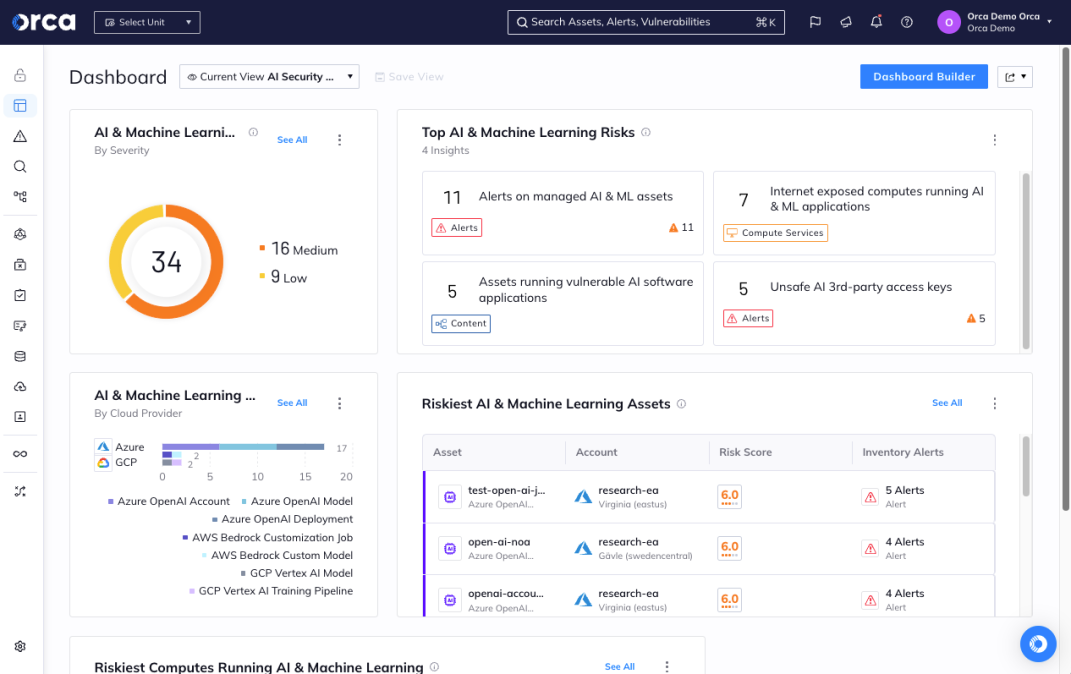

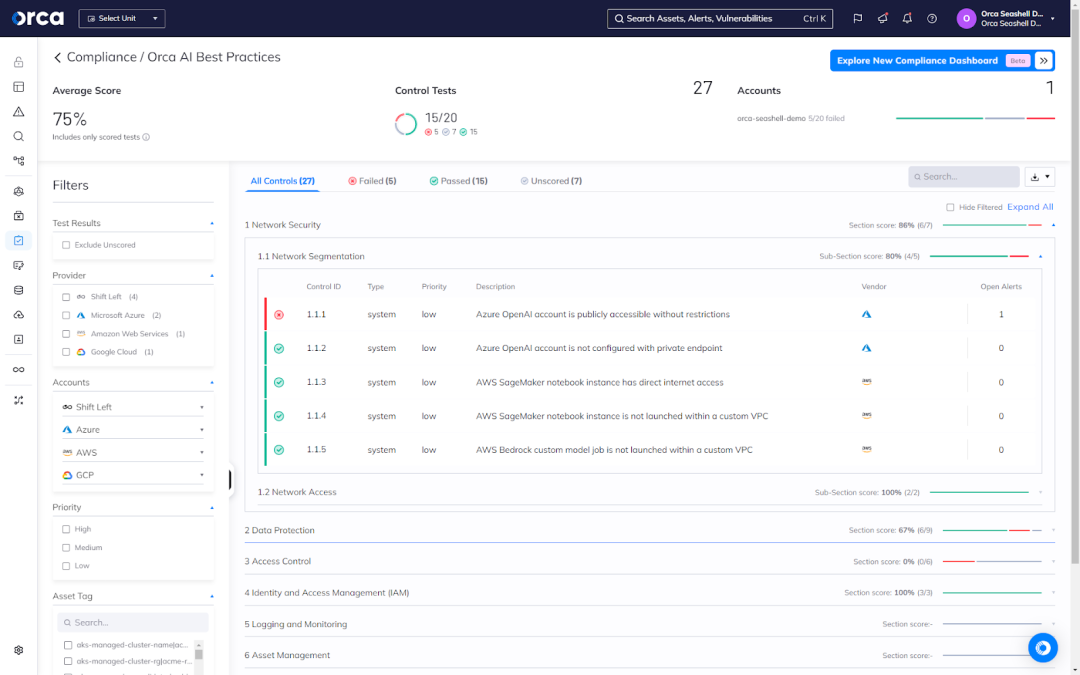

Leverage AI Posture Management (AI-SPM)

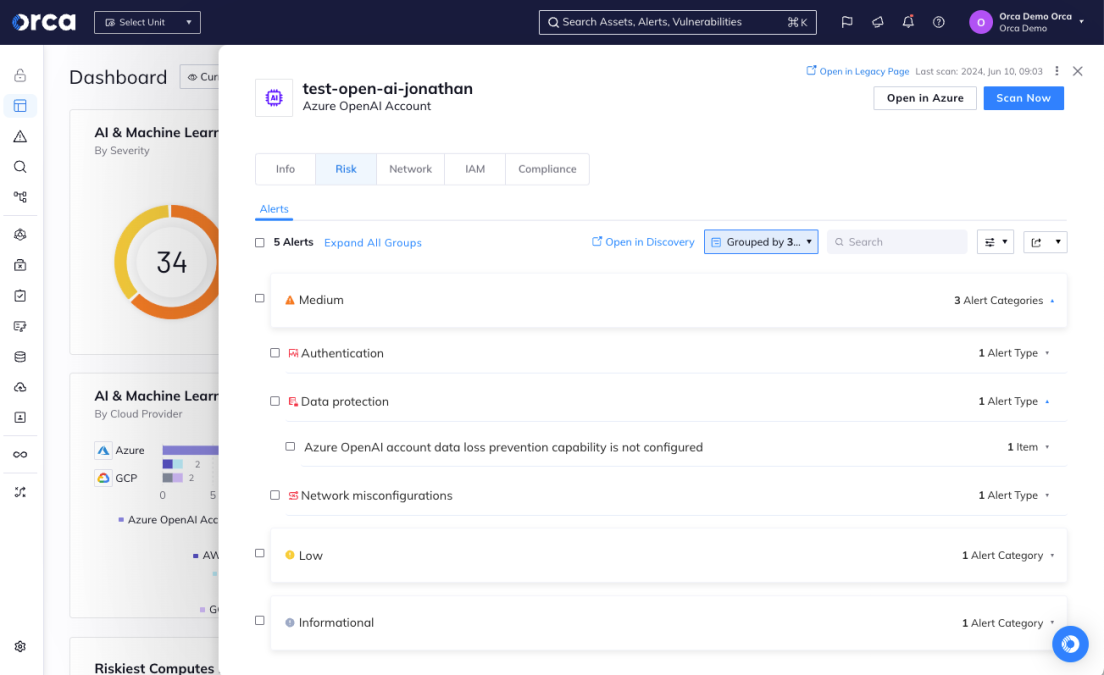

Orca’s AI-SPM alerts you when AI models are at risk from misconfigurations, overprivileged permissions, Internet exposure, and more, and provides automated and guided remediation to fix issues quickly.

- Orca’s compliance framework for AI best practices includes dozens of rules for proper upkeep of AI models, network security, data protection, access controls, IAM, and more.

- Protect AI models and training data against data leakage and data poisoning risks.
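As a rough illustration of how posture rules like these can be expressed, the following Python sketch evaluates an Amazon SageMaker notebook instance description that is assumed to have been fetched already (for example with boto3's `describe_notebook_instance`). The `DirectInternetAccess`, `RootAccess`, and `KmsKeyId` keys follow that API's response fields; the rule names and the choice of rules are hypothetical and are not Orca's actual rule set.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    resource: str  # notebook instance name
    rule: str      # hypothetical rule identifier


def check_notebook(nb: dict) -> list[Finding]:
    """Evaluate three illustrative posture rules against one notebook description.

    `nb` is assumed to be shaped like a SageMaker DescribeNotebookInstance response.
    """
    name = nb.get("NotebookInstanceName", "<unknown>")
    findings = []
    # Network security: the notebook should not have direct internet access.
    if nb.get("DirectInternetAccess") == "Enabled":
        findings.append(Finding(name, "direct-internet-access"))
    # Access control: users should not have root access inside the notebook.
    if nb.get("RootAccess") == "Enabled":
        findings.append(Finding(name, "root-access-enabled"))
    # Data protection: the attached storage should use a customer-managed KMS key.
    if not nb.get("KmsKeyId"):
        findings.append(Finding(name, "no-customer-managed-kms-key"))
    return findings


notebooks = [
    {"NotebookInstanceName": "research-nb", "DirectInternetAccess": "Enabled", "RootAccess": "Enabled"},
    {"NotebookInstanceName": "prod-nb", "DirectInternetAccess": "Disabled",
     "RootAccess": "Disabled", "KmsKeyId": "example-key-id"},
]
for nb in notebooks:
    for finding in check_notebook(nb):
        print(finding.resource, finding.rule)
```

Keeping each rule as a small, independent check makes it straightforward to add rules or map each finding to a remediation step.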

Detect sensitive data in AI models and training data

Orca uses Data Security Posture Management (DSPM) capabilities to scan and classify the data stored in AI projects, and alerts if any sensitive data is found. Because AI models can be manipulated into leaking their training data, knowing where sensitive data is located lets security teams make sure it is removed.

- Orca scans and classifies all the data stored in AI projects, as well as data used to train or fine-tune AI models.

- Receive an alert when sensitive data is found, such as telephone numbers, email addresses, social security numbers, or personal health information.

- Ensure that your AI models are fully compliant with regulatory frameworks and CIS benchmarks.
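Here is a minimal sketch of pattern-based classification for a few of the data types listed above, assuming training records are available as plain strings. The regular expressions are simplified, US-centric assumptions that will produce both false positives and false negatives; a production classifier would need validation and context checks on top of patterns like these, and nothing here describes Orca's classifier.

```python
import re

# Simplified, hypothetical detectors for a few sensitive data types.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Optional +1 prefix, optional parentheses, then 3-3-4 digits.
    "us_phone": re.compile(r"(?<!\d)(?:\+1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]\d{4}(?!\d)"),
    # US Social Security number in the dashed 3-2-4 form.
    "us_ssn": re.compile(r"(?<!\d)\d{3}-\d{2}-\d{4}(?!\d)"),
}


def classify_record(text: str) -> set[str]:
    """Return the labels of every pattern that matches somewhere in the text."""
    return {label for label, pattern in PATTERNS.items() if pattern.search(text)}


def scan_dataset(records):
    """Yield (index, labels) for each training record that looks sensitive."""
    for i, record in enumerate(records):
        labels = classify_record(record)
        if labels:
            yield i, labels


training_records = [
    "The quarterly report is attached.",
    "Contact jane.doe@example.com or (555) 123-4567 for access.",
    "Patient SSN: 123-45-6789",
]
for index, labels in scan_dataset(training_records):
    print(index, sorted(labels))
```

Reporting the record index, rather than the matched value, points security teams at the data to remove without copying the sensitive value into another log.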

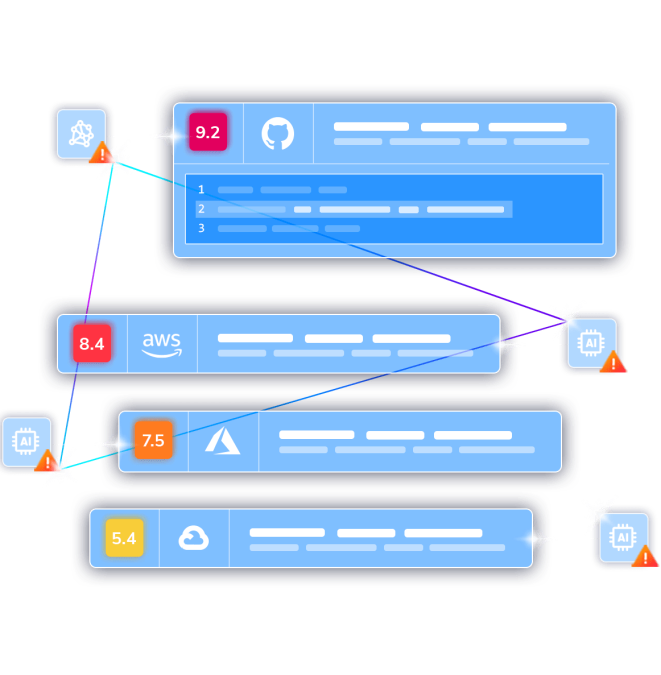

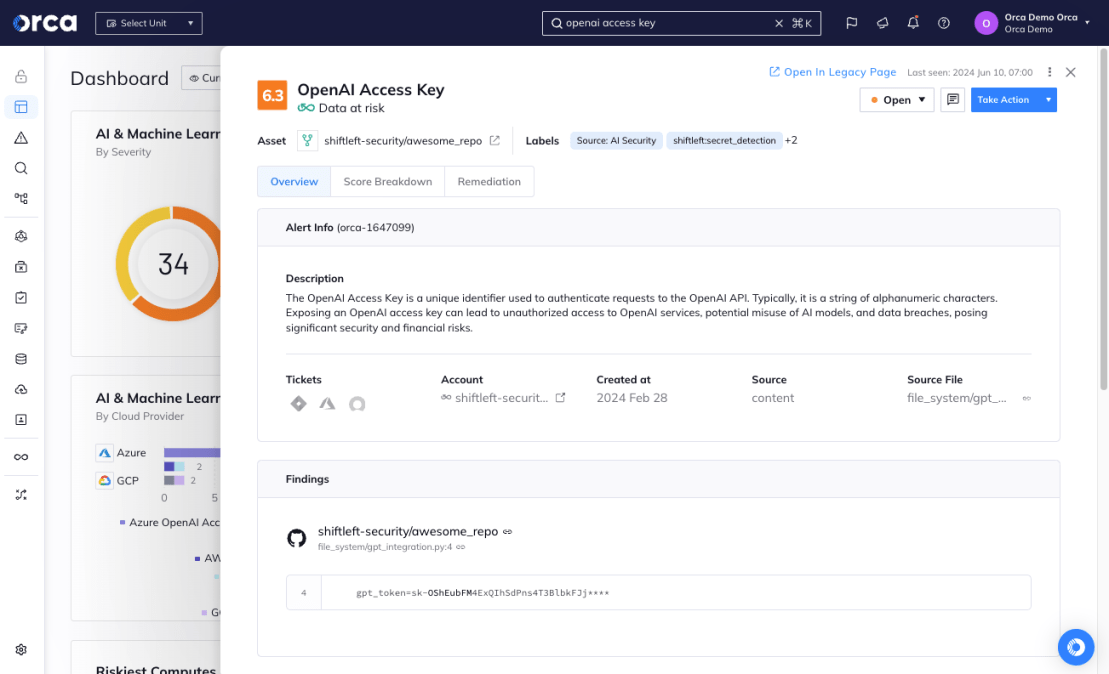

Detect exposed AI access keys to prevent tampering

Orca detects when AI keys and tokens are left in code repositories, and sends out alerts so security teams can swiftly remove the keys and limit any damage.

- Orca scans your code repositories (such as GitHub and GitLab) and detects any leaked AI access keys, such as OpenAI and Hugging Face tokens.

- Leaked access keys could grant bad actors access to AI models and their training data, allowing them to make API requests, tamper with the AI model, and exfiltrate data.
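The idea behind repository key scanning can be sketched in Python as a line-by-line regular-expression search. The token shapes are assumptions: OpenAI secret keys commonly start with `sk-` and Hugging Face user access tokens with `hf_`, but lengths and character sets vary, so the patterns below are intentionally loose. The function names are hypothetical, matches are not verified with the providers, and this is not how Orca's scanner is implemented.

```python
import re
from pathlib import Path

# Loose, assumed token shapes; exact formats vary by key type.
SECRET_PATTERNS = {
    "openai_api_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "huggingface_token": re.compile(r"\bhf_[A-Za-z0-9]{30,}"),
}


def scan_text(text: str, source: str = "<text>"):
    """Yield (source, line_number, secret_type, redacted_match) for each hit."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in SECRET_PATTERNS.items():
            for m in pattern.finditer(line):
                # Never echo the full secret; keep only a short prefix.
                yield source, lineno, kind, m.group(0)[:6] + "..."


def scan_repo(root: str):
    """Scan every readable UTF-8 text file under a checked-out repository."""
    for path in Path(root).rglob("*"):
        if path.is_file() and ".git" not in path.parts:
            try:
                text = path.read_text(encoding="utf-8")
            except (UnicodeDecodeError, OSError):
                continue  # skip binary or unreadable files
            yield from scan_text(text, str(path))


# Fake keys are built at runtime so no real-looking secret is stored literally.
sample = 'OPENAI_API_KEY = "sk-' + "A" * 48 + '"\n' + 'HF_TOKEN = "hf_' + "b" * 34 + '"\n'
for hit in scan_text(sample, "config.py"):
    print(hit)
```

Redacting matches to a short prefix keeps the scanner's own output from becoming another place where the key leaks. The leading `\b` avoids matching `sk-` inside words such as `task-`.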

Orca Has You Covered

Frequently Asked Questions

AI Security Posture Management (AI-SPM) is an emerging field of cloud security that involves addressing security risks and compliance issues associated with using AI models. It involves a collection of strategies, solutions, and practices designed to enable organizations to securely leverage AI models and LLMs in their business.

Like other cloud assets, AI models and LLMs present inherent security risks, including limited visibility, accidental public access, shadow data, unencrypted data, unsecured keys, and more.

In recent years, AI usage has increased dramatically, and more than half of all organizations already use it in the course of their business. AI’s widespread adoption, coupled with the security risks of using AI services and packages, puts organizations at heightened risk of security incidents. The following figures help illustrate this risk:

- 94% of organizations using OpenAI have at least one account that is publicly accessible without restrictions.

- 97% of organizations using Amazon SageMaker notebooks have at least one with direct internet access.

Despite its advantages, AI poses significant security risks that organizations must consider and address, including:

- Lack of visibility: Security teams don’t always know which AI models are currently in use and aren’t able to discover shadow AI.

- Data exposure: Misconfigured public access settings, exposed keys, and unencrypted sensitive data can cause data leakage.

- Data poisoning: Bad actors can potentially tamper with data and insert malicious content.

- Key exposure in repositories: Keys exposed in code repositories allow bad actors to make API requests, tamper with the AI model, and exfiltrate data.

AI Security Posture Management involves several important activities to cover end-to-end AI risks, including:

- Cloud scanning and inventorying: The entire cloud estate is scanned to generate a full inventory of all AI models deployed within the cloud environment(s).

- Security posture management: AI models are configured securely, including network security, data protection, access controls, and IAM.

- Sensitive data detection: Sensitive information in AI models or training data is identified and alerts are generated so appropriate action can be taken.

- Third-party access detection: Teams are alerted when sensitive keys and tokens to AI services are exposed in code repositories.

The Orca Cloud Security Platform secures your AI models end to end, from training and fine-tuning to production deployment and inference.

- AI and ML Inventory and BOM: Get a complete view of all AI models that are deployed in your environment – both managed and unmanaged.

- Security posture management: Ensure that AI models are configured securely, including network security, data protection, access controls, and IAM.

- Sensitive data detection: Be alerted if any AI models or training data contain sensitive information so you can take appropriate action.

- Third-party access detection: Detect when keys and tokens to AI services, such as OpenAI and Hugging Face, are unsafely exposed in code repositories.