Organizations are increasingly leveraging Generative AI and Large Language Models (LLMs) to optimize business processes and improve products and services. A recent Gartner report predicts that global spending on AI software will grow to $298 billion by 2027, a compound annual growth rate (CAGR) of 19.1%. Some even consider these figures conservative.

From assets scanned by the Orca Cloud Security Platform, we found that over 37% of organizations have already adopted at least one AI service. The most widely used are Amazon SageMaker and Bedrock (68%), followed by Azure OpenAI (50%) and Google Vertex AI (21%).

Along with all the business benefits and improved products and services that AI brings, there is one catch: security. In our 2024 State of Cloud Security Report, we found that 82% of Amazon SageMaker users have exposed notebooks, meaning that they are publicly accessible. Because AI models are often trained on sensitive data and intellectual property, these cloud resources are at even higher potential risk than other resources. This has given rise to a new type of security need: AI Security.

While the exposure of SageMaker notebooks is a significant concern, it is a condition that falls within the user’s responsibility under the shared responsibility model. Therefore, it’s important that organizations follow best practices and use the right AI security tools so they can easily identify and remediate these exposures, ensuring the secure deployment of their AI and ML workloads.

Today we are pleased to announce that the Orca Cloud Security Platform now offers complete end-to-end AI Security Posture Management (AI-SPM) capabilities, so Orca customers can continue to adopt AI at full speed while doing so safely. Because AI security is delivered from Orca’s comprehensive platform, organizations avoid adding yet another AI-specific point solution to their security arsenal, which reduces overhead and keeps AI security inside their existing workflows.

Why is Orca adding AI-SPM?

Many of the security risks facing AI models and LLMs are similar to those facing other cloud assets, including limited visibility, accidental public access, unencrypted sensitive data, shadow data, and unsecured keys. Leveraging Orca’s patented agentless SideScanning technology, we’ve extended our platform to also cover AI models, providing the same risk insights and deep data that we provide for other cloud resources. In addition, we’re applying our existing technology to use cases that are unique to AI security, such as detecting sensitive data in training sets that could later be unintentionally exposed by an LLM or Generative AI application.

Since the Orca platform does not require agents to inspect cloud resources, coverage is continuous and complete: Orca scans any new AI resources as soon as they are deployed and alerts on any detected risks. Orca also goes beyond covering AI models: the platform maps out all the AI-related packages used to train models or run inference.

With AI security built into our comprehensive cloud security platform, organizations don’t need to procure, deploy, or learn how to use another separate point solution, and can instead use one unified platform for all their cloud security needs.

What are Orca’s AI Security capabilities?

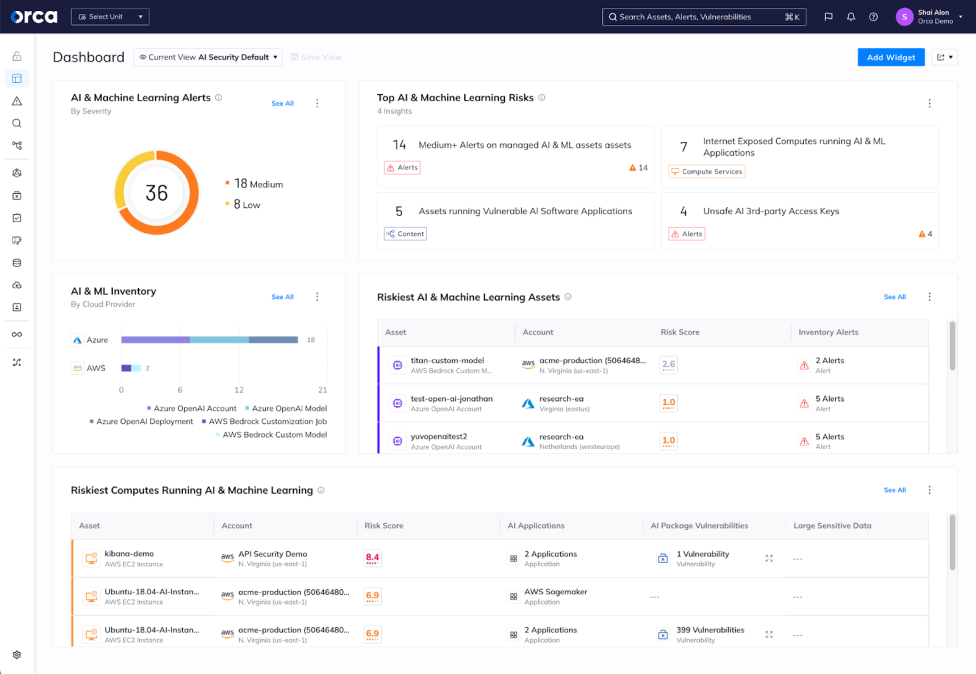

Orca includes a new AI Security dashboard that provides an overview of the AI models deployed in the cloud environment, what data they contain, and whether they are at risk. Orca covers risks end-to-end, from training and fine-tuning to production deployment and inference. Orca connects with your AI data and processes at every level: the managed cloud AI services, the unmanaged AI models and packages your developers are using, and even shift-left detection of leaked secret tokens for AI services in your codebase.

Armed with these insights, security teams can protect their AI projects from unauthorized access and data leaks. Below, we describe each capability in terms of the AI security challenge it addresses and how the Orca platform solves it.

#1. AI and ML BOM + inventory

Challenge: Much like other resources in the cloud, shadow AI and LLMs are a major concern. In their excitement to explore all the opportunities that Generative AI brings, developers do not always wait for IT approval before integrating Gen AI services into their flows, and security is probably not the first thing on their minds. This leaves security teams in the dark about which AI projects have been deployed in their environment, whether they contain sensitive data, and whether they are secure.

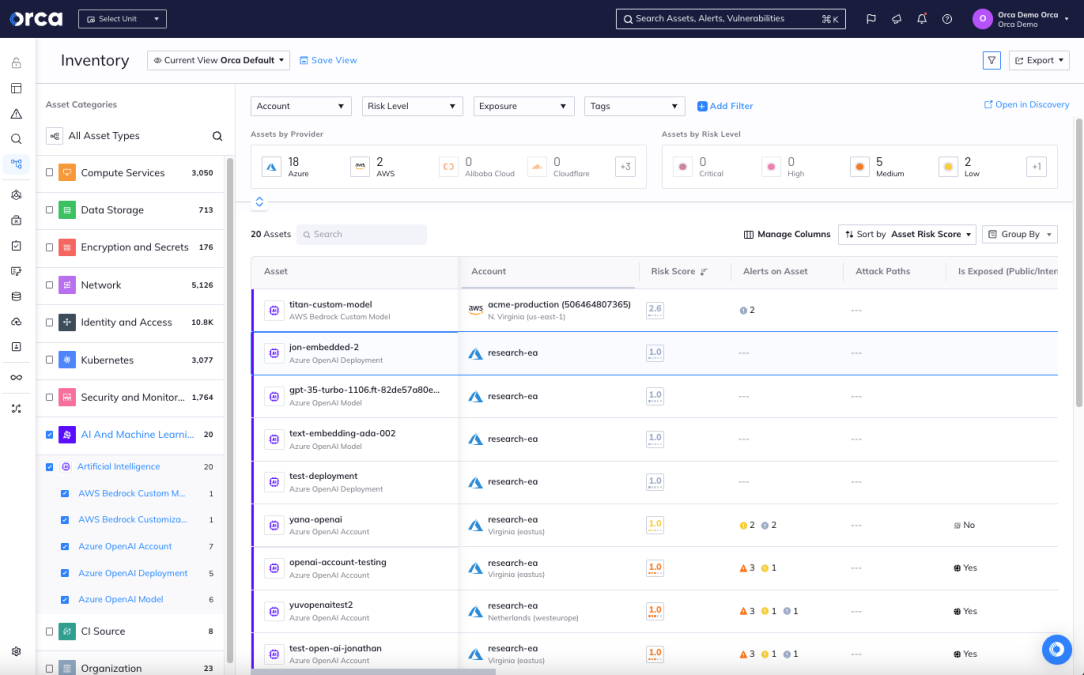

Orca solution: Orca scans your entire cloud environment and detects all deployed AI models, providing a full inventory and Bill of Materials (BOM). Orca detects projects on Azure OpenAI, Amazon Bedrock, Google Vertex AI, and Amazon SageMaker, as well as projects using any of the 50+ most commonly used AI software packages, including PyTorch, TensorFlow, OpenAI, Hugging Face, scikit-learn, and many more.

The Orca Platform shows a full inventory and bill of materials of all deployed AI models
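To make the package side of an AI BOM more concrete, here is a minimal Python sketch that flags AI/ML libraries declared in a pip `requirements.txt` file. It is an illustration under stated assumptions, not how Orca builds its inventory: the `AI_PACKAGES` set and the `normalize` and `ai_bom` helpers are hypothetical, and the set lists only a handful of examples rather than Orca’s 50+ packages. Package names are normalized following PEP 503 so that `scikit_learn` and `scikit-learn` count as the same package.

```python
import re

# Hypothetical, deliberately short set of AI/ML distribution names
# (already PEP 503-normalized). Orca's actual package list is not reproduced here.
AI_PACKAGES = {
    "torch",
    "tensorflow",
    "openai",
    "transformers",
    "huggingface-hub",
    "scikit-learn",
}

# Matches the distribution name at the start of a requirement line,
# stopping at version specifiers, extras, or markers.
_NAME_RE = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name: str) -> str:
    """Normalize a distribution name per PEP 503 (runs of -_. become -, lowercase)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def ai_bom(requirements_text: str) -> dict[str, str]:
    """Return {normalized package: requirement line} for AI packages in a requirements file."""
    found: dict[str, str] = {}
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options such as -r
            continue
        match = _NAME_RE.match(line)
        if match and normalize(match.group(1)) in AI_PACKAGES:
            found[normalize(match.group(1))] = line
    return found
```

For example, `ai_bom("torch==2.2.0\nnumpy>=1.26\nscikit_learn>=1.3\n")` returns `{'torch': 'torch==2.2.0', 'scikit-learn': 'scikit_learn>=1.3'}`. Keeping the original requirement line alongside each name preserves the pinned version, which is what makes the result useful as a bill of materials rather than just a list of names.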

#2. Warn when AI projects are publicly accessible

Challenge: Data used to train AI models is often sensitive and can contain proprietary information. Because a breach of this information could be very damaging, it’s very important to ensure that AI models are not publicly exposed. However, misconfigurations do happen, and especially with shadow AI, security may not be the first thing on developers’ minds. This leaves security teams struggling to ensure that all AI models are kept private.

Orca solution: Because Orca has insight into AI model settings and network access, it alerts whenever public access is allowed, so security teams can quickly fix the issue and prevent data breaches.

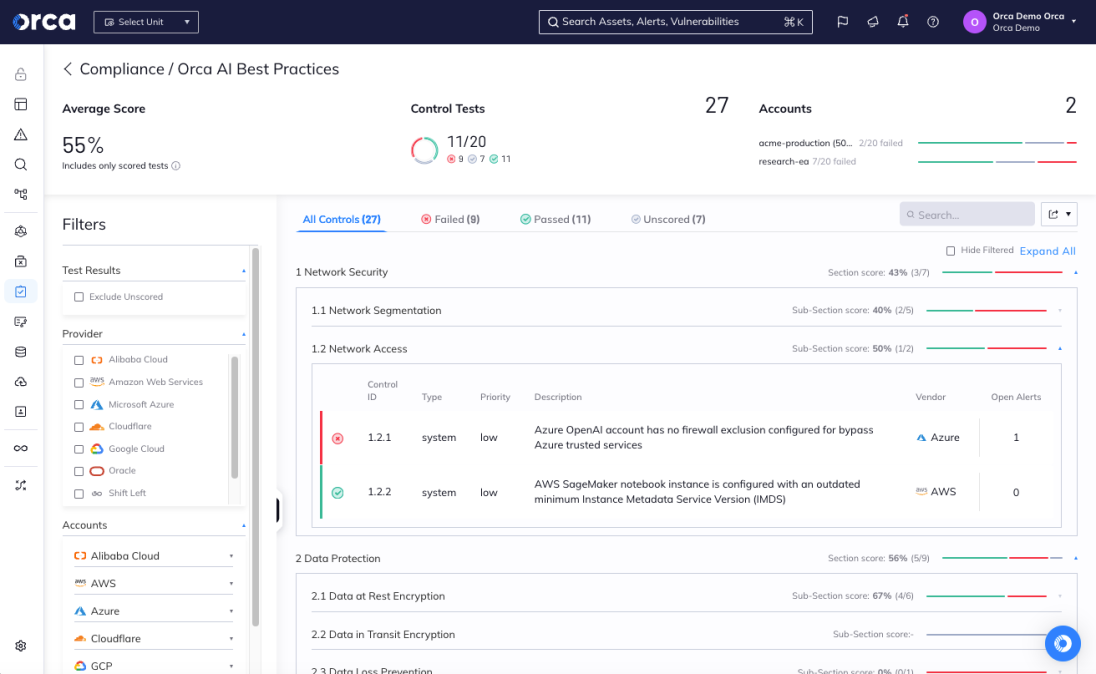

Orca’s AI Security best practices compliance checks provide AI Security Posture Management
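As an illustration of one signal a posture check might use, the sketch below flags SageMaker notebook instances that have direct internet access enabled. It assumes each notebook is represented as a dict shaped like the response of SageMaker’s `DescribeNotebookInstance` API (for example, as returned by boto3’s `describe_notebook_instance`), so it can be tested without AWS credentials. The `publicly_reachable_notebooks` function is hypothetical, and this single setting is not necessarily how Orca determines that a notebook is exposed.

```python
from typing import Any


def publicly_reachable_notebooks(notebooks: list[dict[str, Any]]) -> list[str]:
    """Return names of notebook instances with direct internet access enabled.

    Each dict is assumed to follow the shape of a SageMaker
    DescribeNotebookInstance response.
    """
    flagged = []
    for nb in notebooks:
        # "Enabled" means SageMaker itself provides internet access to the
        # notebook instance, rather than routing it only through the customer's VPC.
        if nb.get("DirectInternetAccess") == "Enabled":
            flagged.append(nb.get("NotebookInstanceName", "<unnamed>"))
    return flagged
```

Working on already-fetched API responses keeps the check itself a pure function; collecting the responses (and handling pagination and regions) would be a separate step.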

#3. Detect sensitive data in AI projects

Challenge: AI models are trained on vast amounts of data. This data may inadvertently contain sensitive information, such as PII and access keys, without developers or security teams even being aware of it. LLMs and Generative AI models could then “spill out” this sensitive data, which bad actors could exploit. In addition, if developers are not aware that the training set contains sensitive data, they may not give the resource the level of security it needs.

Orca solution: Using Orca’s Data Security Posture Management (DSPM) capabilities, Orca scans and classifies all the data stored in AI projects and alerts if it contains sensitive data, such as personally identifiable information (PII) like email addresses, telephone numbers, and social security numbers, or protected health information (PHI). Once security teams know where sensitive data is located, they can make sure these assets are protected with the highest level of security.

Orca displays pertinent security information in the AI Security dashboard
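A heavily simplified sketch of the classification step: scan training records for a few PII patterns and count how many records contain each category. The regular expressions are illustrative assumptions (US-format phone numbers and SSNs only), and the `PII_PATTERNS` table and the `classify_record` and `scan_training_set` helpers are hypothetical. Production DSPM tools typically add validation and surrounding context to keep false positives down, so treat this as a picture of the idea rather than of Orca’s classifier.

```python
import re

# Illustrative patterns only: they will miss many real-world formats
# and can match look-alike strings.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_phone": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_record(text: str) -> set[str]:
    """Return the PII categories whose pattern matches anywhere in text."""
    return {label for label, pattern in PII_PATTERNS.items() if pattern.search(text)}


def scan_training_set(records: list[str]) -> dict[str, int]:
    """Count how many records contain each PII category."""
    counts = {label: 0 for label in PII_PATTERNS}
    for record in records:
        for label in classify_record(record):
            counts[label] += 1
    return counts
```

For example, `scan_training_set(["contact jane.doe@example.com", "ssn 123-45-6789"])` returns `{'email': 1, 'us_phone': 0, 'us_ssn': 1}`. Counting records per category, rather than stopping at the first hit, gives a rough sense of how much sensitive data a training set holds.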

#4. Ensure encryption of data at rest

Challenge: While organizations can take all the best measures to protect the data in AI models, this sensitive data can still be breached. The most straightforward way to ensure that breached data is of little use to bad actors is to encrypt it. However, as with other security controls, encryption may not be top of mind for developers working on their groundbreaking AI projects.

Orca solution: Orca scans AI data in the background and checks that all of it is encrypted at rest, significantly reducing the damage a breach could cause.
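The sketch below shows one common at-rest encryption posture check, similar in spirit to the AWS Config managed rule `sagemaker-notebook-instance-kms-key-configured`: flag SageMaker notebook instances that have no AWS KMS key configured for their storage volume. It assumes each notebook is a dict shaped like a SageMaker `DescribeNotebookInstance` response, where `KmsKeyId` appears only if a key was specified; the `notebooks_without_kms_key` function is hypothetical. Note that it verifies a key was configured, which is a narrower statement than proving the data is encrypted.

```python
from typing import Any


def notebooks_without_kms_key(notebooks: list[dict[str, Any]]) -> list[str]:
    """Return names of notebook instances with no KMS key configured for their storage volume.

    Each dict is assumed to follow the shape of a SageMaker
    DescribeNotebookInstance response.
    """
    return [
        nb.get("NotebookInstanceName", "<unnamed>")
        for nb in notebooks
        # A missing key or an empty string both count as "not configured".
        if not nb.get("KmsKeyId")
    ]
```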

#5. Detect unsafe third-party AI access keys

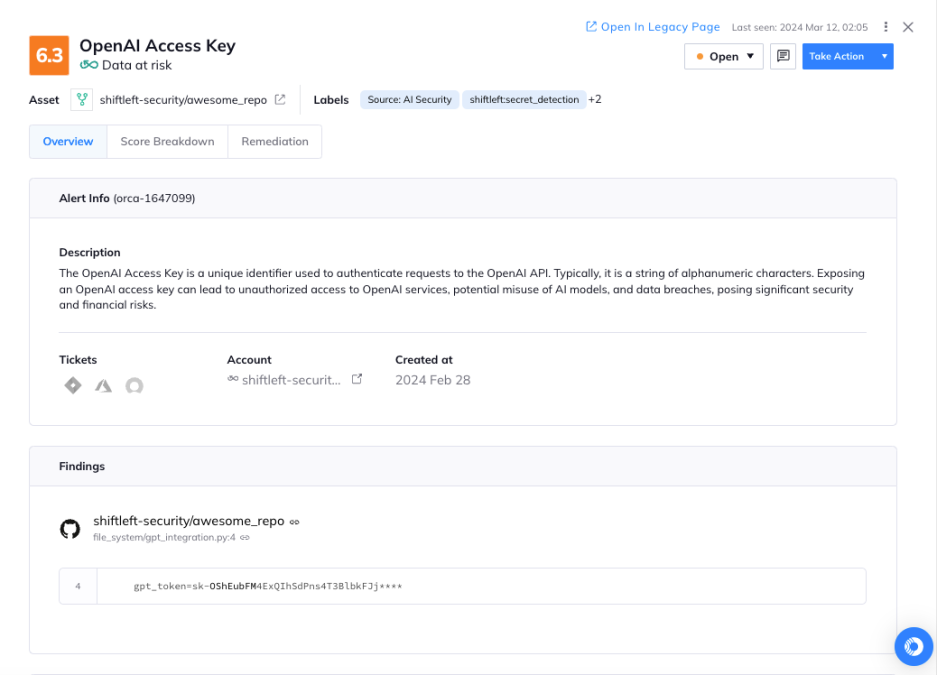

Challenge: Although this is certainly not best practice, developers often leave AI access keys in their code. Anyone who obtains a key can use it to access the AI model and make API requests, allowing them to consume allocated resources, access training files, and potentially exfiltrate data. In addition, this access can be used to tamper with the AI model so that it generates inappropriate or harmful content.

Orca solution: Orca uses its code repository scanning to detect when AI keys and tokens are left in code repositories, and sends out alerts so security teams can swiftly remove the keys and limit any damage.

Orca alerts that it has found an OpenAI access key in a code repository
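To illustrate the idea behind repository secret scanning, here is a minimal sketch that searches files for strings that look like OpenAI API keys and reports redacted matches with line numbers. The `sk-` prefix pattern is an assumption based on the publicly visible format of OpenAI keys, and the helpers (`redact`, `scan_text`, `scan_repo`) are hypothetical. Dedicated secret scanners typically combine provider-specific rules with entropy checks and validation to reduce false positives, so this is a rough heuristic, not Orca’s detector.

```python
import re
from pathlib import Path

# Assumed heuristic: an OpenAI-style secret starts with "sk-" (as a whole word)
# followed by a long run of URL-safe characters.
OPENAI_KEY_RE = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")


def redact(secret: str) -> str:
    """Keep just enough of the key to identify it in an alert."""
    return secret[:6] + "..." + secret[-4:]


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, redacted key) for every candidate key in text."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in OPENAI_KEY_RE.finditer(line):
            hits.append((lineno, redact(match.group())))
    return hits


def scan_repo(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every file under root (skipping .git) and map each file path to its hits."""
    findings = {}
    for path in Path(root).rglob("*"):
        if not path.is_file() or ".git" in path.parts:
            continue
        hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
        if hits:
            findings[str(path)] = hits
    return findings
```

Redacting each match keeps the alert itself from becoming another place where the key is leaked, while the first and last characters are usually enough to find and rotate the right key.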

Which AI services does Orca scan?

Orca scans AI models deployed on Azure OpenAI, Amazon Bedrock, Google Vertex AI, and Amazon SageMaker, as well as projects using any of 50+ commonly used AI software packages, including PyTorch, TensorFlow, OpenAI, Hugging Face, scikit-learn, and many more.

About the Orca Cloud Security Platform

Orca identifies, prioritizes, and remediates risks and compliance issues across cloud estates spanning AWS, Azure, Google Cloud, Oracle Cloud, Alibaba Cloud, and Kubernetes. Leveraging its patented SideScanning technology, Orca offers a single, comprehensive cloud security platform, detecting vulnerabilities, misconfigurations, lateral movement, API risks, sensitive data at risk, anomalous events and behaviors, overly permissive identities, and more.

Learn More

Would you like to learn more about Orca’s AI Security capabilities? Sign up for our AI Security webinar on April 23rd at 10:00 AM Pacific, or schedule a personalized 1:1 demo and we can show you how your organization can take full advantage of AI without compromising security.