It’s easy to overlook inheritance in Google Cloud. Inheritance can unintentionally give Compute Engine instances more privileges than intended. So when examining permissions for a resource, keep in mind that the resource might have permissions granted to it via inheritance in addition to the permissions granted to it directly.

In the previous blog post, Lateral Movement in Google Cloud: Abusing the Infamous Default Service Account Misconfiguration, we reviewed some essential Google Cloud components, including service accounts, service account types, roles, and Cloud API access scopes, to understand how these components can be used to move laterally.

Using the same components and a different enumeration process, an attacker can detect which Compute Engine instances could expose the storage data within their Google Cloud project’s scope.

Let’s walk through a demonstration. We start with a basic enumeration process to expose the storage data. Our initial access is achieved via either a compromised compute engine instance or stolen Google Cloud credentials.

Enumerating bucket access permissions

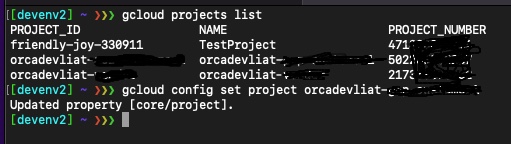

First, we list all the projects available and choose one:

Next, we list all the Google Cloud buckets within the selected project:
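The two listing steps above can be sketched in Python as thin wrappers around the gcloud CLI. This is an illustrative sketch, not the exact commands from the original screenshots: it assumes `gcloud` is installed and authenticated, that `gcloud projects list` and `gcloud storage buckets list` are available in your SDK version, and that their JSON output carries `projectId` and `name` fields. The helper names are hypothetical.

```python
import json
import subprocess


def gcloud_json(args):
    """Run `gcloud <args> --format=json` and return the parsed output."""
    result = subprocess.run(
        ["gcloud", *args, "--format=json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


def list_project_ids(run=gcloud_json):
    """IDs of every project visible to the active credentials."""
    return [project["projectId"] for project in run(["projects", "list"])]


def list_bucket_names(project_id, run=gcloud_json):
    """Names of the buckets in one project (assumes a `name` field per bucket)."""
    listing = run(["storage", "buckets", "list", f"--project={project_id}"])
    return [bucket["name"] for bucket in listing]
```

The `run` parameter exists only so the parsing can be exercised without calling the real CLI.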

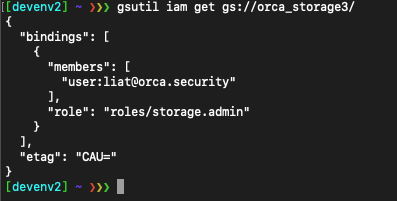

Now, let’s see what permissions this bucket has:

As we can see, only the user ‘[email protected]’ has admin permissions on the bucket ‘orca_storage3’.
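To make this check repeatable, a bucket’s IAM policy can be parsed programmatically. The sketch below assumes the policy has been exported as JSON (for example with `gsutil iam get gs://BUCKET`) and follows the standard IAM shape of `bindings`, each with a `role` and a list of `members`. The sample policy, the `roles/storage.admin` role, and the principal are placeholders for illustration, not values taken from the screenshots.

```python
def members_with_role(policy, role):
    """Return the sorted principals bound to `role` in an IAM policy dict."""
    members = set()
    for binding in policy.get("bindings", []):
        if binding.get("role") == role:
            members.update(binding.get("members", []))
    return sorted(members)


# Hypothetical bucket policy; the principal is a placeholder.
sample_policy = {
    "bindings": [
        {"role": "roles/storage.admin", "members": ["user:alice@example.com"]},
    ]
}

print(members_with_role(sample_policy, "roles/storage.admin"))
```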

But to expose the data in this bucket, does an attacker have to compromise the user [email protected]? We will try to answer that question later. Let’s continue enumerating and see how an attacker could still expose the data even without access to the user [email protected].

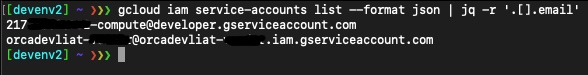

Let’s list the service accounts in the project:

We can see that our project has two service accounts enabled.

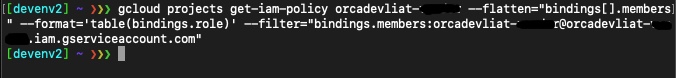

Let’s choose a service account and list all the roles it has:

We can see the first service account has no roles, which means there is not much we can do with it.

Let’s move on and select the other service account:

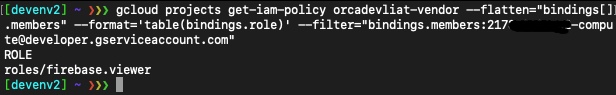

This service account has the following roles: roles/firebase.viewer.
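The role lookup for both service accounts can be sketched as a filter over the project’s IAM policy. This assumes the policy was exported as JSON (for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`). The service account emails below are hypothetical. Note that a project-level policy shows only bindings made on the project itself; roles inherited from a folder or organization would need those policies checked as well.

```python
def roles_for_service_account(project_policy, email):
    """Return the sorted roles bound to a service account in a policy dict."""
    member = f"serviceAccount:{email}"
    return sorted(
        binding["role"]
        for binding in project_policy.get("bindings", [])
        if member in binding.get("members", [])
    )


# Hypothetical project policy with two service accounts, one without roles.
SA_ONE = "sa-one@demo-project.iam.gserviceaccount.com"
SA_TWO = "sa-two@demo-project.iam.gserviceaccount.com"
project_policy = {
    "bindings": [
        {"role": "roles/firebase.viewer", "members": [f"serviceAccount:{SA_TWO}"]},
    ]
}

print(roles_for_service_account(project_policy, SA_ONE))
print(roles_for_service_account(project_policy, SA_TWO))
```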

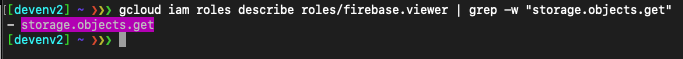

Let’s see if the role ‘roles/firebase.viewer’ has the ‘storage.objects.get’ permission:

It has the ‘storage.objects.get’ permission, which is enough to view storage objects, so we can continue to look for an instance configured with this service account.
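A minimal sketch of the same check in Python, assuming the role was exported with `gcloud iam roles describe roles/firebase.viewer --format=json`, which lists the role’s permissions under `includedPermissions`. The sample dictionary is a shortened, illustrative excerpt, not the role’s full permission list.

```python
def role_grants(role_description, permission):
    """True if the described role includes the given permission."""
    return permission in role_description.get("includedPermissions", [])


# Illustrative excerpt only; the real role contains many more permissions.
sample_role = {
    "name": "roles/firebase.viewer",
    "includedPermissions": ["storage.objects.get"],
}

print(role_grants(sample_role, "storage.objects.get"))
```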

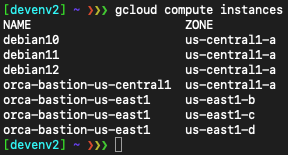

Listing the instances:

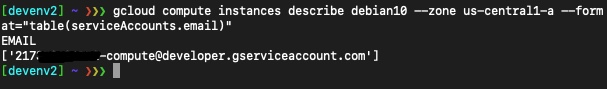

Let’s examine the ‘debian10’ instance and list its service account:

The ‘debian10’ instance is using the service account with the roles/firebase.viewer role. Now let’s list its access scopes:

This instance doesn’t have an access scope that allows reading storage data.
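The scope check can be expressed as a small predicate. The sketch assumes the instance’s scopes are available as a list of URLs, as returned under `serviceAccounts[].scopes` by `gcloud compute instances describe --format=json`. The scope URLs in `STORAGE_READ_SCOPES` are the standard Cloud Storage and cloud-platform OAuth scopes; the scopes assigned to ‘debian10’ below are hypothetical, since the original output is not reproduced here.

```python
# OAuth scopes that permit reading Cloud Storage objects.
STORAGE_READ_SCOPES = frozenset({
    "https://www.googleapis.com/auth/cloud-platform",
    "https://www.googleapis.com/auth/devstorage.read_only",
    "https://www.googleapis.com/auth/devstorage.read_write",
    "https://www.googleapis.com/auth/devstorage.full_control",
})


def scopes_allow_storage_read(scopes):
    """True if at least one scope permits reading storage objects."""
    return any(scope in STORAGE_READ_SCOPES for scope in scopes)


# Hypothetical scope list for an instance that cannot read storage.
debian10_scopes = [
    "https://www.googleapis.com/auth/logging.write",
    "https://www.googleapis.com/auth/monitoring.write",
]

print(scopes_allow_storage_read(debian10_scopes))
```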

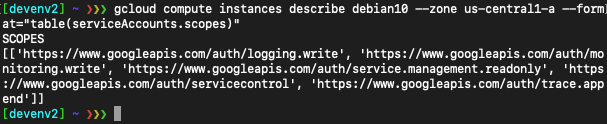

If we try to list buckets from the instance ‘debian10’, we get the following error, saying that we can’t perform this operation due to insufficient OAuth2 scope:

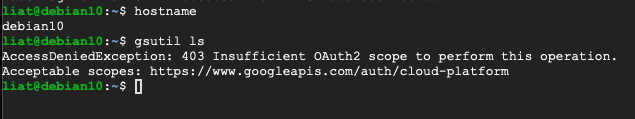

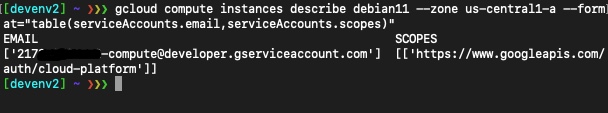

Let’s move on and examine the instance ‘debian11’:

Instance ‘debian11’ has all the appropriate permissions to enable an attacker to expose the storage data.

Instance ‘debian11’ has:

- A service account with the roles/firebase.viewer role

- A role (roles/firebase.viewer) that contains the ‘storage.objects.get’ permission

- An access scope that allows full access to all Cloud APIs
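The three conditions above can be combined into one check over every instance in a project. This is a sketch under stated assumptions: instances are dictionaries shaped like the output of `gcloud compute instances describe --format=json` (a `name` plus `serviceAccounts` entries with `email` and `scopes`), and the role and permission mappings have already been collected. The function name, the service account email, and all sample data are hypothetical.

```python
STORAGE_READ_SCOPES = frozenset({
    "https://www.googleapis.com/auth/cloud-platform",
    "https://www.googleapis.com/auth/devstorage.read_only",
    "https://www.googleapis.com/auth/devstorage.read_write",
    "https://www.googleapis.com/auth/devstorage.full_control",
})


def exposed_instances(instances, roles_by_account, permissions_by_role,
                      permission="storage.objects.get"):
    """Names of instances whose service account and scopes both allow `permission`."""
    exposed = []
    for instance in instances:
        for account in instance.get("serviceAccounts", []):
            roles = roles_by_account.get(account["email"], [])
            has_permission = any(
                permission in permissions_by_role.get(role, ()) for role in roles
            )
            has_scope = any(
                scope in STORAGE_READ_SCOPES for scope in account.get("scopes", [])
            )
            if has_permission and has_scope:
                exposed.append(instance["name"])
                break  # one qualifying service account is enough
    return exposed


# Hypothetical data mirroring the walkthrough.
SA = "sa-two@demo-project.iam.gserviceaccount.com"
instances = [
    {"name": "debian10", "serviceAccounts": [
        {"email": SA, "scopes": ["https://www.googleapis.com/auth/logging.write"]}]},
    {"name": "debian11", "serviceAccounts": [
        {"email": SA, "scopes": ["https://www.googleapis.com/auth/cloud-platform"]}]},
]
roles_by_account = {SA: ["roles/firebase.viewer"]}
permissions_by_role = {"roles/firebase.viewer": {"storage.objects.get"}}

print(exposed_instances(instances, roles_by_account, permissions_by_role))
```

Both conditions must hold at once: the IAM role decides what the service account may do, while the access scope limits what a token issued on that instance may be used for.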

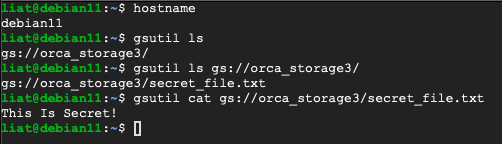

Let’s try to read the storage data from the instance ‘debian11’:
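One way to perform this read from inside the instance, shown as a hedged sketch rather than the command used in the original post: fetch an access token for the attached service account from the metadata server, then request the object through the Cloud Storage JSON API. The object name is hypothetical and must be known in advance, because ‘storage.objects.get’ covers reading an object, not listing a bucket. `read_object` only works when run on a Compute Engine instance.

```python
import json
import urllib.parse
import urllib.request

METADATA_TOKEN_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/token"
)


def object_media_url(bucket, object_name):
    """Build the JSON API download URL; the object name must be URL-encoded."""
    encoded = urllib.parse.quote(object_name, safe="")
    return f"https://storage.googleapis.com/storage/v1/b/{bucket}/o/{encoded}?alt=media"


def read_object(bucket, object_name):
    """Download an object using the instance's service account token."""
    token_request = urllib.request.Request(
        METADATA_TOKEN_URL, headers={"Metadata-Flavor": "Google"}
    )
    with urllib.request.urlopen(token_request, timeout=5) as response:
        token = json.load(response)["access_token"]
    data_request = urllib.request.Request(
        object_media_url(bucket, object_name),
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(data_request, timeout=30) as response:
        return response.read()
```

For example, `read_object("orca_storage3", "dir/secret.txt")` would return the object’s bytes if such an object existed; the path is a placeholder.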

Conclusion

As shown in the example above, we did not have to compromise the user [email protected] to expose the data in this bucket.

This storage bucket enumeration process can be tedious, especially when you have dozens of assets in your Google Cloud environment. However, using the tool I wrote, Google Cloud Platform Storage Explorer, all the work can be automated.

The Google Cloud Platform Storage Explorer tool crawls through all of the projects available to your Google Cloud account (based on your token’s access level) and detects which Compute Engine instances have access to all storage data within their project’s scope. It enumerates the privileges assigned directly on the bucket as well as those granted indirectly via inheritance. You can run it with an instance’s token or provide your user credentials in the script. Examine the results to spot privileges that aren’t needed and remove them quickly.