We’re excited to announce that the Orca Research Pod has launched AI Goat, the first open source, hands-on AI security learning environment based on the OWASP Machine Learning Security Top 10. Orca’s AI Goat, provided as an open source tool on the Orca Research GitHub repository, is an intentionally vulnerable AI environment built in Terraform that includes numerous threats and vulnerabilities for testing and learning purposes.

The learning environment was created to help security professionals and pentesters understand how AI-specific vulnerabilities from the OWASP Machine Learning Security Top 10 can be exploited, and how organizations can best defend against these types of attacks.

“Orca’s AI Goat is a valuable resource for AI engineers and security teams to learn more about the potentially dangerous misconfigurations and vulnerabilities that can exist when deploying AI models,” said Shain Singh, one of the OWASP ML Security Top 10 project leaders. “By using AI Goat, organizations can enhance their understanding of AI risks and the different ways attackers can leverage these weaknesses. This enables them to be much more effective in preventing AI attacks.”

“Our research shows that as security teams anticipate the adoption of AI and genAI to supercharge productivity and support business growth, they are looking for effective ways to ensure secure AI usage. Orca’s AI Goat makes an important contribution to the community to help organizations gain hands-on experience to better understand possible threats and methods of attacking AI models so they can mitigate security risk and defend against possible attacks.”

Melinda Marks, Practice Director, Cybersecurity at Enterprise Strategy Group

In this blog, we’ll explain more about Orca’s AI Goat environment, how to deploy it, and the different missions to complete as part of the learning experience.

About the AI Goat environment

AI Goat is built on AWS, with its AI models running on Amazon SageMaker. Deploying the environment exposes the user interface of an online shop selling soft toys, with different machine learning models embedded in the store’s features. The application includes intentional misconfigurations, vulnerabilities, and security issues so security practitioners and developers can increase their understanding of how attackers can take advantage of these weaknesses. Currently, there are three missions to complete, each exploiting weaknesses in the store’s AI features.

What are the OWASP Top 10 ML risks?

AI Goat is built around the OWASP Machine Learning Security Top 10, which identifies the following risks:

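- Input Manipulation Attack: An attacker deliberately alters a model’s input, for example by adding small perturbations to an image or by injecting malicious prompts into a Large Language Model (LLM), to trick the model into misclassifying the input or performing unintended actions, such as leaking sensitive data or spreading misinformation.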

- Data Poisoning Attack: An attacker intentionally corrupts the data used to train a machine learning model in order to reduce the accuracy of its output or to steer its behavior.

- Model Inversion Attack: When an attacker reconstructs sensitive information or original training data from a model’s outputs.

- Membership Inference Attack: When an attacker queries a model and analyzes its outputs to infer whether a specific record was part of its training data, exposing sensitive information about the individuals or entities in that dataset.

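- Model Theft: Gaining unauthorized access to a model’s parameters, or replicating the model’s functionality, for example by repeatedly querying it and training a copy on its responses.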

- AI Supply Chain Attacks: Modifying or replacing machine learning libraries, models, or associated data in the AI supply chain, compromising the integrity of the AI system.

- Transfer Learning Attack: When an attacker trains a model on one task and then fine-tunes it on another task to cause it to behave in an undesirable way.

- Model Skewing: When an attacker alters the distribution of training data, causing the model to produce biased or unreliable outcomes.

- Output Integrity Attack: An attacker manipulates the output of a machine learning model to change its behavior or cause harm to systems relying on those outputs.

- Model Poisoning: When an attacker directly manipulates an AI model’s parameters to introduce vulnerabilities, biases, or backdoors that could compromise the model’s security, effectiveness, or ethical behavior.
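
To make the first risk in the list more concrete, here is a minimal sketch of an input manipulation (adversarial example) attack against a toy logistic-regression classifier, using the Fast Gradient Sign Method (FGSM). The weights, bias, and input are made up for illustration and are not taken from AI Goat; the point is only that a small, targeted change to every input feature can flip the model’s decision.

```python
import math

# Hypothetical toy model: weights and bias are invented for illustration, not taken from AI Goat.
WEIGHTS = [2.0, -3.0, 1.5]
BIAS = -0.5


def predict_proba(x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(x, true_label, epsilon):
    """Fast Gradient Sign Method: move every feature by epsilon in the direction that increases the loss."""
    p = predict_proba(x)
    # Gradient of binary cross-entropy with respect to input feature i is (p - y) * w_i
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [1.0, 0.2, 0.5]
x_adv = fgsm(x, true_label=1, epsilon=0.4)
print(f"original:  {x} -> P(class 1) = {predict_proba(x):.3f}")
print(f"perturbed: {[round(v, 3) for v in x_adv]} -> P(class 1) = {predict_proba(x_adv):.3f}")
```

With these toy values, the probability of class 1 drops from roughly 0.84 to roughly 0.28, so the predicted class flips even though no feature moved by more than 0.4. Real attacks on image or text models follow the same idea with the gradient computed by the model’s framework.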

Getting started with AI Goat

Deploying AI Goat is straightforward and fully automated using Terraform on AWS infrastructure. This approach ensures that you can quickly set up the environment and start exploring the vulnerabilities and how they can be leveraged.

Prerequisites

- An AWS Account with administrative privileges

- An AWS access key ID and secret access key for that account

Automated Deployment

To deploy AI Goat automatically, follow these steps:

- Fork the repository: Begin by forking the AI Goat repository to your own GitHub account.

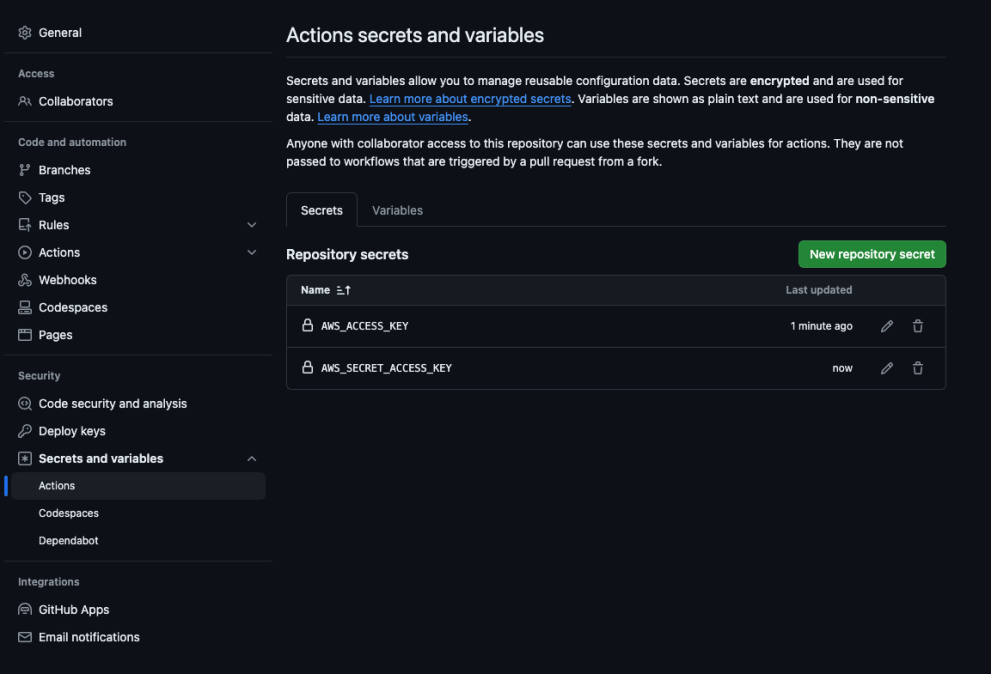

- Configure GitHub secrets: In your forked repository, add your AWS credentials as repository secrets (Settings > Secrets and variables > Actions). The required secrets are AWS_ACCESS_KEY and AWS_SECRET_ACCESS_KEY.

- Run the Terraform Apply workflow: Navigate to the Actions tab in your forked repository (GitHub may ask you to enable workflows on a fork first) and run the “Terraform Apply” workflow. This workflow automatically deploys the entire infrastructure.

- Access the application: Once the deployment is complete, find the application URL in the Terraform outputs printed at the end of the workflow run, and start hacking.
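
If you deploy from a local checkout (the manual option described below) rather than through GitHub Actions, the same Terraform outputs can be read programmatically. The following is a minimal Python sketch, assuming the terraform CLI is installed and the working directory holds the AI Goat state; it runs `terraform output -json` and flattens the result. Because this post does not name the output that holds the URL, the helper simply returns every string output that looks like an HTTP(S) URL, and the output names in the sample are hypothetical.

```python
import json
import subprocess


def parse_outputs(raw_json):
    """Flatten `terraform output -json` ({name: {"value": ..., ...}}) into {name: value}."""
    return {name: meta["value"] for name, meta in json.loads(raw_json).items()}


def read_terraform_outputs(workdir):
    """Run `terraform output -json` in a local Terraform working directory (requires the terraform CLI)."""
    result = subprocess.run(
        ["terraform", "output", "-json"],
        cwd=workdir,
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_outputs(result.stdout)


def find_urls(outputs):
    """Keep only string outputs that look like HTTP(S) URLs; the real output name is not assumed."""
    return {
        name: value
        for name, value in outputs.items()
        if isinstance(value, str) and value.startswith(("http://", "https://"))
    }


if __name__ == "__main__":
    # Hypothetical sample: these output names and values are illustrative, not AI Goat's real outputs.
    sample = json.dumps({
        "app_url": {"sensitive": False, "type": "string", "value": "http://example.com"},
        "bucket_name": {"sensitive": False, "type": "string", "value": "example-bucket"},
    })
    print(find_urls(parse_outputs(sample)))
```

Against a real local deployment you would call something like find_urls(read_terraform_outputs("AIGoat/terraform")) after terraform apply finishes.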

Alternatively, you can install the application manually by cloning the repository, configuring AWS credentials, and deploying with Terraform:

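```shell
# Clone the AI Goat repository
git clone https://github.com/orcasecurity-research/AIGoat

# Configure the AWS credentials Terraform will use
aws configure

# Move into the Terraform directory of the cloned repository
cd AIGoat/terraform

# Initialize providers, then deploy without an interactive approval prompt
terraform init
terraform apply -auto-approve
```

Exploring AI Goat Vulnerabilities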

AI Goat features a toy store application with several AI features, highlighting different vulnerabilities from the OWASP Machine Learning Security Top 10 risks. Users can explore scenarios such as an AI Supply Chain Attack, a Data Poisoning Attack, and an Output Integrity Attack, gaining hands-on experience in understanding and mitigating these risks.

We recommend not browsing the repository files, as they contain spoilers.

Mission 1: AI Supply Chain Attack

- Scenario: Product search page allows image uploads to find similar products.

- Goal: Exploit the product search functionality to read sensitive files on the hosted endpoint’s virtual machine.
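
Without giving away the mission, a widely documented AI supply chain risk in Python ML stacks is loading model artifacts serialized with pickle from an untrusted source, because unpickling can call arbitrary functions. The sketch below is a general illustration, not necessarily the weakness used in AI Goat: a hypothetical MaliciousModel object runs a harmless record() function (standing in for a real payload) when loaded, and a restricted unpickler following the allow-list pattern in the Python pickle documentation blocks it.

```python
import io
import pickle

EXECUTED = []


def record(message):
    """Harmless stand-in for an attacker's payload (a real one could read files or open a shell)."""
    EXECUTED.append(message)
    return message


class MaliciousModel:
    """Hypothetical 'model' object whose pickle stream calls record() when it is loaded."""

    def __reduce__(self):
        # pickle stores "call record('payload ran')" instead of the object's state
        return (record, ("payload ran",))


class RestrictedUnpickler(pickle.Unpickler):
    """Only resolve globals from a small allow-list; everything else is rejected."""

    ALLOWED = {("builtins", "set"), ("builtins", "frozenset")}

    def find_class(self, module, name):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def safe_loads(data):
    """Deserialize bytes with the restricted unpickler."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


payload = pickle.dumps(MaliciousModel())

pickle.loads(payload)  # unsafe: record() runs as a side effect of loading
print(EXECUTED)

try:
    safe_loads(payload)
except pickle.UnpicklingError as exc:
    print("blocked:", exc)
```

An allow-list only narrows the attack surface; the more robust option is to avoid unpickling untrusted artifacts at all and to verify the provenance of models and libraries before loading them.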

Mission 2: Data Poisoning Attack

- Scenario: Custom product recommendations per user on the shopping cart page.

- Goal: Manipulate the AI model to recommend the Orca stuffed toy.
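
As a generic illustration of data poisoning (not the AI Goat recommendation model, whose internals this post does not describe), the sketch below "trains" a toy co-occurrence recommender from purchase histories and shows how a handful of injected fake carts can push a target product to the top of the recommendations. The product names and purchase data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def train_cooccurrence(carts):
    """'Train' a toy recommender by counting how often two products share a cart."""
    counts = Counter()
    for cart in carts:
        for a, b in combinations(sorted(set(cart)), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def recommend(counts, cart, k=1):
    """Recommend the k products that co-occur most often with items already in the cart."""
    in_cart = set(cart)
    scores = Counter()
    for (a, b), n in counts.items():
        if a in in_cart and b not in in_cart:
            scores[b] += n
    return [product for product, _ in scores.most_common(k)]


# Hypothetical purchase history; product names are illustrative only.
clean_carts = [["bear", "bunny"], ["bear", "bunny"], ["bear", "panda"], ["dog", "cat"]]

# Poisoning: the attacker submits a few fake carts pairing a popular item with the target product.
poisoned_carts = clean_carts + [["bear", "orca"]] * 3

print(recommend(train_cooccurrence(clean_carts), ["bear"]))     # ['bunny']
print(recommend(train_cooccurrence(poisoned_carts), ["bear"]))  # ['orca']
```

The model code never changes; only the training data does, which is why validating where training data comes from matters as much as securing the model itself.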

Mission 3: Output Integrity Attack

- Scenario: Content and spam filtering AI system for product page comments.

- Goal: Bypass the filtering AI to post the forbidden comment “pwned” on the Orca stuffed toy product page.
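
In an output integrity attack, a model’s output is tampered with before the systems that rely on it act on it. Without spoiling the mission, one general mitigation is for the model service to authenticate its output so downstream services can detect tampering. The sketch below assumes a hypothetical shared secret and JSON message format between a filtering service and a comments service, and uses HMAC-SHA256 from Python’s standard library; it is not a description of how AI Goat is built.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def sign_verdict(comment, verdict, key=SECRET_KEY):
    """Filter service: attach an HMAC-SHA256 tag covering both the comment and the model's verdict."""
    payload = json.dumps({"comment": comment, "verdict": verdict}, sort_keys=True)
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_verdict(message, key=SECRET_KEY):
    """Comments service: recompute the tag and reject any message whose payload was altered."""
    expected = hmac.new(key, message["payload"].encode("utf-8"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("model output failed integrity check")
    return json.loads(message["payload"])


msg = sign_verdict("pwned", "reject")
print(verify_verdict(msg)["verdict"])

# An attacker who intercepts the response and flips the verdict cannot produce a valid tag.
tampered = dict(msg, payload=msg["payload"].replace('"reject"', '"accept"'))
try:
    verify_verdict(tampered)
except ValueError as exc:
    print("rejected:", exc)
```

Signing protects the verdict only after the model produces it; it does not help if the filtering model itself is fooled by a crafted input, so it complements rather than replaces input-side defenses.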

Important: AI Goat deliberately creates a vulnerable environment in AWS. To reduce the risk of compromise, deploy it in an isolated AWS account that is not connected to production or other important environments. We also recommend deleting AI Goat when you are done, using Terraform Destroy.

AI Goat demonstration at DEF CON

Orca is showcasing AI Goat at DEF CON Arsenal on August 9th, between 11:00 am and 1:00 pm. This Arsenal session will demonstrate how to deploy AI Goat and explore its vulnerabilities, and will show participants how these weaknesses can be exploited. Attendees will engage hands-on with the tool, gaining practical experience in AI security. Deployment scripts will be open-source and available after the session.

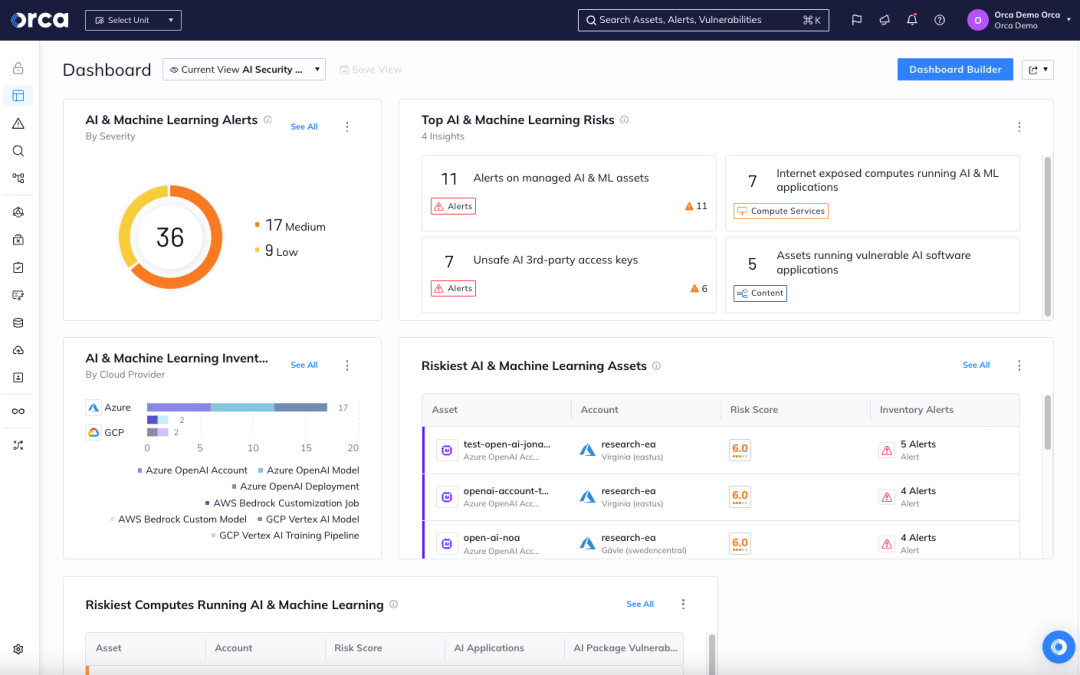

AI Security Posture Management

To help organizations ensure the security and integrity of their AI models, Orca provides AI Security Posture Management capabilities. Security starts with visibility. With Orca, organizations get a complete view of all AI models that are deployed in their environment—both managed and unmanaged, including any shadow AI.

In addition, Orca continuously monitors and assesses your AI models, alerting you to any risks, such as vulnerabilities, exposed data and keys, and overly permissive identities. Automated and guided remediation options are available to quickly fix any identified issues. If you would like to learn more about how to secure your AI models, schedule a 1:1 demo with one of our experts.

About the Orca Research Pod

The Orca Research Pod is a group of cloud security researchers that discovers and analyzes cloud risks and vulnerabilities to strengthen the Orca platform and promote cloud security best practices. To date, the Orca research team has discovered and helped resolve 25+ vulnerabilities in cloud provider platforms to help support a safe and secure cloud infrastructure. The Research Pod also creates and maintains several open source projects on GitHub, helping developers and security teams make the cloud a safer place for everyone.

The Orca Cloud Security Platform

Orca’s agentless-first Cloud Security Platform connects to your environment in minutes and provides 100% visibility of all your assets on AWS, Azure, Google Cloud, Kubernetes, and more. Orca detects, prioritizes, and helps remediate cloud risks across every layer of your cloud estate, including vulnerabilities, malware, misconfigurations, lateral movement risk, API risks, AI risks, sensitive data at risk, weak and leaked passwords, and overly permissive identities.