As the eerie fog of Halloween rolls in, beware—cyber ghouls are lurking, ready to cast wicked hexes on your AI in the cloud. Here are six sinister tactics they can use to haunt your systems and turn your AI projects into a digital nightmare.

This Halloween season, Orca Security’s 2024 State of AI Security Report has uncovered some truly haunting statistics about AI security risks. Based on its findings, here are six dark tricks that attackers have up their sleeves, and they might already be using them against your organization.

1. Beware the curse of default settings

Like an unlocked door on a dark night, default configurations invite trouble. Our research shows organizations are rushing to adopt cloud services without changing critical security settings, leaving their environments exposed to potential threats. We found that 98% of organizations have left their SageMaker notebook instances with root access enabled, while 45% of SageMaker buckets use predictable default names.

These seemingly innocent oversights leave digital footprints that lead straight to your data. With root access enabled, an attacker who gets into a notebook instance holds administrator privileges on it. Predictable bucket names act like a glowing sign pointing attackers directly to your potentially sensitive data.
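Both defaults can be checked mechanically. The sketch below is a minimal illustration, not Orca's detection logic. It assumes each notebook is a dict shaped like the response of the AWS SDK's `describe_notebook_instance` call, with a `RootAccess` field, and that default buckets follow the `sagemaker-<region>-<account id>` naming pattern. The function names are hypothetical.

```python
import re

# Assumed default bucket pattern: sagemaker-<region>-<12-digit account id>.
DEFAULT_BUCKET_RE = re.compile(r"^sagemaker-[a-z]{2}(?:-[a-z]+)+-\d-\d{12}$")


def notebook_findings(notebook: dict) -> list[str]:
    """Return findings for one notebook description (hypothetical helper)."""
    findings = []
    # Assumption: a missing RootAccess field is treated as the "Enabled" default.
    if notebook.get("RootAccess", "Enabled") == "Enabled":
        findings.append("root access enabled")
    return findings


def is_default_bucket_name(name: str) -> bool:
    """True when the bucket name matches the assumed default pattern."""
    return bool(DEFAULT_BUCKET_RE.match(name))
```

In practice the notebook dicts would come from your cloud inventory or SDK calls, and the bucket check would run over every bucket name in the account.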

2. Stalk the shadows of private endpoint gaps

Your AI cloud services might not be as protected as you think. The truth is that 27% of Azure OpenAI accounts are exposed to the public internet without private endpoints.

Each unsecured connection creates another entry point for lurking threats. Without private endpoints, organizations leave their data transmissions exposed to potential interception, manipulation, and theft. These unsecured pathways give attackers the perfect opportunity to slip undetected into your cloud environment.
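As a rough illustration of how such a gap could be spotted, the sketch below inspects an Azure OpenAI account description. It assumes the account is a dict shaped like the ARM resource JSON for a Cognitive Services account, with `publicNetworkAccess` and `privateEndpointConnections` under `properties`. Verify those field names against your own API output. The sketch also ignores firewall rules, which can restrict public access further.

```python
def azure_openai_exposure(account: dict) -> list[str]:
    """Return exposure findings for one account description (hypothetical helper)."""
    props = account.get("properties") or {}
    findings = []

    # Assumption: a missing publicNetworkAccess value is treated as "Enabled".
    if props.get("publicNetworkAccess", "Enabled") == "Enabled":
        findings.append("public network access enabled")

    # Count only private endpoint connections that have been approved.
    approved = []
    for conn in props.get("privateEndpointConnections") or []:
        state = (conn.get("properties") or {}).get(
            "privateLinkServiceConnectionState"
        ) or {}
        if state.get("status") == "Approved":
            approved.append(conn)
    if not approved:
        findings.append("no approved private endpoint connection")

    return findings
```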

3. Hunting exposed keys in commit graveyards

In the shadowy corners of code repositories, exposed API keys await discovery. Our research has revealed an alarming pattern: organizations are unknowingly exposing their AI access keys through public repositories and insecure storage practices.

Our findings show:

- 35% of organizations using Hugging Face have an exposed Hugging Face access key

- 20% of organizations using OpenAI have an exposed OpenAI access key

- 13% of organizations using Anthropic have an exposed Anthropic access key

Even spookier is where these keys are found:

- 81% of exposed OpenAI keys are hidden in repository commit history

- 77% of exposed Hugging Face keys haunt old commits

Most organizations remain unaware of these security specters haunting their infrastructure, leaving attackers free to access their services, steal sensitive data, and potentially compromise their entire cloud environment.
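Because a key deleted in a later commit still lives on in history, a scan has to cover old patches as well as the current files. The sketch below is a minimal illustration that searches text for key-like strings. The patterns rely on the commonly seen prefixes (`sk-` for OpenAI, `sk-ant-` for Anthropic, `hf_` for Hugging Face), and the minimum lengths are assumptions, so expect both false positives and misses.

```python
import re

# Approximate patterns: the prefixes are widely used, the lengths are assumed.
KEY_PATTERNS = {
    "anthropic": re.compile(r"\bsk-ant-[A-Za-z0-9_-]{20,}"),
    "openai": re.compile(r"\bsk-(?!ant-)[A-Za-z0-9_-]{20,}"),
    "huggingface": re.compile(r"\bhf_[A-Za-z0-9]{30,}"),
}


def find_exposed_keys(history_text: str) -> list[tuple[int, str, str]]:
    """Return (line number, provider, redacted preview) for each key-like match."""
    hits = []
    for lineno, line in enumerate(history_text.splitlines(), start=1):
        for provider, pattern in KEY_PATTERNS.items():
            for match in pattern.finditer(line):
                # Keep only a short preview so the scanner never re-leaks the key.
                hits.append((lineno, provider, match.group()[:8] + "..."))
    return hits
```

The text passed in could be the output of `git log -p --all`, which includes every patch in the repository's history. Any key found this way should be revoked and rotated, not just removed from the code.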

4. Hexing package vulnerabilities

Within seemingly secure systems, dangers lurk. The disturbing reality is that 62% of organizations are running AI packages with known vulnerabilities. These vulnerabilities have an average CVSSv3 score of 6.9, and while only 0.2% of them have public exploits, this is still cause for concern.

These security gaps silently compromise systems from within, turning trusted environments into potential security nightmares. Even low-risk vulnerabilities can become critical when they’re part of a larger attack chain.
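One simple way to catch vulnerable packages early is to compare pinned versions against a list of fixed versions. The sketch below is only an illustration: the package names and versions in `ADVISORIES` are hypothetical placeholders, and the version parser handles plain dotted numbers only. A dedicated scanner such as pip-audit is the better choice for real use.

```python
# Hypothetical advisory data: package name -> first version containing the fix.
ADVISORIES = {
    "example-ml-lib": "2.4.1",
    "example-tokenizer": "0.9.0",
}


def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted version such as '2.4.1' (no pre-release tags)."""
    return tuple(int(part) for part in version.split("."))


def vulnerable_pins(requirements: str, advisories: dict[str, str]) -> list[str]:
    """Return pinned requirements that are older than the fixed version."""
    findings = []
    for raw in requirements.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # only exact pins are checked in this sketch
        name, version = (part.strip() for part in line.split("==", 1))
        fixed = advisories.get(name.lower())
        if fixed and parse_version(version) < parse_version(fixed):
            findings.append(f"{name}=={version} (fixed in {fixed})")
    return findings
```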

5. Searching the vaults of unencrypted data

Data encryption should be your first line of defense, yet our research has uncovered a graveyard of cloud assets that are not encrypted at rest with self-managed keys. The following statistics should send a chill down any security professional’s spine:

- 99% of Amazon SageMaker notebooks are not configured for encryption at rest with self-managed keys

- 98% of Azure OpenAI accounts are not configured for encryption at rest with self-managed keys

- 98% of Google Vertex AI models are not configured for encryption at rest with self-managed keys

- 100% of Google Vertex AI training pipelines are not configured for encryption at rest with self-managed keys

While our analysis didn’t confirm whether organizational data was encrypted via other methods, choosing not to encrypt with self-managed keys raises the potential for attackers to exploit exposed data in the shadows.
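Whether a resource uses a self-managed key can usually be read from its description. The sketch below is a rough illustration across the three clouds. The field names (`KmsKeyId` for SageMaker notebooks, `properties.encryption.keySource` for Azure OpenAI accounts, `encryptionSpec.kmsKeyName` for Vertex AI resources) are assumptions based on each provider's resource format and should be verified against real API output. The `kind` labels are hypothetical.

```python
def uses_self_managed_key(kind: str, resource: dict) -> bool:
    """Return True when the resource description references a self-managed key."""
    if kind == "sagemaker_notebook":
        # Assumed: KmsKeyId is present only when a customer key was configured.
        return bool(resource.get("KmsKeyId"))
    if kind == "azure_openai_account":
        encryption = (resource.get("properties") or {}).get("encryption") or {}
        # Assumed: "Microsoft.KeyVault" marks a key held in the customer's vault.
        return encryption.get("keySource") == "Microsoft.KeyVault"
    if kind in ("vertex_model", "vertex_training_pipeline"):
        spec = resource.get("encryptionSpec") or {}
        return bool(spec.get("kmsKeyName"))
    raise ValueError(f"unknown resource kind: {kind}")
```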

6. Evading the outdated security ghosts

Perhaps most unsettling of all is how many organizations rely on outdated security measures to protect their modern cloud environments: 77% of organizations using Amazon SageMaker haven’t configured IMDSv2 for their notebook instances. This leaves them vulnerable to well-known attack vectors, such as server-side request forgery against the older IMDSv1 metadata endpoint, that modern security practices are already designed to prevent.
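Checking for this setting is straightforward. The sketch below assumes each notebook is a dict shaped like the AWS SDK's `describe_notebook_instance` response, where `InstanceMetadataServiceConfiguration.MinimumInstanceMetadataServiceVersion` is `"2"` once IMDSv2 is enforced. The helper names are hypothetical, and the second function only builds request parameters. It does not call AWS.

```python
def enforces_imdsv2(notebook: dict) -> bool:
    """True when the notebook description requires IMDSv2."""
    config = notebook.get("InstanceMetadataServiceConfiguration") or {}
    # Assumption: a missing value means the older IMDSv1 is still accepted.
    return config.get("MinimumInstanceMetadataServiceVersion") == "2"


def imdsv2_update_request(notebook_name: str) -> dict:
    """Build parameters that could be passed to an update-notebook call."""
    return {
        "NotebookInstanceName": notebook_name,
        "InstanceMetadataServiceConfiguration": {
            "MinimumInstanceMetadataServiceVersion": "2"
        },
    }
```

Test the change on a non-production notebook first, since code that depends on IMDSv1 may stop working once IMDSv2 is required.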

Don’t let security threats haunt your organization

This isn’t a spooky bedtime story – our State of AI Security Report confirms these threats are real. At this moment, attackers could be exploiting default settings to gain unauthorized access, collecting exposed API keys from forgotten repositories, intercepting unencrypted data, leveraging known package vulnerabilities, and circumventing outdated security measures.

However, since today is Halloween, the eve when we ward off ghosts, we have compiled some resources to help you protect against the AI cyber ghouls:

- OWASP Top 10 ML risks

- Blog: Five critical AI security risks and how to prevent them

- Webinar: Top AI Risks of 2024 in Cloud Services: An Expert Deep Dive

- Report: 2024 State of AI Security Report

- Hands-on AI Security: Orca’s AI Goat

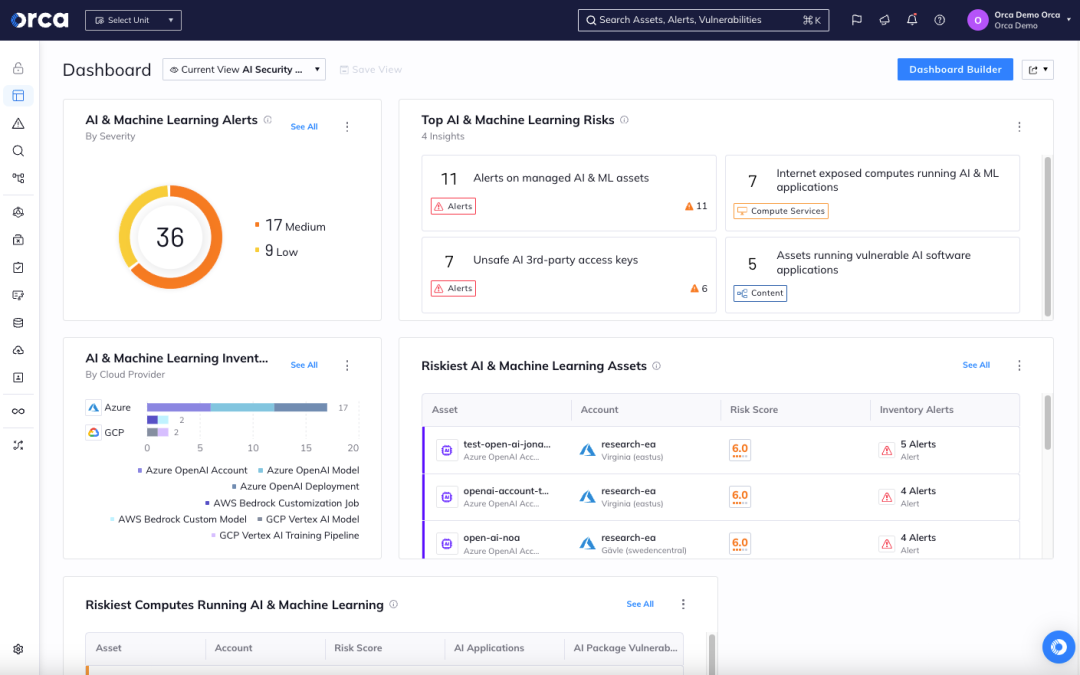

AI Security Posture Management

To help organizations ensure the security and integrity of their AI models, Orca provides AI Security Posture Management capabilities. Security starts with visibility. With Orca, organizations get a complete view of all AI models that are deployed in their environment—both managed and unmanaged, including any shadow AI.

In addition, Orca continuously monitors and assesses your AI models, alerting you to any risks, such as vulnerabilities, exposed data and keys, and overly permissive identities. Automated and guided remediation options are available to quickly fix any identified issues. If you would like to learn more about how to secure your AI models, schedule a 1:1 demo with one of our experts.

The Orca Cloud Security Platform

Orca’s agentless-first Cloud Security Platform connects to your environment in minutes and provides 100% visibility of all your assets on AWS, Azure, Google Cloud, Kubernetes, and more. Orca detects, prioritizes, and helps remediate cloud risks across every layer of your cloud estate, including vulnerabilities, malware, misconfigurations, lateral movement risk, API risks, AI risks, sensitive data at risk, weak and leaked passwords, and overly permissive identities.