For those of you who don’t know me, I am the Chief Information Security Officer (CISO) at Orca Security. I’m responsible for implementing our information security program here at Orca: I oversee incident response, conduct security audits, ensure regulatory compliance, and manage our security, GRC, and IT teams. Like many other CISOs, I currently have AI security top of mind. Because Orca is at the forefront of AI innovation, I am acutely aware of AI’s double-edged nature: while AI brings great benefits, it also presents unique challenges and risks that must be managed.

In this blog post, I want to share what we’ve been doing at Orca to keep our AI models secure. I hope this information is useful for other CISOs and cloud security practitioners who face the same challenge of securing rapidly expanding AI deployments.

AI models we leverage at Orca

In January 2023, Orca became the first cloud security platform to integrate with ChatGPT, using it to generate automated remediation instructions and code, and we have been at the forefront of AI innovation ever since. We’ve partnered with some of the most impactful AI services on the market, including Azure OpenAI, Google Vertex AI, and Amazon Bedrock, to offer our customers the best AI experience on our platform.

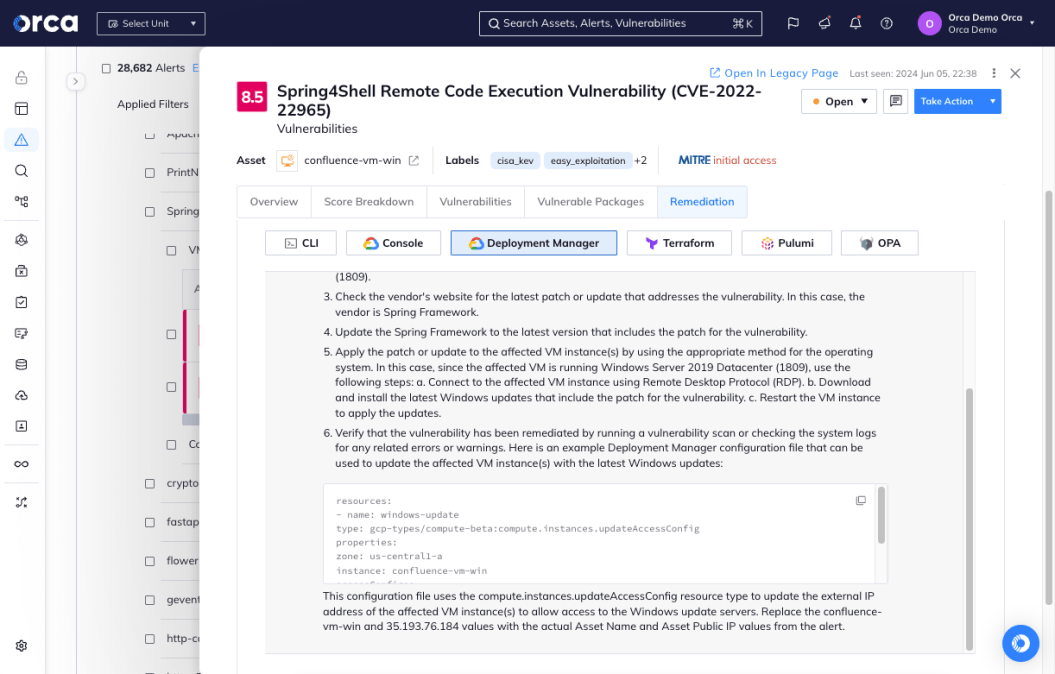

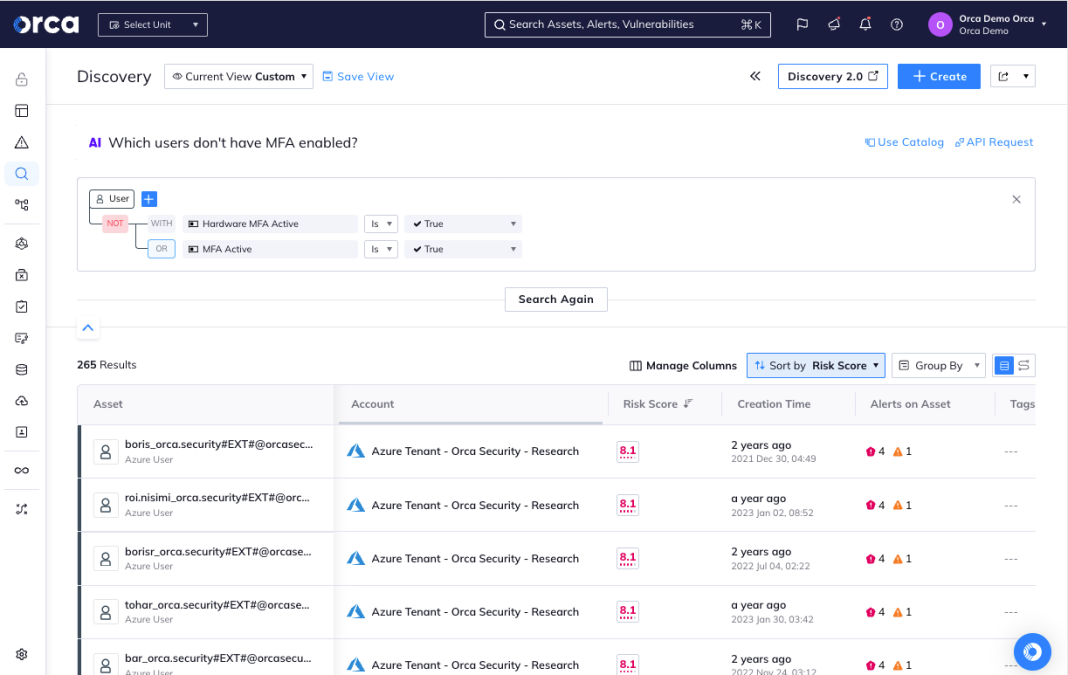

In September last year, we launched AI-powered search, which lets users easily understand exactly what’s in their cloud environments by asking plain-language questions such as “Do I have any Log4j vulnerabilities that are public facing?” or “Do I have any unencrypted databases with sensitive data exposed to the Internet?”

This year, we were also named a Google Cloud Generative AI partner and recognized on the first-ever CRN AI 100 list. With this much AI innovation at Orca, and as the CISO responsible for securing all Orca systems, I treat AI security as a top priority.

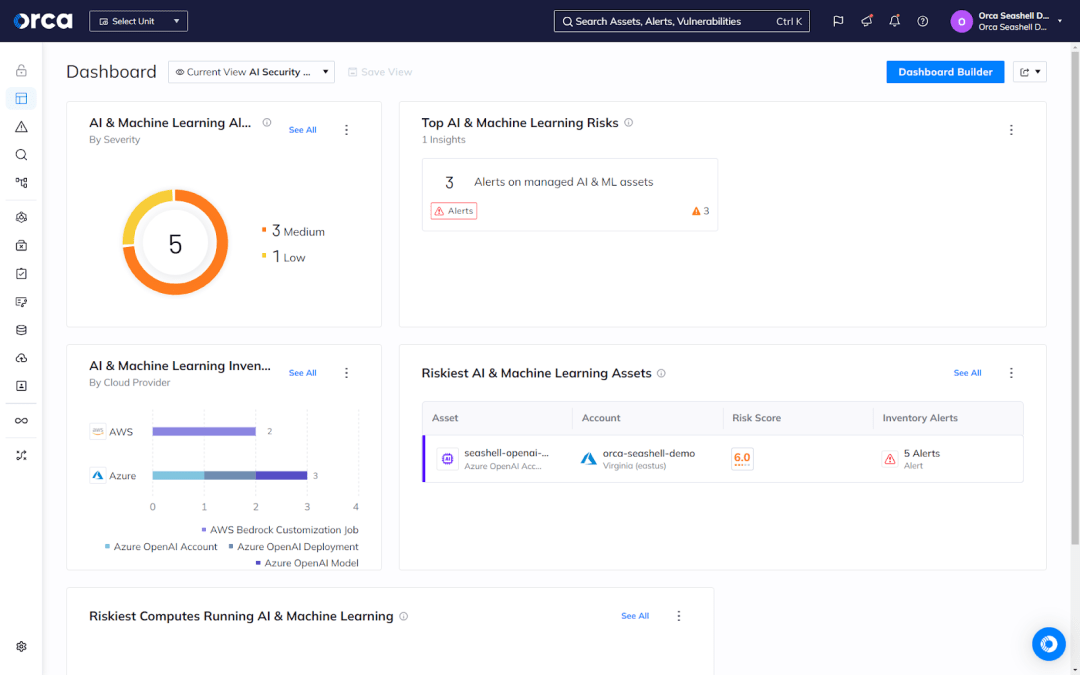

This is also why we recently added AI Security Posture Management (AI-SPM) to the Orca Platform: so that our customers (and we ourselves) can continuously monitor and secure AI deployments across cloud environments.

AI security risks

New technology comes with new terminology. Before I delve into how to secure your AI models, I want to first explain some of the risk terminology specific to AI:

- Prompt injection: An attacker crafts malicious input to a Large Language Model (LLM) to trick it into performing unintended actions, such as leaking sensitive data or spreading misinformation.

- Data poisoning: A bad actor intentionally corrupts the dataset used to train an AI model in order to reduce the accuracy of the model’s output.

- Model poisoning: An attacker manipulates an AI model directly (for example, its weights or the updates used to train it) to introduce vulnerabilities, biases, or backdoors that could compromise the model’s security, effectiveness, or ethical behavior.

- Model inversion: An attacker reconstructs sensitive information or original training data from a model’s outputs.

AI compliance regulations

In addition to existing regulations that also apply to AI, such as GDPR, PCI DSS, HIPAA, and other frameworks that govern data privacy and security, new AI-specific regulations are being introduced. The EU, the US, and other countries have started to issue rules on the safe and responsible use of AI, and we can expect these to be refined and expanded as AI adoption grows:

- The European Union AI Act is a legal framework aimed at fostering the responsible development and use of AI, while ensuring public trust and the protection of fundamental rights.

- The White House Blueprint for an AI Bill of Rights is not a law, but it offers guidance on deploying AI safely, including protecting data privacy, guarding against algorithmic discrimination, and giving people notice and explanation of automated decisions.

How we do AI security at Orca Security

At Orca, we manage AI security by following these strategies:

- Ensure data integrity and privacy: Data is the lifeblood of AI, and ensuring its integrity and privacy is paramount. We enforce strict access policies and follow robust data governance practices, including data encryption, anonymization, masking, and secure storage. We also verify the source and integrity of the data used to train our models.

- Secure the AI pipeline: First, we implement stringent access controls and authentication mechanisms so that only authorized personnel can access our AI systems and data. Second, we use the Orca Platform’s own shift-left security capabilities to detect and mitigate vulnerabilities and misconfigurations early in the CI/CD pipeline.

- Protect AI keys: AI access keys can mistakenly be left in source code. A bad actor could use these keys to access the AI model and make API requests, allowing them to consume allocated resources, access training files, and potentially exfiltrate data. With our AI-SPM capabilities, any exposed keys are detected so they can be promptly removed. This keeps our AI models and training data secure and protects against data breaches, tampering, and data poisoning.

- Monitor for anomalies and malicious behavior: Continuous monitoring of AI systems is vital for detecting and responding to possible malicious activity. We use Cloud Detection and Response from the Orca Platform, which leverages AI and machine learning to identify deviations from normal patterns and alert us to potential security incidents, enabling rapid response and mitigation. We also conduct regular audits and assessments to ensure compliance with security policies and standards.

- Employee awareness training: We train Orca employees on the proper use of approved AI tools and explain the unique risks associated with AI. We expressly warn employees not to share any sensitive or confidential information with AI models. We also foster collaboration between security teams, data scientists, and AI developers to ensure a holistic approach to AI security.
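To make the integrity check from the first strategy more concrete, here is a minimal Python sketch (an illustration, not Orca’s internal tooling) that compares training files against a manifest of SHA-256 hashes recorded when the data was approved. The JSON manifest format and the `verify_dataset` and `sha256_of_file` helpers are hypothetical. The manifest should live outside the data directory (otherwise it is reported as unexpected) and should itself be protected, for example by restricting who can change it.

```python
import hashlib
import json
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_dataset(data_dir: Path, manifest_path: Path) -> list[str]:
    """Check every file in data_dir against a hypothetical JSON manifest.

    Manifest format (assumed): {"relative/path.csv": "<sha256 hex digest>", ...}
    Returns a sorted list of problems; an empty list means the data matches.
    """
    manifest: dict[str, str] = json.loads(manifest_path.read_text(encoding="utf-8"))
    problems = []

    # Every approved file must exist and still have its recorded hash.
    for rel_path, expected in manifest.items():
        file_path = data_dir / rel_path
        if not file_path.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of_file(file_path) != expected:
            problems.append(f"hash mismatch: {rel_path}")

    # Files that were never approved could be injected (poisoned) data.
    for file_path in data_dir.rglob("*"):
        if file_path.is_file():
            rel_path = file_path.relative_to(data_dir).as_posix()
            if rel_path not in manifest:
                problems.append(f"unexpected: {rel_path}")

    return sorted(problems)
```

Any non-empty result should stop the training job until someone investigates: a changed hash or an unapproved file is exactly what tampering or data poisoning looks like at the file level.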

Considerations when implementing AI

Below is a practical checklist of important considerations when implementing AI models:

Internal vs. third-party managed LLMs (on-prem vs. SaaS)

When deciding whether to host LLMs internally (on-premises) or use a third-party SaaS offering, consider the following factors:

- Internal (on-prem):
  - Internal teams take on responsibilities including security, privacy, patching, maintenance, application security, and support.
  - Compliance with company policies and regulatory requirements must be ensured.
  - Robust monitoring and incident response strategies are needed.

- SaaS (third party):
  - There is less overhead, but a thorough risk assessment of the third-party provider must be conducted.
  - Evaluate the provider’s security practices, data handling procedures, and compliance with relevant standards.
  - Review and understand service level agreements (SLAs) and data processing agreements.

Assess training data

- Data sensitivity:
  - Check whether the training data includes customer or proprietary information.
  - Implement data management and control measures to prevent data leakage, unauthorized inference, and misuse.
  - Pay special attention to multi-tenant deployments to ensure data is isolated and protected.
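As a rough illustration of the data sensitivity check, the Python sketch below masks two common kinds of sensitive data (email addresses and payment card numbers) in training records before they are used. The patterns and the `mask_record` helper are assumptions for illustration; a Luhn checksum filters out digit runs that merely look like card numbers, but real sensitive-data detection needs far broader coverage than two regular expressions.

```python
import re

# Assumed, deliberately simple patterns; production detectors cover far more types.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(candidate: str) -> bool:
    """Return True if the digits in candidate pass the Luhn checksum."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    total = 0
    # Double every second digit, counting from the rightmost digit.
    for i, digit in enumerate(reversed(digits)):
        if i % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def mask_record(text: str) -> tuple[str, list[str]]:
    """Mask emails and Luhn-valid card numbers; return (masked_text, finding_types)."""
    findings: list[str] = []

    def mask_email(match: re.Match) -> str:
        findings.append("email")
        return "[EMAIL]"

    def mask_card(match: re.Match) -> str:
        if luhn_valid(match.group()):
            findings.append("card_number")
            return "[CARD]"
        return match.group()  # not a plausible card number, leave as-is

    masked = EMAIL_RE.sub(mask_email, text)
    masked = CARD_RE.sub(mask_card, masked)
    return masked, findings
```

Masking before data enters a training set matters because, as the model inversion risk above shows, information a model was trained on may later be reconstructed from its outputs.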

Trustworthiness of generic models trained with public data

- Bias and reliability:
  - Assess the potential for bias and misinformation, as well as the reliability of data sources.
  - Implement measures to mitigate the impact of biased or unreliable data on model performance and outcomes.
  - Regularly review and update training data to maintain accuracy and relevance.

Rigorous validation of prompts and responses

- Security principles:
  - Apply strict input validation to prevent injection attacks, data leaks, and other security vulnerabilities.
  - Implement application security best practices to safeguard the AI system from malicious inputs and outputs.
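The Python sketch below shows what layered validation of prompts and responses might look like. The length limit, phrase list, secret patterns, and the `check_prompt` and `check_response` helpers are hypothetical examples. Pattern matching alone cannot stop prompt injection, because attackers can simply rephrase, so treat checks like these as one layer of defense rather than a complete fix.

```python
import re
from dataclasses import dataclass

MAX_PROMPT_CHARS = 4000  # hypothetical limit; tune per application

# Illustrative phrases only: attackers can rephrase, so this is one layer, not a fix.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

# Credential-like content that should never appear in a model response.
SECRET_PATTERNS = [
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # AWS access key ID format
]


@dataclass
class Verdict:
    allowed: bool
    reason: str = ""


def check_prompt(prompt: str) -> Verdict:
    """Validate user input before it is sent to the model."""
    if len(prompt) > MAX_PROMPT_CHARS:
        return Verdict(False, "prompt too long")
    # Reject control characters (other than tab, CR, LF), which can hide payloads.
    if any(ord(ch) < 32 and ch not in "\t\r\n" for ch in prompt):
        return Verdict(False, "control characters")
    for pattern in INJECTION_PATTERNS:
        if pattern.search(prompt):
            return Verdict(False, "possible prompt injection")
    return Verdict(True)


def check_response(response: str, system_prompt: str) -> Verdict:
    """Validate model output before it reaches users or downstream systems."""
    if system_prompt and system_prompt in response:
        return Verdict(False, "system prompt leaked")
    for pattern in SECRET_PATTERNS:
        if pattern.search(response):
            return Verdict(False, "secret-like content")
    return Verdict(True)
```

Checking the response as well as the prompt matters because a successful injection shows up in what the model says, not only in what the user typed.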

Customer opt-in/opt-out mechanisms

- Transparency and trust:
  - Ensure customers have control over their participation in AI systems through clear opt-in/opt-out mechanisms.
  - Foster transparency and involve customers in the AI adoption process to build trust and confidence.
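One simple way to enforce opt-in on the data side is a default-deny filter, sketched below in Python. The `Record` type, the consent mapping, and the `filter_by_consent` helper are hypothetical; the key design choice is that a tenant with no recorded decision is treated as opted out.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Record:
    """Hypothetical unit of customer data that an AI feature might use."""
    tenant_id: str
    text: str


def filter_by_consent(records: Iterable[Record], consent: dict[str, bool]) -> list[Record]:
    """Keep only records from tenants that have explicitly opted in.

    Tenants absent from `consent` are treated as opted out (default deny),
    so new customers are excluded until they actively agree.
    """
    return [record for record in records if consent.get(record.tenant_id, False)]
```

Because opting out only requires setting the stored flag to `False`, the same check honors a customer who withdraws consent the next time the filter runs.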

Prioritize transparency and compliance

- Clear documentation:
  - Maintain comprehensive documentation addressing security, privacy, and compliance concerns.
  - Regularly update documentation to reflect changes in policies, practices, and regulatory requirements.

Implement AI security practices

- Fundamental security:
  - Conduct thorough threat modeling to identify potential risks and vulnerabilities.
  - Perform regular security design reviews to ensure robust protection against emerging threats.
  - Implement continuous monitoring and improvement processes to adapt to new security challenges.

- AI Security Posture Management (AI-SPM):
  - Get visibility into all AI models deployed in your environment, both managed and unmanaged, including any shadow AI.
  - Address AI misconfigurations, such as AI models open to the public, unencrypted data, and overly permissive access.
  - Automatically detect sensitive data in AI models or training data.
  - Detect and remove any exposed AI access keys.
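Exposed-key detection, one of the AI-SPM items above, can start as a pattern scan over source and config files, as in this Python sketch. The rules are assumptions based on commonly seen formats (the `AIza` prefix of Google API keys and the `sk-` prefix used by some AI providers), plus a generic `*_API_KEY = "..."` assignment rule; dedicated secret scanners and AI-SPM tools cover many more formats. The `scan_text` and `scan_repo` helpers are hypothetical, and they report only file, line, and rule name so the scanner never echoes the secret itself.

```python
import re
from pathlib import Path

# Assumed, illustrative rules; dedicated secret scanners ship much larger rule sets.
KEY_PATTERNS = {
    "sk_prefixed_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "google_api_key": re.compile(r"\bAIza[0-9A-Za-z_-]{35}"),
    "api_key_assignment": re.compile(
        r"(?i)\b[A-Z0-9_]*API_KEY\s*[:=]\s*['\"][^'\"]{16,}['\"]"
    ),
}

SCANNED_SUFFIXES = {".py", ".js", ".ts", ".json", ".yaml", ".yml", ".toml", ".ipynb"}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs; the matched secret is never returned."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in KEY_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule_name))
    return hits


def scan_repo(root: Path) -> dict[str, list[tuple[int, str]]]:
    """Scan source and config files (including .env files) under root."""
    findings = {}
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        # Dotfiles like ".env" have an empty suffix, so match them by name.
        if path.suffix in SCANNED_SUFFIXES or path.name.startswith(".env"):
            hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
            if hits:
                findings[path.relative_to(root).as_posix()] = hits
    return findings
```

Running a scan like this as a pre-commit hook or CI step catches keys before they are pushed. Any key that was already committed should be treated as exposed and revoked, since deleting it from the latest commit still leaves it in the repository history.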

Additional resources for AI security

A growing number of guidelines on securing AI are being published. Here are some of the most important ones to explore:

- MITRE has published ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems), a comprehensive knowledge base of adversary tactics and techniques against AI systems. This incredibly detailed framework can help CISOs and security practitioners understand how attackers discover an organization’s ML footprint and exploit it in attacks.

- OWASP now publishes the OWASP Top 10 for LLM Applications and the OWASP AI Security and Privacy Guide, both of which describe best practices in detail.

- A number of GRC guides and standards also cover AI, including the NIST AI Risk Management Framework (AI RMF), its Generative AI Profile (NIST AI 600-1), and ISO/IEC 42001.

Conclusion

As AI continues to evolve, so too must our approach to securing it. At Orca, we’re committed to staying ahead of the curve, employing a multi-faceted strategy that encompasses technology, processes, and people. By understanding the unique challenges posed by AI and implementing robust security measures, we ensure that our AI systems are not only powerful but also secure and trustworthy.

I hope this article gave you useful information on how to secure your organization’s AI models. I’d love to hear how you are securing your AI models and whether you have any further advice to add. Feel free to reach out to me on LinkedIn, and let’s continue to secure AI together.