Although it’s always preferable to prevent security incidents before they occur, sooner or later an attacker is likely to get past your initial defenses. It’s therefore also important to continuously monitor events and behaviors for any signs that an attacker has breached the environment.

Artificial Intelligence (AI) plays a crucial role in identifying when an attack is actively underway in your cloud environment. AI can process large volumes of data and logs and rapidly perform in-depth contextual analysis to determine which events are significantly anomalous and potentially harmful, and therefore likely to indicate malicious behavior. With this speed and intelligence, security teams can be alerted to potential attacks in progress almost immediately and respond quickly to prevent further damage.

To avoid excessive alerts and the alert fatigue they cause, it’s important that AI and other advanced algorithms take contextual factors into account and alert only when there is a high likelihood of malicious intent. There are often many such factors, and reviewing them manually would be difficult and labor-intensive. For example, as the recent supply chain risk discovered in Google Cloud Build showed, security teams need an automated way to be alerted to unauthorized or irregular usage of the Cloud Build service account. This is where AI-powered anomaly detection comes in.

In this blog post, we describe how the Orca Cloud Security Platform uses AI for advanced anomaly detection as part of its Cloud Detection and Response (CDR) capabilities.

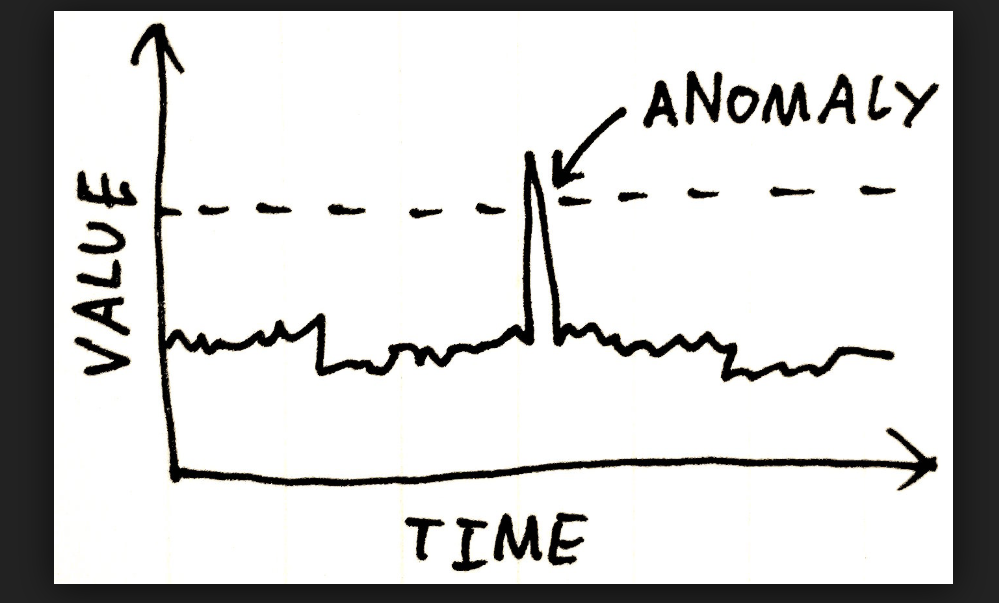

What Is Anomaly Detection?

Anomaly detection is an important method for identifying suspicious activities or behaviors that deviate from the norm, also called irregular behavior. In a cloud environment, where numerous users and applications interact, detecting anomalies can help identify potential security breaches, privilege escalation attempts, data exfiltration, or other malicious activities. It allows security teams to respond promptly and mitigate potential risks before they cause significant harm.

What Qualifies as “Irregular” Behavior?

Orca calculates the baseline of normal behavior from the mean number of actions over the last 14 days. This moving average is updated continuously, and activity that deviates significantly from it is considered irregular.
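To make the idea of a continuously updated 14-day baseline concrete, here is a minimal Python sketch. It assumes one action count per day, and the class name `MovingBaseline` and the `tolerance` ratio that decides what "significant" means are hypothetical choices for illustration, not Orca's implementation.

```python
from collections import deque


class MovingBaseline:
    """Hypothetical 14-day moving-average baseline over daily action counts."""

    def __init__(self, window_days=14, tolerance=3.0):
        # A deque with maxlen drops the oldest day automatically as new days arrive.
        self.counts = deque(maxlen=window_days)
        # Assumed rule: a day is irregular if it exceeds `tolerance` times the baseline.
        self.tolerance = tolerance

    def mean(self):
        return sum(self.counts) / len(self.counts) if self.counts else 0.0

    def observe(self, count):
        """Score one day's count against the current baseline, then update it."""
        warmed_up = len(self.counts) == self.counts.maxlen
        # Floor the baseline at 1 so a previously idle series can still be scored.
        irregular = warmed_up and count > max(self.mean(), 1.0) * self.tolerance
        self.counts.append(count)
        return irregular
```

For simplicity this sketch only flags upward spikes and stays silent until a full 14 days of history exist; a production baseline would also model drops in activity and use richer statistics than a single ratio.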

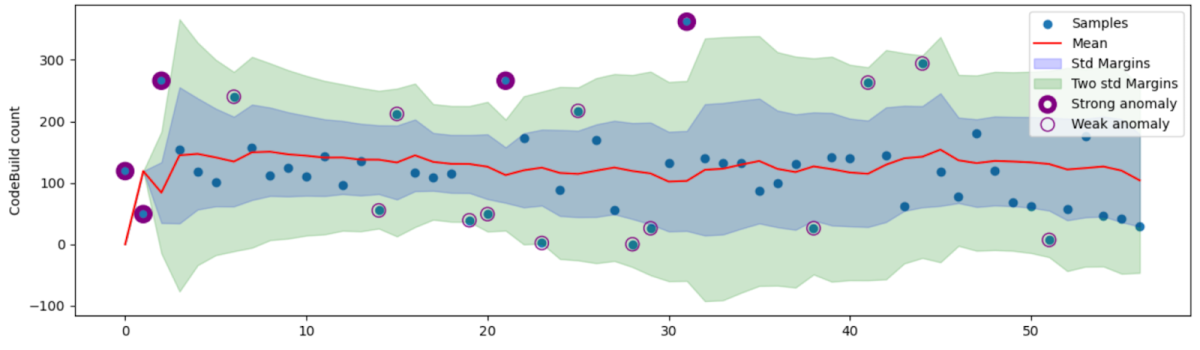

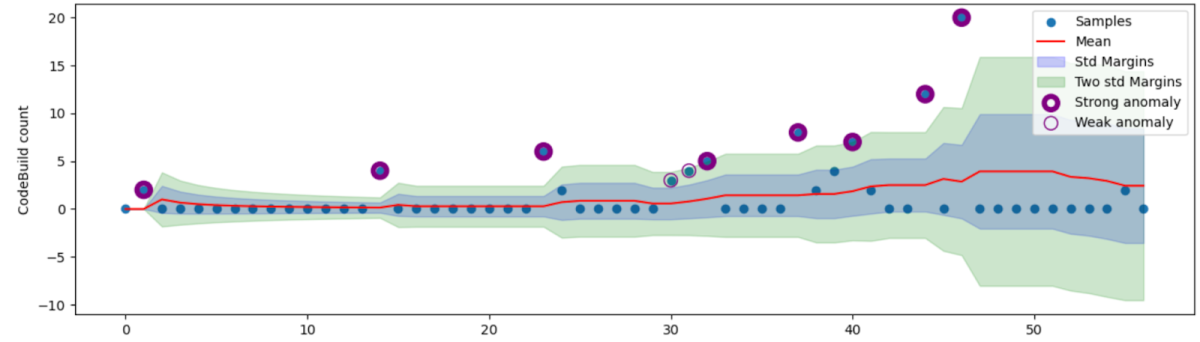

In the graphs below, which examine two different organizations, we keep track of the average and standard deviation of the number of actions that use the “cloudbuild.builds.create” permission in Google Cloud Build.

Organization A

Organization B

The blue dots represent our observations, which form a dynamic baseline over time. The baseline has many features, but the simplest way to visualize them is through the mean and standard deviation. The red line represents the moving average, and the blue and green areas indicate one and two standard deviations from the mean, respectively.
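The one- and two-standard-deviation bands in the graphs can be expressed as a small classification step. The sketch below assumes `history` is the trailing window of daily counts; the function name `classify` and the band labels are hypothetical and only mirror the shaded areas described above.

```python
from statistics import mean, pstdev


def classify(history, observation):
    """Place an observation relative to the mean +/- 1 and 2 std-dev bands."""
    mu = mean(history)
    sigma = pstdev(history)  # population std dev of the trailing window
    if sigma == 0:
        # Perfectly flat history (e.g. an unused service): any change is outside every band.
        return "within_1_sd" if observation == mu else "beyond_2_sd"
    z = abs(observation - mu) / sigma  # distance from the mean in std devs
    if z <= 1:
        return "within_1_sd"
    if z <= 2:
        return "within_2_sd"
    return "beyond_2_sd"
```

For example, with a history alternating between 9 and 11 actions per day (mean 10, standard deviation 1), an observation of 13 lands three standard deviations out and falls beyond the outer band.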

The lower graph (Organization B) presents a particularly interesting scenario. We often see services that are not used at all and then suddenly experience an uptick in usage. This could indicate that an attacker is cautiously testing the waters before ramping up their operations, so being alerted to such irregularities is very important for security teams.
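The "dormant service suddenly becomes active" pattern can be checked directly. The sketch below assumes daily counts ordered oldest first, with the last entry being the day under evaluation; the function name and the `quiet_days` parameter are hypothetical, not a documented Orca setting.

```python
def dormant_then_active(daily_counts, quiet_days=14):
    """Return True when usage appears right after a fully quiet trailing window."""
    if len(daily_counts) < quiet_days + 1:
        return False  # not enough history to call the service dormant
    # Split the most recent window into the quiet period and the day being scored.
    *history, today = daily_counts[-(quiet_days + 1):]
    return today > 0 and all(c == 0 for c in history)
```

A rule this strict ignores services with even a single stray call during the window, which is why a statistical baseline like the one in the graphs is the more general tool; the explicit check simply makes the zero-to-nonzero transition easy to see.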

The Role of AI in Anomaly Detection

Orca uses behavior modeling to process and analyze an organization’s cloud logs. For example, if you’re using AWS, Orca ingests every interaction your organization has with AWS services. Orca ingests these logs in batches every fifteen minutes and parses them to identify any activity associated with an AWS asset, user, or other relevant entity. This information is statistically modeled and then analyzed by the Orca Anomaly Analyzer, a component of Orca’s Cloud Detection and Response offering.
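As an illustration of the batching and parsing step, the sketch below groups AWS CloudTrail-style records into 15-minute windows per principal and action. The record fields `eventTime`, `eventSource`, `eventName`, and `userIdentity.arn` are standard CloudTrail fields; the helper names and the grouping key are hypothetical and do not describe Orca's actual pipeline.

```python
from collections import Counter
from datetime import datetime, timezone


def bucket_start(event_time, minutes=15):
    """Floor a CloudTrail eventTime such as "2023-06-01T12:07:33Z" to its 15-minute window."""
    ts = datetime.strptime(event_time, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return ts.replace(minute=ts.minute - ts.minute % minutes, second=0)


def count_actions(records):
    """Count events per (window, principal ARN, service, action) in one batch of records."""
    counts = Counter()
    for rec in records:
        # Some identity types carry no ARN, so fall back to a placeholder.
        principal = rec.get("userIdentity", {}).get("arn", "unknown")
        key = (bucket_start(rec["eventTime"]), principal, rec["eventSource"], rec["eventName"])
        counts[key] += 1
    return counts
```

Per-window counts like these are the kind of time series that the baselines described earlier are built on.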

IAM Role Modeling

Orca supports modeling of IAM Role behavior, so let’s take a deeper look at this example to better understand modeling in a real-world scenario.

The state of an IAM Role includes everything that we know about it: its properties (e.g. creation date, attached policies, and Orca scan-related information), its behavior (characteristics of its past behavior), and Orca-specific data (e.g. alerts associated with the role). Together, these form a characterization of the role from which its behavior can be predicted and, likewise, anomalies detected.
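The three kinds of information that make up an IAM Role state can be pictured as a simple record. The dataclass below is a hypothetical sketch; its class and field names are illustrative and are not Orca's data model.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class IamRoleState:
    """Hypothetical container for what is known about one IAM role."""
    # Properties of the role itself
    role_arn: str
    created: date
    attached_policies: list[str] = field(default_factory=list)
    # Behavior: characteristic name -> summary of past observations
    behavior: dict[str, object] = field(default_factory=dict)
    # Platform-specific data, such as IDs of alerts already associated with the role
    alert_ids: list[str] = field(default_factory=list)
```

For example, `IamRoleState("arn:aws:iam::111122223333:role/build", date(2023, 1, 10), ["ReadOnlyAccess"])` creates a state with empty behavior, to which a characteristic such as `behavior["regions_used"] = {"us-east-1"}` can later be added.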

It’s important to note that Orca is not looking for “malicious” behavior; it’s looking for behavioral characteristics that seem out of place. Such characteristics, and changes in them, are then evaluated for risk (e.g. the risk that they fall under a MITRE ATT&CK category).

A probe is a class that implements logic over a role’s time-series characteristics. Each IAM Role state contains multiple probes, which are saved in Orca’s database, and the Anomaly Analyzer models IAM Role states using these probes. Each probe attempts to establish a descriptive behavioral characteristic (for example, the regions used). Once the characteristic is established, the probe can determine whether subsequent behavior is “usual” or “anomalous”. Behaviors are established using statistical methods: a behavior observed over a longer period is more firmly “established”, which allows more subtle deviations to be detected, whereas if the observation period is not yet “long enough”, the probe’s tolerance is higher. All parameters are learned and adapted per probe and per organization.
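The relationship between how established a behavior is and how tolerant a probe should be can be sketched as follows. This hypothetical probe watches a single daily count rather than regions, and its warm-up length, base tolerance, and tolerance formula are all assumptions chosen for illustration; Orca learns its parameters per probe and per organization.

```python
from statistics import mean, pstdev


class DailyCountProbe:
    """Hypothetical probe over one time-series characteristic (a daily action count)."""

    def __init__(self, min_days=3, base_k=2.0):
        self.history = []
        self.min_days = min_days  # assumed: below this much history, nothing is judged
        self.base_k = base_k      # assumed: band width in std devs once well established

    def tolerance(self):
        # Wider band when history is short; shrinks toward base_k as history grows.
        return self.base_k * (1 + 2 * self.min_days / max(len(self.history), 1))

    def is_anomalous(self, count):
        if len(self.history) < self.min_days:
            return False  # behavior not established yet
        mu, sigma = mean(self.history), pstdev(self.history)
        # Floor sigma at 1 so a perfectly flat history does not flag tiny changes.
        return abs(count - mu) > self.tolerance() * max(sigma, 1.0)

    def observe(self, count):
        """Judge a new count against the established behavior, then learn from it."""
        anomalous = self.is_anomalous(count)
        self.history.append(count)
        return anomalous
```

With only three days of counts around 10, a count of 18 falls inside the wide early band; after thirty similar days, the band has narrowed enough that the same count is flagged.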

When the Orca Platform detects a deviation that raises concern, the alerting process is triggered. This process includes multiple steps, such as evaluating the severity of the anomaly on its own and relative to other anomalies. Ultimately, the alert is shown to the user in a clear and concise format, allowing for fast comprehension and timely response.

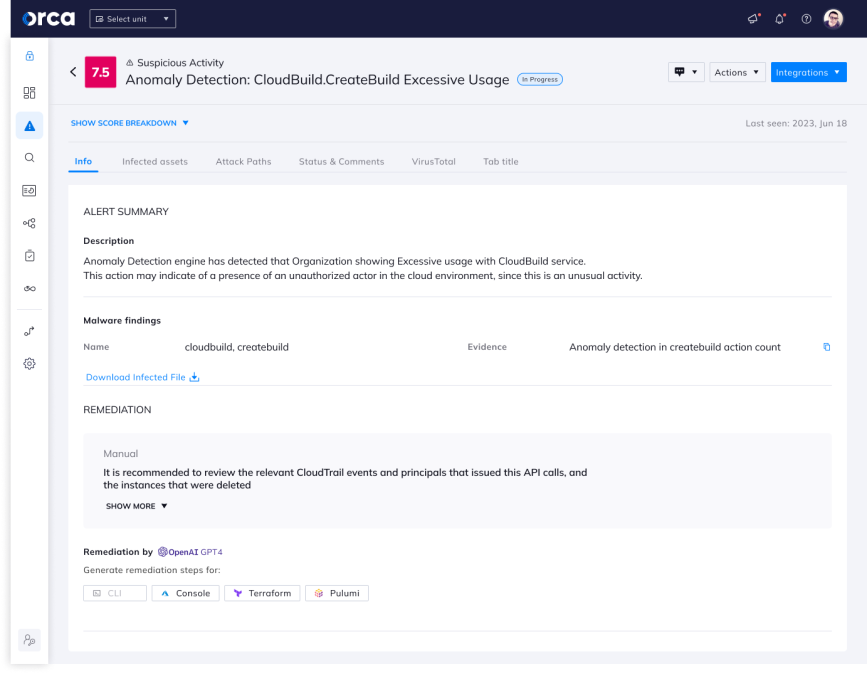

Orca alerts to an anomaly of excessive usage in the Google Cloud Build service account

Adding Cloud Context to Anomalies to Determine Severity

Unlike other solutions that look only at events and behaviors, Orca also considers what is running on cloud workloads, as well as current cloud configurations, to assess which events may indicate a malicious attack and pose a serious risk to the organization.

Leveraging a single unified data model, Orca is able to combine and analyze the following information to create contextualized alerts:

- Cloud provider logs and threat intelligence feeds

- Risks in cloud workloads such as malware and vulnerabilities

- Risks in configurations, such as IAM risk, lateral movement opportunities, and misconfigurations

- Location of the company’s crown jewels (assets containing PII and other sensitive data)

This enables Orca to understand the full context of an occurrence and quickly distinguish potentially dangerous events and anomalies from non-malicious ones.
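The general idea of raising an anomaly's severity when the affected asset carries additional risk can be sketched as below. The context flags loosely follow the list above, but the class and function names, the weights, and the thresholds are invented for illustration and are not Orca's scoring model.

```python
from dataclasses import dataclass


@dataclass
class AssetContext:
    """Hypothetical context flags for the asset an anomaly touches."""
    threat_intel_match: bool = False        # e.g. source IP seen in a threat feed
    has_malware: bool = False
    has_critical_vulns: bool = False
    iam_or_lateral_movement_risk: bool = False
    holds_sensitive_data: bool = False      # a "crown jewel", e.g. stores PII


def severity(anomaly_score, ctx):
    """Combine a 0-1 anomaly score with context into a label (illustrative weights)."""
    boost = (0.3 * ctx.threat_intel_match + 0.2 * ctx.has_malware
             + 0.1 * ctx.has_critical_vulns + 0.15 * ctx.iam_or_lateral_movement_risk
             + 0.25 * ctx.holds_sensitive_data)
    score = min(1.0, anomaly_score + boost)  # cap the combined score at 1
    if score >= 0.8:
        return "high"
    if score >= 0.5:
        return "medium"
    return "low"
```

Under these assumed weights, the same moderate anomaly (score 0.4) is rated "low" on an asset with no known risk but "medium" on an asset holding sensitive data, which is the kind of prioritization that context enables.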

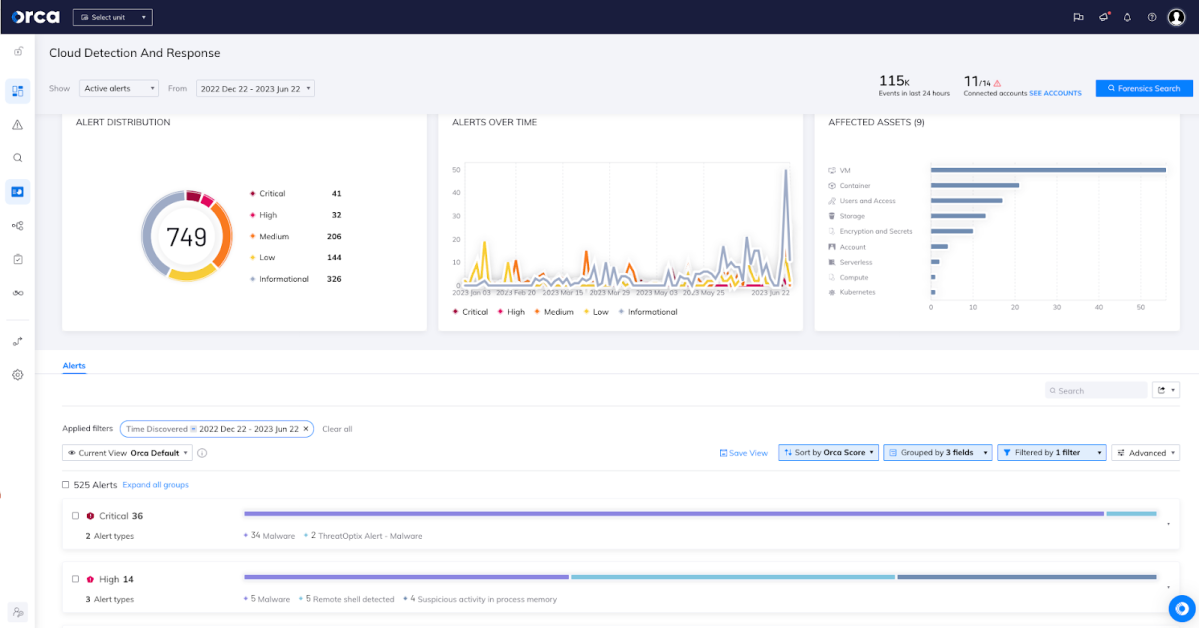

Orca’s Cloud Detection and Response dashboard shows data related to suspicious activity alerts

Learn More About Orca’s CDR and Anomaly Detection Capabilities

To learn about more use cases for Orca’s anomaly detection capabilities, read our blog post Four Examples of How Orca CDR Detects Cloud Attacks in Progress.

Want to get more hands-on? Watch a demo or sign up for a free risk assessment. See how quickly and easily the Orca Cloud Security Platform can help you identify and address risks in your cloud.