This post was originally published on The New Stack.

Securing software, IT systems, and network infrastructure requires adopting best practices, tools, and techniques that fit the organization. There is no one-size-fits-all rule for establishing a minimum baseline in cybersecurity operations.

Today, organizations have several options for securing infrastructure services, whether they are establishing a strong security posture or improving an existing one. The Center for Internet Security (CIS) benchmarks, an extensive catalog of standards used as a baseline for security best practices, are at the top of this list. With a reference guide for the minimum security controls, organizations can compare their practices against a consensus level.

This article explores the CIS benchmarks, including what they are, why they were established, and how to effectively evaluate them in the context of cloud security.

What are CIS Benchmarks?

The CIS benchmarks are consensus-based configuration baselines and best practices for securing systems. They are divided into categories, each focused on a particular type of technology. These categories include:

- Operating systems

- Server software

- Desktop software

- Mobile devices

- Networks

- Cloud providers

- Multi-function print devices

In other words, the CIS benchmarks framework provides a list of the minimum required security controls and practices for running secure workloads.

The benchmarks come with complete reference documents that catalog them one by one, using specific criteria like applicability, severity, rationale, and auditing steps.

Fig. 1 – Example of CIS for Linux

In addition to the benchmark documents, the CIS also offers hardened images for the major public cloud providers. These images save security teams the time they would otherwise spend baking the recommendations into their VMs from scratch.

Before we discuss benchmark rules in depth, let’s review an example of a benchmark.

Example Benchmark

Let’s take a look at one of the benchmarks from the CIS Distribution Independent Linux guide:

1.3.2 Ensure filesystem integrity is regularly checked (scored)

Profile Applicability:

Level 1 – Server

Level 1 – Workstation

Description: Periodic checking of the filesystem integrity is needed to detect changes to the filesystem.

Rationale: Periodic file checking allows the system administrator to determine on a regular basis if critical files have been changed in an unauthorized fashion.

Audit: Run the following to verify that aidecheck.service and aidecheck.timer are enabled and running:

# systemctl is-enabled aidecheck.service

# systemctl status aidecheck.service

# systemctl is-enabled aidecheck.timer

# systemctl status aidecheck.timer

Remediation: Run the following commands:

# cp ./config/aidecheck.service /etc/systemd/system/aidecheck.service

# cp ./config/aidecheck.timer /etc/systemd/system/aidecheck.timer

# chmod 0644 /etc/systemd/system/aidecheck.*

# systemctl reenable aidecheck.timer

# systemctl restart aidecheck.timer

# systemctl daemon-reload

There are a few important characteristics of each benchmark that you’ll want to understand in detail:

- Applicability – This shows which systems or services this benchmark applies to (since the current guide is for Linux, the main options are servers or workstations).

- Scored vs. unscored – A status of scored (or automated) means that compliance with the benchmark can be checked by automated tooling and built into a workflow, which leads to quicker implementation and faster identification of misalignments. A status of unscored (or manual) means that automated tooling cannot provide a pass/fail assessment, which makes auditing more difficult.

- Audit steps included – Whenever possible, a list of auditing steps is included so that the reader can quickly check the benchmark.

- Remediation steps included – This is a series of commands that bring the system into compliance with the benchmark, either during initial setup or after a failed audit.
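
To make the idea of an automated pass/fail check more concrete, here is a minimal Python sketch that wraps part of the audit step from the example benchmark. It only covers the `systemctl is-enabled` half of the audit and treats exit status 0 as a pass. The function names (`systemctl_is_enabled`, `audit_units`, `passed`) are hypothetical and are not part of any CIS tooling.

```python
import subprocess
from typing import Callable, Dict, List

# Units named in the example benchmark's commands (assumed to exist on the host).
UNITS = ["aidecheck.service", "aidecheck.timer"]


def systemctl_is_enabled(unit: str) -> bool:
    """Return True when `systemctl is-enabled <unit>` exits with status 0."""
    try:
        result = subprocess.run(
            ["systemctl", "is-enabled", unit],
            capture_output=True,
            text=True,
            check=False,
        )
    except FileNotFoundError:
        # systemctl is not available here (for example, not a systemd host).
        return False
    return result.returncode == 0


def audit_units(
    units: List[str],
    is_enabled: Callable[[str], bool] = systemctl_is_enabled,
) -> Dict[str, bool]:
    """Map each unit name to a pass (True) or fail (False) result."""
    return {unit: is_enabled(unit) for unit in units}


def passed(results: Dict[str, bool]) -> bool:
    """The audit passes only when every individual unit check passed."""
    return all(results.values())
```

Passing the check function in as a parameter keeps the audit logic testable on machines that do not run systemd. A real compliance scanner would also need to cover the `systemctl status` part of the audit.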

Now that you have a better picture of what the benchmarks look like, we’ll give you some specific recommendations for how to use them in cloud security workloads.

How to Use CIS Benchmarks in Cloud Security

Below, we’ll discuss key cloud security areas where the CIS benchmark recommendations apply.

CIEM

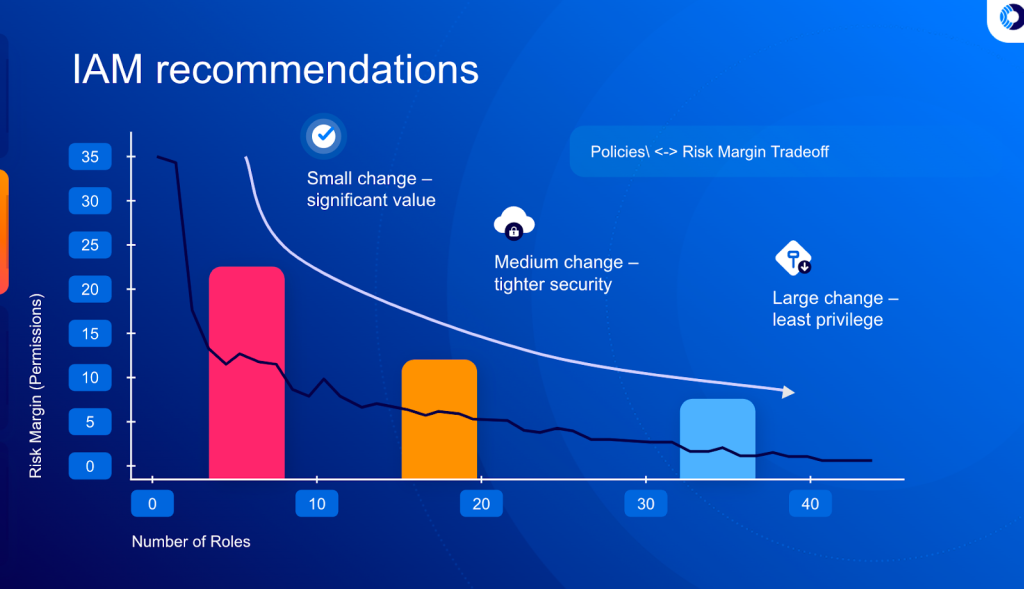

Identity security services are a must-have for interfacing with any reputable cloud provider. However, the ineffective use or misconfiguration of access control policies can significantly weaken an organization’s overall security posture.

A common risk when configuring cloud infrastructure entitlement management (CIEM) is having overly permissive identities or too many policies for the security teams to maintain. For specific identity security rules, you need to review the CIS benchmark documents for each cloud provider (AWS, Azure, and so on).

For example, if you are operating with AWS, the CIS Amazon Web Services Foundations benchmark contains more than 23 benchmarks related to IAM. These recommendations need to be evaluated, applied to the account, and audited for compliance.

That’s why many organizations use automated tools to monitor CIS compliance. CIS also offers free and premium tools that you can use to scan IT systems and generate CIS compliance reports. These tools alert system admins if the existing configurations don’t meet the CIS benchmark recommendations.
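
As an illustration of the kind of check such tooling might run against overly permissive identities, the following Python sketch flags IAM policy statements that allow every action on every resource. It assumes the policy is already loaded as a dictionary in the standard IAM JSON policy format. The helper names are hypothetical, and the sketch deliberately ignores conditions, `NotAction`, and service-level wildcards such as `s3:*`.

```python
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """IAM policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def full_admin_statements(policy: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the Allow statements that grant "*" actions on "*" resources."""
    flagged = []
    for statement in _as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action"))
        resources = _as_list(statement.get("Resource"))
        if "*" in actions and "*" in resources:
            flagged.append(statement)
    return flagged


# Example policy with one scoped statement and one full-admin statement.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
    ],
}
```

Calling `full_admin_statements(example_policy)` returns only the second statement, which a reviewer could then narrow down to the permissions the identity actually needs.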

Alternatively, you can tackle this problem by offloading the risk to a dedicated cloud security custodian. By using a solution like Orca’s IAM Remediation, which can manage and provide accurate suggestions for IAM policies, you can relieve your team of the burden of implementing the baseline controls manually.

Fig. 2 – IAM Recommendations (source: https://orca.security/)

Data Security

Data security represents another critical area that warrants proper compliance. Data breaches and the exposure of sensitive PII can be devastating, both financially (since the lack of safety controls can result in lawsuits and fines) and in terms of reputational damage.

Since private data is a primary target of adversaries and foreign agents, data protection appears in many areas of the CIS benchmarks. For example, there are dedicated benchmarks for key rotations, setting the right permissions for data stored on disks, and ensuring encryption both at rest and in transit. The following are a few examples:

- In Kubernetes (GKE): 6.9.1 (Storage) – Consider enabling Customer-Managed Encryption Keys (CMEK) for GKE Persistent Disks (PD).

- In AWS: 2.8 – Ensure rotation for customer-created CMKs is enabled.

- In Red Hat Linux: 2.2.20 – Ensure rsync is not installed or the rsyncd service is masked (automated).

- In Red Hat Linux: 1.3.1 – Ensure AIDE is installed (automated).

Again, to support these benchmarks, you’ll need to have a catalog of your organization’s systems and software, validate the existing security profiles, and make adjustments to cover the baseline CIS recommendations when needed.
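
For instance, the key rotation benchmark listed above could be validated against a key inventory with a few lines of Python. This is only a sketch: `keys_without_rotation` and the `rotation_enabled` callback are hypothetical names, and how you look up the rotation status depends on your provider and tooling.

```python
from typing import Callable, Iterable, List


def keys_without_rotation(
    key_ids: Iterable[str],
    rotation_enabled: Callable[[str], bool],
) -> List[str]:
    """Return the IDs of keys for which the rotation lookup reports False."""
    return [key_id for key_id in key_ids if not rotation_enabled(key_id)]


# Hypothetical inventory: key ID mapped to its rotation status.
inventory = {"key-a": True, "key-b": False, "key-c": True}
non_compliant = keys_without_rotation(inventory, lambda key_id: inventory[key_id])
```

On AWS, the callback could plausibly wrap the KMS `GetKeyRotationStatus` API (for example, through an SDK client), but that wiring is left out here to keep the example self-contained.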

The Orca Cloud Security Platform provides a Data Security Posture Management (DSPM) module that deals specifically with data security remediation out of the box. It offers a context-driven view of any sensitive data exposures, misconfigurations, and current risks inside the organization’s data stores. Having a continuous service for data security compliance simplifies security operations and improves overall safety.

Kubernetes Benchmark

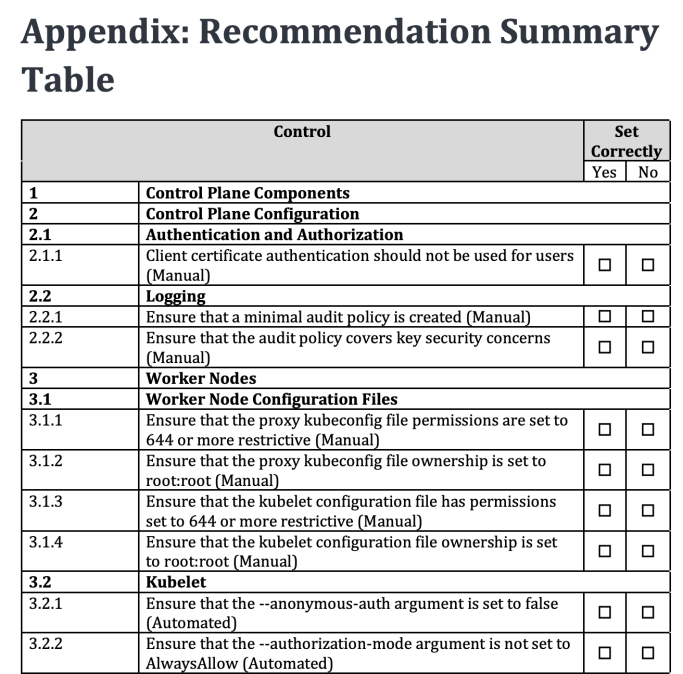

Kubernetes security is of considerable interest nowadays since many organizations are migrating their workloads to this technology. To ensure compliance and reliability, an up-to-date security baseline for Kubernetes workloads is a must.

More specifically, security controls need to account for the Kubernetes architectural components and their potential weaknesses. CIS provides extensive benchmark material for securing K8s workloads that covers both base distributions and cloud providers.

Following the recommended approaches for K8s requires an extensive orientation process, since a typical deployment consists of many moving parts and components. For example, there are more than 60 recommendations in the CIS Google Kubernetes Engine (GKE) benchmark to date.

Fig. 3 – GKE Recommendations (source: https://www.cisecurity.org/benchmark/kubernetes)

The ephemeral nature of pods does not make this job any easier. You’ll need to invest a lot of time and resources to achieve security automation that covers the CIS benchmark levels.
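
To give a sense of what one small piece of that automation might look like, the sketch below scans a Pod manifest (already parsed into a Python dictionary) for containers that request privileged mode, which is one of the settings the CIS Kubernetes benchmarks recommend minimizing. The function name is hypothetical, and the check covers only `containers` and `initContainers`, not ephemeral containers or admission-time enforcement.

```python
from typing import Any, Dict, List


def privileged_containers(pod: Dict[str, Any]) -> List[str]:
    """Return names of containers that set securityContext.privileged to true."""
    spec = pod.get("spec") or {}
    names = []
    for key in ("initContainers", "containers"):
        for container in spec.get(key) or []:
            security_context = container.get("securityContext") or {}
            if security_context.get("privileged") is True:
                names.append(container.get("name", "<unnamed>"))
    return names


# Example manifest with one privileged and one unprivileged container.
example_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [
            {"name": "app", "image": "example/app:1.0"},
            {"name": "debug", "image": "example/debug:1.0",
             "securityContext": {"privileged": True}},
        ]
    },
}
```

Because pods come and go, a check like this is usually more useful when run continuously or at admission time than as a one-off audit.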

An agentless security paradigm can help scale security recommendations and best practices while supporting thousands of containers and nodes. With Orca’s Container and Kubernetes Security module, you get better insights into any security gaps in your K8s clusters within minutes.

Next Steps with CIS Benchmarks

If you want to learn more about the CIS benchmarks, I recommend downloading the free resources from the official site. Take some time to review the benchmark recommendations and check which areas you should focus on. This will give you the context you need to understand how to secure your systems and why each control matters.

Next, you’ll want to evaluate and automate the relevant CIS benchmarks for your organization. This helps you separate the minimum required rules from unnecessary controls or policies, and improves your overall security level.
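
One way to approach this evaluation is to record each check result with its profile level and automation status, then report on the subset you have chosen as your baseline. The following Python sketch assumes a simple, hypothetical `CheckResult` record. It is not the output format of any CIS tool, and the sample results are made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class CheckResult:
    recommendation_id: str  # for example "1.3.2"
    level: int              # CIS profile level (1 or 2)
    automated: bool         # True for scored/automated recommendations
    passed: bool            # outcome of the audit step


def in_scope(results: Iterable[CheckResult], max_level: int = 1) -> List[CheckResult]:
    """Keep automated checks at or below the chosen profile level."""
    return [r for r in results if r.automated and r.level <= max_level]


def pass_rate(results: Iterable[CheckResult]) -> float:
    """Fraction of checks that passed; 0.0 when there is nothing to score."""
    results = list(results)
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


# Made-up sample results, purely for illustration.
sample = [
    CheckResult("1.3.1", level=1, automated=True, passed=True),
    CheckResult("1.3.2", level=1, automated=True, passed=False),
    CheckResult("9.9.9", level=2, automated=True, passed=False),
    CheckResult("8.8.8", level=1, automated=False, passed=False),
]
baseline_rate = pass_rate(in_scope(sample))
```

Here only the two automated Level 1 checks are in scope, so the baseline pass rate reflects the minimum required controls rather than every rule in the document.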

Finally, you’ll want to level up your infrastructure security baseline by utilizing a cloud native application protection platform (CNAPP) like Orca Security. Since such platforms can offload most of the menial tasks through automation and advanced technology, their benefits are multiplied. Request a demo or sign up for a free cloud risk assessment to see how the Orca Cloud Security Platform can help you achieve a new level of security and visibility in the cloud.